
Updated: 2026-01-27

Folitixorin Calcium


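Overview

Basic information

Active organizations: -

License holders: -

Highest R&D phase: Phase 3 clinical (terminated)

First approval date: -

Highest R&D phase (China): -

Special review: -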


Structure/Sequence

Molecular formula: C20H23CaN7O6

InChIKey: XTTQVUYZSJQXLP-ZEDZUCNESA-N

CAS No.: 133978-75-3

Related

6 clinical trials related to Folitixorin Calcium

NCT00663481

A Single Dose, Within Subject, 3 Period, Pharmacokinetic Bridging Study of CoFactor Formulations and of Leucovorin Administered Intravenously in Healthy, Adult Subjects.

The purpose of this study is to determine the safety and tolerability of CoFactor in healthy subjects.

Start date: 2008-04-01

Sponsor/collaborator: -

NCT00337389

A Phase III Multi-Center Randomized Clinical Trial to Evaluate the Safety and Efficacy of CoFactor and 5-Fluorouracil (5-FU) Plus Bevacizumab Versus Leucovorin and 5-FU Plus Bevacizumab as Initial Treatment for Metastatic Colorectal Carcinoma

To compare the progression-free survival time (PFS) in patients treated with 5-FU modulated with CoFactor (plus bevacizumab) to 5-FU modulated with leucovorin (plus bevacizumab) in patients with Metastatic Colorectal Cancer.

Start date: 2006-05-01

Sponsor/collaborator: -

NCT00434369

A Multi-Center, Open-Label, Single-Arm Phase II Trial Assessing the Efficacy and Safety of Weekly Bolus Infusions of 5-Fluorouracil Combined With CoFactor (5-10 Methylenetetrahydrofolate) in Advanced Breast Cancer Patients Who Failed Anthracycline and Taxane Chemotherapy Regimens


Start date: 2006-02-01

Sponsor/collaborator: -

100 项与 Folitixorin Calcium 相关的临床结果

登录后查看更多信息

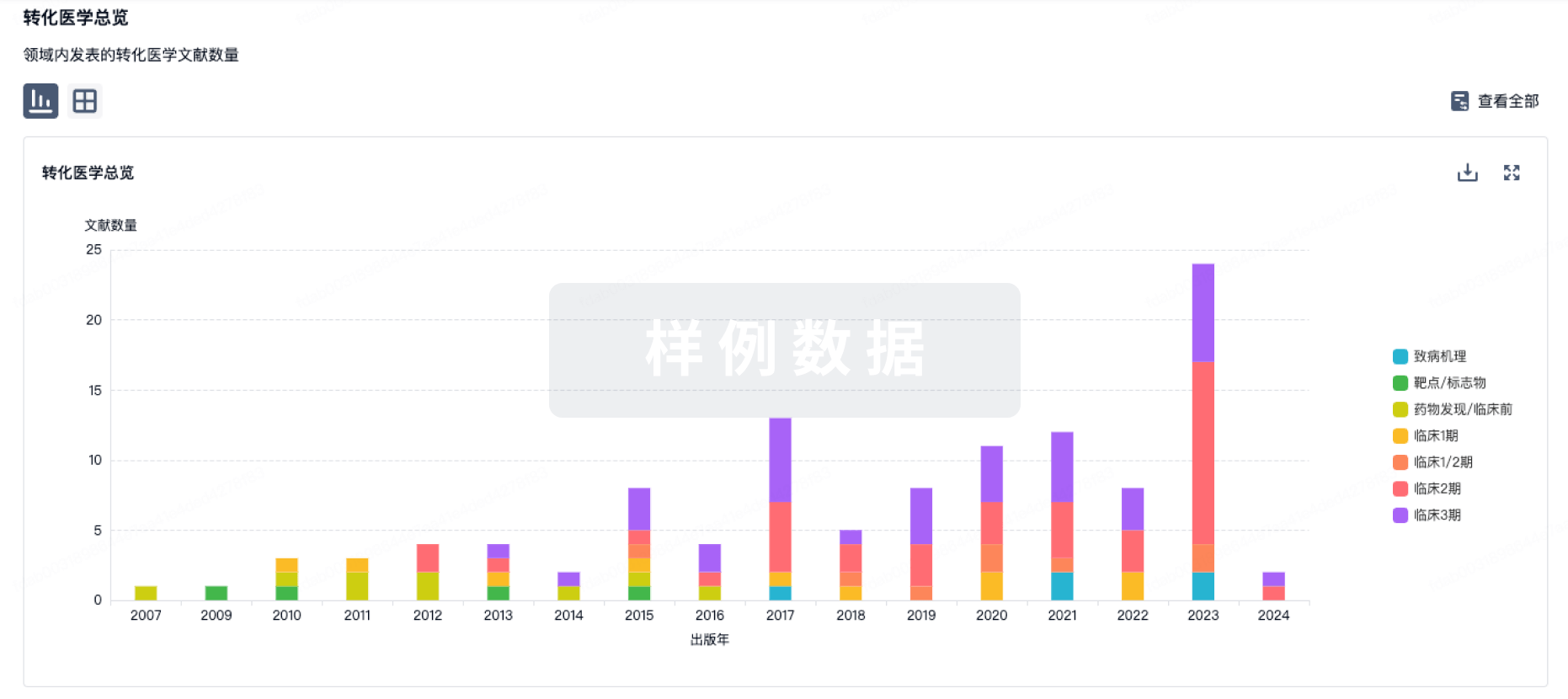

100 项与 Folitixorin Calcium 相关的转化医学

登录后查看更多信息

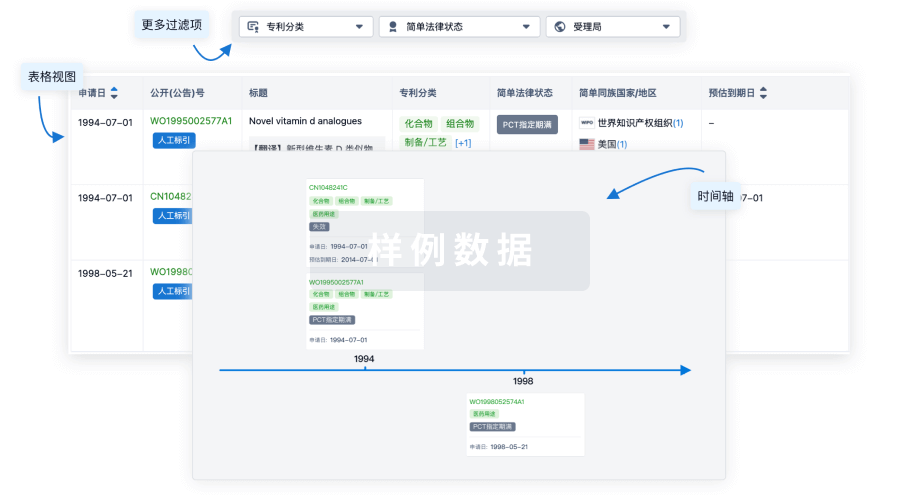

100 项与 Folitixorin Calcium 相关的专利(医药)

登录后查看更多信息

681 literature records (pharmaceutical) related to Folitixorin Calcium

2025-09-01 · METABOLIC ENGINEERING

Insights into the methanol utilization capacity of Y. lipolytica and improvements through metabolic engineering

Article

Authors: Newell, William ; Ledesma-Amaro, Rodrigo ; Jiang, Wei ; Haritos, Victoria ; Borah Slater, Khushboo ; Liu, Long ; Liu, Jingjing ; Bell, David ; Coppens, Lucas ; Peng, Huadong

Methanol is a promising sustainable alternative feedstock for green biomanufacturing. The yeast Yarrowia lipolytica offers a versatile platform for producing a wide range of products but it cannot use methanol efficiently. In this study, we engineered Y. lipolytica to utilize methanol by overexpressing a methanol dehydrogenase, followed by the incorporation of methanol assimilation pathways from methylotrophic yeasts and bacteria. We also overexpressed the ribulose monophosphate (RuMP) and xylulose monophosphate (XuMP) pathways, which led to significant improvements in growth with methanol, reaching a consumption rate of 2.35 g/L in 24 h and a 2.68-fold increase in biomass formation. Metabolomics and Metabolite Flux Analysis confirmed methanol assimilation and revealed an increase in reducing power. The strains were further engineered to produce the valuable heterologous product resveratrol from methanol as a co-substrate. Unlike traditional methanol utilization processes, which are often resource-intensive and environmentally damaging, our findings represent a significant advance in green chemistry by demonstrating the potential of Y. lipolytica for efficient use of methanol as a co-substrate for energy, biomass, and product formation. This work not only contributes to our understanding of methanol metabolism in non-methylotrophic organisms but also paves the way for achieving efficient synthetic methylotrophy towards green biomanufacturing.

2025-07-01·Science China-Chemistry

Dual roles of the sacrificial agent in efficient solar-to-chemical production by nonphotosynthetic Moorella thermoacetica

Authors: Chen, Wei ; Zhou, Xudong ; Xiao, Yong ; Li, Junpeng ; He, Yi ; Ren, Chongyuan ; Tian, Xiaochun ; Zhao, Feng ; Bai, Rui ; Chen, Liqi

The integration of microorganisms and photosensitizers presents a promising approach to chemical production utilizing solar energy. However, the current system construction process remains complex. Herein, we introduce a straightforward and efficient solar-to-chemical conversion system that combines the dissolved photosensitizer Eosin Y with the non-photosynthetic bacterium Moorella thermoacetica. Under light radiation, acetate production increased to 5.1 μM h⁻¹ μM⁻¹ catalyst, exceeding the previously reported maximum by 5.9-fold, with a quantum efficiency of 17.6%. The soluble photosensitizer Eosin Y can penetrate the cell and directly engage in intracellular energy metabolism, significantly enhancing intracellular ATP and NADPH/NADP+ levels. Within this biohybrid system, the sacrificial agent triethanolamine played a dual role: (1) providing continuous photoelectron generation by Eosin Y, enhancing intracellular reducing power, and facilitating carbon fixation via the Wood-Ljungdahl pathway; and (2) its oxidation product, formaldehyde, served as a critical intermediate and a direct precursor for methylenetetrahydrofolate in the Wood-Ljungdahl pathway, consequently simplifying reaction steps and markedly boosting acetate yield. This study provides a simple microorganism-photosensitizer biohybrid system to produce acetate and sheds light on the multifaceted roles of sacrificial agents, paving the way for efficient solar energy conversion with nonphotosynthetic bacteria.

2025-06-04·JOURNAL OF AGRICULTURAL AND FOOD CHEMISTRY

Biotransformation of Kaempferol to Icaritin in Engineered Saccharomyces cerevisiae

Article

Authors: Zhu, Si-Yu ; Li, Bing-Zhi ; Zhang, Chuan-Xi ; Zhang, Lu-Jia ; Yuan, Ying-Jin ; Liu, Zhi-Hua ; Li, Na

Flavonoids are bioactive natural products known for their pharmaceutical properties and health-promoting applications. Microbial transformation offers a promising and sustainable approach for the biosynthesis of flavonoids. However, challenges such as the lack of well-established synthesis pathways and inefficient heterologous expression of key enzymes limit the flavonoid production such as icaritin. Here, a Saccharomyces cerevisiae strain was engineered to produce icaritin from kaempferol through a metabolic engineering strategy. Enzyme screening strategies identified the functional prenyltransferases, enabling the construction of a bioconversion pathway. The engineered isopentenol and mevalonate pathway boosted the supply of dimethylallyl pyrophosphate, producing 10.4 mg/L 8-prenylkaempferol. Redesigning the N-terminal of prenyltransferase resulted in a 7.5-fold increase in the titer of 8-prenylkaempferol. Cofactor engineering strategies of S-adenosyl-l-methionine recycling resulted in a substantial 139.8% increase in icaritin production. Additionally, the rational design of rate-limiting enzymes significantly improved catalytic performance, enhancing overall icaritin production. Ultimately, engineered S. cerevisiae transformed kaempferol to icaritin successfully through engineered enzymatic modifications with a titer of 14.4 mg/L. This study offers valuable insights into the enzyme design and sustainable natural products production.

24 news items (pharmaceutical) related to Folitixorin Calcium

2026-01-26


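Contents

1. New Drug R&D (03): Classic Targets: Protein Composition and Structure

2. New Drug R&D (04): Classic Targets: Enzymes

3. New Drug R&D (05): Classic Targets: G Protein-Coupled Receptors (GPCRs)

4. New Drug R&D (06): Classic Targets: Ion Channels

I. New Drug R&D (03): Classic Targets: Protein Composition and Structure

01

Overview of protein drug targets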

随着药物发现过程的不断发展,人们越来越专注于对大分子靶点的解析。以诺华开发的BCR-ABL抑制剂伊马替尼(格列卫)为例,其成功的关键在于精准靶向白血病细胞特有的融合蛋白激酶。在20世纪上半叶,由于受到当时技术的限制,有关药物-蛋白相互作用的结构信息和机制的研究停滞不前。随着技术的发展和药物发现过程的推进,X射线结晶学、分子模拟、PCR和重组DNA等技术手段为药物作用的生物靶标提供了越来越清晰的图像。冷冻电镜技术的突破使GPCR结构解析分辨率达到1.8Å,较十年前提升3倍。虽然可成药的靶点(可以与治疗药物作用的大分子)的具体数量仍然存在争议,但针对致病性生物体和人类的基因组测序有望解决这一难题。

2003年完成的人类基因组计划证明了这一点,人类生存所需的23对染色体是由大约30亿个DNA碱基对组成的,这些碱基对组成了20000~25000个蛋白质编码基因。微生物基因组虽小,但仍含有大量的蛋白编码基因。结核分枝杆菌基因组包含4018个基因,其中368个被鉴定为潜在药物靶点。虽然并非所有的这些基因和基因翻译产物都能直接或间接地参与发病过程、疾病进展或者药物治疗,但是用于药物发现的大分子靶点的数量仍然是相当可观的。最近的研究表明大约有5000个潜在的“可成药”的大分子靶点适合用于小分子药物开发,另外有3200个靶点可能适用于生物学治疗(图3.1)。《自然·药物发现》2023年统计显示,目前已有893个靶点进入临床试验阶段,其中GPCR类靶点占比达34%。

图3.1 人类和病原体基因组绘制的完成为理解潜在的药物靶点提供了丰富的信息。

以HER2基因为例,其过表达导致20-30%乳腺癌,针对该靶点的曲妥珠单抗使患者5年生存率提升37%。但并非所有被发现的基因都可作为药物作用的靶点来进行研究。用于药物治疗的有效靶点应该既是可药用的基因又是可致病的基因。不能治疗疾病的可药用基因是无效的靶点,而致病基因被调控后如果不能有效地控制疾病进程也不太可能成为药物开发的靶点。

大自然很擅于创造各种各样的生物大分子,事实上,对已上市药物的分析表明,绝大多数药物的靶点属于四种大分子,即酶(enzyme)、G蛋白偶联受体(G-protein-coupled receptor,GPCR)、离子通道(ion channel)和转运蛋白(transporter)。以PDB数据库统计,这四类靶点占所有已解析药物-靶点复合物结构的82%。虽然还有其他的一些治疗靶点,如蛋白-蛋白相互作用和DNA-蛋白相互作用,但相对而言,研究这四大类药物靶点对药物的成功发现是更加重要的。此外,这些大分子不仅仅是药物发现中的靶点,还经常被作为工具用于发现潜在治疗药物的体外筛选。例如hERG离子通道已成为心脏安全性筛选的金标准,每年避免约23%的候选药物因QT间期延长风险被淘汰。值得一提的是,制药行业目前所涉及的靶点仅仅是所有潜在靶点的九牛一毛。目前,市面上有超过21000个上市药物,但是这些药物仅含有不到1400种结构,通过与324个药物靶点相互作用而产生治疗作用(图3.2)。2022年《科学》研究指出,前50大靶点集中了68%的上市药物,反映出现有药物靶点的开发高度集中化。

图3.2 虽然已上市药物的数量超过21,000个,但核心治疗分子仅1370个。

以他汀类药物为例,7种分子通过抑制HMG-CoA还原酶占据全球心血管药物市场的45%份额。生物大分子药物虽只占12.2%,但单克隆抗体药物近五年增长率达28%,远超小分子药物的4.5%。

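Table 1. Major drug target classes and their characteristics

| Target class | Function | Representative drug | Mechanism of action |
| --- | --- | --- | --- |
| Enzyme | Catalyzes biochemical reactions (e.g., kinases, proteases) | Imatinib (BCR-ABL kinase inhibitor) | Binds competitively at the active site and blocks the substrate |
| GPCR | Transmembrane signal transduction (e.g., adrenergic receptors) | Propranolol (β-adrenergic receptor antagonist) | Blocks ligand binding or activation of the G protein-coupled pathway |
| Ion channel | Regulates ion transport across the membrane (e.g., sodium/potassium channels) | Lidocaine (sodium channel blocker) | Physically occludes the pore or allosterically modulates channel opening and closing |
| Transporter | Moves substances across the membrane (e.g., SERT, GLUT) | Fluoxetine (5-HT reuptake inhibitor) | Inhibits substrate transport so that the neurotransmitter accumulates |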

02

蛋白的结构层级

在考虑各种药物靶点的整体结构和功能之前,必须先了解蛋白的基本结构。虽然DNA携带了生物体的遗传密码,但蛋白质才是真正具有生物功能的物质。蛋白质具有多种多样的活性,包括催化反应(如过氧化氢酶每秒分解400万过氧化氢分子)、运输(血红蛋白携氧量达1.34mL O2/g)、机械支撑(胶原蛋白抗张强度媲美钢铁)等。ATP合成酶的旋转马达结构每秒生产150个ATP分子,效率高达90%。虽然不同的蛋白质之间有很大的差异,但仍然有许多相似之处。

蛋白质的三维结构包括四个结构层级:

·一级结构:如胰岛素A链(21aa)和B链(30aa)的特定序列;

·二级结构:α螺旋(血红蛋白中占75%)、β折叠(免疫球蛋白轻链含12条β股)、β转角和无规卷曲;

·三级结构:肌红蛋白的球状折叠形成疏水口袋结合血红素;

·四级结构:血红蛋白由4个亚基组装,协同结合氧气。

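Table 2. Structural levels and their functional significance

| Structural level | Definition | Stabilizing forces | Functional significance |
| --- | --- | --- | --- |
| Primary structure | Linear amino acid sequence (N-terminus → C-terminus) | Peptide bonds (covalent) | Determines the folding pathway and final conformation |
| Secondary structure | Local regular folding (α-helix, β-sheet) | Hydrogen bonds (between backbone amide groups) | Forms structural units (e.g., transmembrane α-helices) |
| Tertiary structure | Overall three-dimensional conformation (assembly of domains) | Hydrophobic interactions, disulfide bonds, salt bridges | Exposes functional sites (e.g., enzyme active centers) |
| Quaternary structure | Multi-subunit complexes (e.g., the hemoglobin tetramer) | van der Waals forces, hydrogen bonds, ionic interactions | Cooperative regulation of function (allostery) |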

(1) 蛋白质的一级结构

·蛋白质的一级结构 (Primary Structure)是指蛋白质分子中氨基酸的排列顺序。一级结构是蛋白质最基本的结构层次,决定了蛋白质的二级、三级和四级结构。

·蛋白质一级结构主要通过肽键连接,除此之外,肽链内部还可能存在二硫键。

·在翻译过程中,多肽链是由N-末端(氨基末端)开始合成,到C-末端(羧基末端)结束,因此在书写多肽序列的时候也是从N-端开始书写。

(2) 蛋白质的二级结构

蛋白质的二级结构 (Secondary Structure)是指蛋白质主链原子的局部空间排列,不涉及氨基酸侧链的构象。主要的二级结构形式包括α-螺旋、β-折叠、β-转角和无规卷曲。

1) α-螺旋 (α-helix)

α-螺旋是一种螺旋状结构,每一圈螺旋大约包含3.6个氨基酸残基,螺距 (沿螺旋轴方向上升一圈的距离)约为0.54 nm。螺旋的走向一般右手螺旋,从螺旋的一端看过去,螺旋的旋转方向是顺时针的。在α-螺旋中,维持α-螺旋结构稳定的主要作用力是氢键,每个氨基酸残基的羰基 (C=O)与它后面第4个氨基酸残基的氨基 (N-H)之间形成氢键。这些氢键几乎与螺旋轴平行,它们的存在使得α-螺旋能够保持其螺旋形状。
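The helix geometry quoted above (3.6 residues per turn, 0.54 nm pitch, hydrogen bonds from residue i to residue i+4) can be turned into a small calculation. The following is a minimal sketch for an idealized α-helix; the function names are illustrative and not taken from any library.

```python
# Geometry of an idealized alpha-helix, using the constants quoted in the text.
RESIDUES_PER_TURN = 3.6
PITCH_NM = 0.54  # rise along the helix axis per full turn, in nm


def rise_per_residue_nm():
    """Axial rise contributed by a single residue (pitch / residues per turn)."""
    return PITCH_NM / RESIDUES_PER_TURN


def helix_length_nm(n_residues):
    """Approximate axial length of an ideal alpha-helix with n_residues residues."""
    if n_residues < 0:
        raise ValueError("n_residues must be non-negative")
    return n_residues * rise_per_residue_nm()


def hbond_partner(i):
    """Index of the residue whose N-H pairs with the C=O of residue i (i -> i+4)."""
    return i + 4
```

With these constants each residue advances the helix by about 0.15 nm, so a 20-residue helix spans roughly 3 nm along its axis.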

2) β-折叠 (β-sheet)

β-折叠由两条或多条几乎完全伸展的肽链 (或同一肽链的不同部分)平行排列形成,相邻肽键之间通过氢键相连。氢键是在一条肽链的羰基 (C=O)与另一条肽链的氨基 (N-H)之间形成的,并且氢键与肽链的走向几乎垂直。

3) β-转角 (β-turn)

β-转角通常由4个连续的氨基酸残基组成,第一个氨基酸残基的羰基 (C=O)与第四个氨基酸残基的氨基 (N-H)之间形成氢键,使肽链急剧扭转。其一般位于蛋白质表面,能够改变肽链方向。

在β-转角中,甘氨酸 (Gly)和脯氨酸 (Pro)是比较常见的,因为甘氨酸侧链为氢原子,具有最小的空间位阻,能够为转角的形成提供便利;而脯氨酸具有特殊的环状结构,其存在会使肽链产生刚性的弯曲,有助于β-转角的形成,尤其是在需要固定角度转折的位置。

4) 无规卷曲 (random coil)

无规卷曲是肽链中没有确定规律性的部分,构象比较灵活,主链骨架原子的排列没有明显的周期性,但仍受多种因素限制。

在使用Alphafold 3预测蛋白结构时,由于无规卷曲结构的构象灵活,因此往往难以像β-折叠那样能够进行很好地预测。

图3.4 (a)α螺旋在角蛋白中形成超螺旋结构,赋予毛发200MPa的抗拉强度;(b)β折叠在丝心蛋白中平行排列,造就蚕丝优异的韧性;(c)β转角使多肽链在有限空间内折叠,如CDR环决定抗体特异性。

(3) 蛋白质的三级结构

蛋白质的三级结构 (Tertiary Structure)是指整条肽链中所有原子在三维空间的整体排布,包括主链和侧链的全部原子的空间排列。蛋白质的二级结构不涉及氨基酸侧链 (R基团)的构象,而三级结构是在二级结构的基础上,通过侧链基团之间的相互作用进一步折叠形成的。

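Tertiary structure involves several kinds of chemical bonds and noncovalent forces: hydrophobic interactions (hydrophobic side chains cluster in the interior, away from water), hydrogen bonds, ionic bonds (electrostatic interactions between positively and negatively charged side chains), and van der Waals forces. Disulfide bonds also play an important role in stabilizing tertiary structure, because they can link different regions of the polypeptide chain.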

三级结构使蛋白质具有特定的形状和功能,例如酶的活性中心往往是在三级结构形成的特定空间区域内,这个区域的形状和化学性质能够特异性地结合底物并催化反应。

1) 锌指结构

锌指结构 (Zinc finger)是一种常见的蛋白质结构模体 (具有特定功能的短序列或局部结构模式),是由一段含有特定氨基酸序列的多肽链折叠而成,由几个氨基酸 (通常是半胱氨酸和组氨酸)与一个锌离子 (Zn2+)通过配位键结合,形成类似手指形状的结构。

锌指结构是氨基酸残基与锌离子相互作用形成的具有特定三维空间形状的结构,属于蛋白质的三级结构。其在基因表达调控、核酸识别等方面具有重要作用,许多转录因子中都含有锌指结构来识别特定的DNA序列。

2)亮氨酸拉链

亮氨酸拉链 (leucine zipper)也是蛋白质中的一种常见三维结构模体。亮氨酸拉链由两个α-螺旋组成,其主要特征是亮氨酸残基在每七个氨基酸中出现一次,每个α-螺旋上的亮氨酸残基分布在螺旋的一侧。当两个α-螺旋相互靠近时,这些亮氨酸残基就像拉链的齿一样相互交叉,这种作用主要是通过亮氨酸残基之间的疏水相互作用实现的。

亮氨酸拉链的主要功能之一是作为二聚体化结构域,使蛋白质能够形成二聚体,这种二聚体化作用对于许多蛋白质的功能发挥至关重要。
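The heptad pattern described above (a leucine at every seventh position) lends itself to a simple sequence scan. This is a rough sketch only: the function name and the `min_repeats` threshold of 4 are assumptions chosen for illustration, and real motif detection also considers the other heptad positions and helix propensity.

```python
def looks_like_leucine_zipper(seq, min_repeats=4):
    """Return True if seq has at least min_repeats leucines spaced exactly 7 apart.

    seq is a one-letter amino acid string; 'L' denotes leucine.
    """
    seq = seq.upper()
    for start in range(len(seq)):
        run = 0
        i = start
        # Walk forward in steps of 7 while each position holds a leucine.
        while i < len(seq) and seq[i] == "L":
            run += 1
            i += 7
        if run >= min_repeats:
            return True
    return False
```

For instance, a toy sequence built by repeating `"LAAAAAA"` four times satisfies the check, while the same unit repeated only three times does not.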

(4)蛋白质的四级结构

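Quaternary structure is the assembly formed when two or more polypeptide chains, each with its own independent tertiary structure, associate through noncovalent interactions (hydrophobic interactions, hydrogen bonds, ionic bonds, van der Waals forces, and so on). These independent chains are called subunits, and the subunits may be identical or different.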

四级结构能够增加蛋白质功能的复杂性和调节能力,实现更复杂的生物学功能。例如,血红蛋白是由4个亚基 (2个α亚基和2个β亚基)组成的四聚体,这种组合使得血红蛋白结构更加稳定。此外,血红蛋白的四级结构还具有协同效应,如一个亚基结合氧气后引起整个血红蛋白分子构象变化,使其他亚基更容易结合氧气 (正协同效应),释放氧气时同理,这种作用使得血红蛋白能够更有效地运输氧气。

03

蛋白的氨基酸组成

首先,所有蛋白质是由一套α-氨基酸通过一系列酰胺键连接在一起组成的。理论上,可用的α-氨基酸的数量是无限的,但自然界主要有20种α-氨基酸(图3.3)。极端环境生物体中发现第21种氨基酸吡咯赖氨酸,但其仅存在于0.0001%的已知蛋白质中。所有的氨基酸除了甘氨酸外都含有手性中心,而自然界只利用其中的一个对映异构体(图3.3)。α-氨基酸通过酰胺键连接在一起,从而形成几十个到上千个由α-氨基酸组成的线性多肽。目前已知的最大的多肽Titin包含34350个氨基酸,其编码基因长达281434个碱基对。Titin蛋白在肌肉收缩中发挥分子弹簧作用,可承受4pN的机械拉力。

图3.3 组成蛋白质的20种基本α-氨基酸。

20种氨基酸的发现过程

1)甘氨酸Glycine:1820年,法国化学家H. Braconnot 研究明胶水解时,分离出了甘氨酸,当时被认为是一种糖,后来发现这个“明胶糖”中含有氮原子,是最简单的氨基酸,称之为glycine(源于希腊语,’glykys’,意思是“甜的”)。事实上,甘氨酸的甜度是蔗糖甜度的80%。甘氨酸是人类发现的第一个氨基酸,也是最简单的、非极性的、不具有旋光性的氨基酸。

2)丙氨酸Alanine:1850年,由A. Strecker用乙醛acetaldehyde、氨和氢氰酸首次合成。

3) Valine: In 1856, von Gorup-Besanez isolated valine from pancreatic extract. In 1906, Fischer determined its chemical structure as 2-amino-3-methylbutanoic acid and named it valine, after valerian.

4)亮氨酸Leucine:1819年,亮氨酸(又称白氨酸)是 Proust首先从奶酪中分离出来的,之后 1820年Braconnot从肌肉与羊毛的酸水解物中得到其结晶,并定名为亮氨酸。英文名称为Leucine,源于希腊语leuco,意思是‘白的’。称其为白氨酸是因为它本身为白色粉末,称其为亮氨酸是因为它本身易于结晶,而且折光度很高,非常闪亮。

5)异亮氨酸Isoleucine:亮氨酸衍生物。

6)脯氨酸Proline:1900年,R. Willstätter研究N-甲基脯氨酸时获得。1901年,由E. Fischer从γ-邻苯二甲酰亚胺丙二酸酯的降解产物和酪蛋白中分离。

7)苯丙氨酸Phenylalanine:1879年,Schulze和Barbieri在黄色羽扇豆幼苗中发现了一种分子式为C9H11NO2的化合物。1882年,由Erlenmeyer和Lipp通过苯乙醛、氨和氰化氢合成。

8)酪氨酸Tyrosine:由德国化学家J. Liebig首先在乳酪中的酪蛋白发现。

9)色氨酸Tryptophan:1901年,由英国人F. Hopkins 和S. Cole 用胰蛋白酶trypsin消化酪蛋白时分离得到的。

10)丝氨酸Serine:1865 年,由E. Cramer将蚕丝中的丝胶蛋白sericin置于硫酸中水解得到。当初把它误认为是甘氨酸,直到1902后才确定其结构。

11)苏氨酸Threonine: 1935年,由W. Rose发现于纤维蛋白水解物之中。此后W. Rose与C. Meyer合作研究其空间结构。

12)天冬酰胺Asparagine:1806年,由法国化学家L. Vauquelin和其助手P. Robiquet从芦笋asparagus的汁液中分离。1809年,这位助手从甘草根中鉴定出一种性质十分相似的物质,并在1828年确认它就是天冬酰胺。

13)天冬氨酸Aspartic acid: 1827年,A.Plisson水解蜀葵根的分离物天冬酰胺,得到天冬氨酸。

14)谷氨酸Glutamic acid:1861年,由德国化学家K. Ritthausen用硫酸从小麦的面筋当中提取。1908年,日本的池田菊苗在蒸干海带煮出的汁后,得到了一种棕色结晶,品尝时“重现了多种食物中难以言喻却真实存在的味道”。这就是谷氨酸钠。然后,他为大规模生产谷氨酸钠的方法申请了专利,并作为人工调料第一次投放市场。

15)谷氨酰胺Glutamine:谷氨酸的衍生物。

16)组氨酸Histidine:1896年,由德国医生A. Kossel和S. Hedin相互独立发现。

17)精氨酸Arginine:1886年,由德国化学家E. Schultze和他的博士生E. Steiger从羽扇豆苗中分离。1894年,瑞典化学家S. Hedin水解角蛋白也得到了精氨酸,之后与Schultze实验室的样品比对,确定是同种物质。

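18) Lysine: In 1889, F. Drechsel isolated it from casein in milk. What he actually obtained was a mixture of lysine and arginine, which he named lytatine; after treatment with phosphotungstic acid, the platinum salt of lysine was isolated. In 1891, his student M. Siegfried published the correct composition of this amino acid. In 1902, E. Fischer and F. Weigert confirmed the structure by synthesis.

19) Methionine: In 1921, the American scientist J. Mueller, while studying microbial culture, found that casein contained a then-unknown essential amino acid and went on to isolate methionine from casein.

20) Cysteine: In 1810, the English scientist W. Wollaston discovered cystine in bladder stones; he originally called it cystic oxide. Cysteine takes its Chinese name ("half-cystine") from the fact that it is half of a cystine molecule.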

04

蛋白的相互作用力

X射线晶体学显示,溶菌酶的活性中心由β折叠构成,通过6个关键氨基酸催化细菌细胞壁水解。这些结构层次的形成依赖于多种分子作用力:

·二硫键:在抗体铰链区形成刚性结构,IgG1含12对二硫键,使Fc段耐受56℃高温;

·盐桥:核糖核酸酶A中Lys41-Asp83盐桥贡献8.3kcal/mol稳定能;

·疏水作用:细胞色素c的疏水核心占体积68%,是维持折叠的主要驱动力;

·π-π堆积:T4溶菌酶中Trp138与Tyr161形成三明治式堆积,键能达5.2kcal/mol;

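·Hydrogen bonds: in an α-helix (3.6 residues per turn), the backbone C=O of each residue hydrogen-bonds to the N-H of the residue four positions later; these bonds reportedly account for about 75% of the stability of the secondary structure.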

分子动力学模拟显示,单个二硫键断裂可使蛋白展开速率加快10^4倍。这些作用力的协同效应使蛋白质在动态中保持功能活性,如GPCR在信号传导中发生0.5nm的构象位移即可激活G蛋白。

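Table 3. Interactions that stabilize protein structure

| Interaction type | Strength (kcal/mol) | Typical location | Example and application |
| --- | --- | --- | --- |
| Covalent bond | 60-110 | Disulfide bonds (Cys-Cys) | Stabilization of the antibody hinge region (inter-chain disulfides of IgG1) |
| Salt bridge (ionic bond) | 3-7 | Acidic/basic side chains (e.g., Asp-Lys) | Charge stabilization in enzyme active centers (e.g., trypsin) |
| Hydrophobic interaction | 1-3 | Clusters of nonpolar side chains (Leu, Phe, etc.) | Anchoring of transmembrane regions in the lipid bilayer (e.g., GPCRs) |
| π-stacking | 2-5 | Aromatic rings (Phe, Tyr) | DNA base stacking (targeted by topoisomerase inhibitors) |
| Cation-π interaction | 3-6 | Aromatic ring with Arg/Lys (e.g., Tyr-Arg) | Kinase ATP-binding pocket (design of imatinib) |
| Hydrogen bond | 1-7 | Polar groups (e.g., Ser-OH with Asn-NH2) | Substrate-specific recognition (e.g., HIV protease inhibitors) |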

参考文献

1. 《药物研发基本原理》第2版,〔美〕本杰明·E. 布拉斯,科学出版社

2. 公众号:叮当学术篇、NIRO科研喵

3. 其它信息源于网络搜索

二、新药研发(04):经典靶点--酶

01

酶的本质与历史发现

酶是生物催化剂,主要由蛋白质构成(如消化酶),少数为具有催化功能的RNA(核酶,如RNase P)。酶具有高度结构多样性,例如最小的酶仅含62个氨基酸,而最大的脂肪酸合酶超过2500个氨基酸。尽管体积差异大,酶的活性位点仅占整体的小部分,通常由特定氨基酸残基形成的空腔构成,通过氢键、疏水作用、π堆积等相互作用精确识别底物,确保催化特异性。

·19世纪中期:巴斯德提出发酵依赖活细胞,认为“酵素”与生命相关。

·1877年:威廉·库内提出"酶"概念时,正值德国生理学派主导的发酵研究热潮,当时科学界普遍认为发酵是细胞整体活动的结果。

·1897年:爱德华·布希纳通过无细胞酵母提取液实现蔗糖发酵,证明酶可独立于细胞存在,开启酶学研究,获1907年诺贝尔奖。

·1926年:詹姆斯·萨姆纳首次结晶脲酶,证实酶为蛋白质,获1946年诺贝尔奖。他成功结晶脲酶的过程堪称科学传奇——历时9年,尝试200余种溶剂系统,最终在低温丙酮沉淀法中观察到针状晶体,其蛋白质属性通过双缩脲反应和元素分析得以确证。

02

酶的命名和分类

1. 酶的命名

·习惯命名法:基于底物(如淀粉酶)或催化反应类型(如脱氢酶)来命名,有时在这些命名基础上加上酶的来源或其它特点。

·国际系统命名法(EC编号):由国际生化联合会制定,格式为EC X.X.X.X,如EC 1.1.1.1(乙醇脱氢酶)。系统名称包括底物名称、构型、反应性质,最后加一个酶字。

例如:

习惯名称:谷丙转氨酶

国际系统名称:丙氨酸:α-酮戊二酸氨基转移酶

酶催化的反应:谷氨酸 + 丙酮酸⎯→ α-酮戊二酸 + 丙氨酸

2. 酶的分类(EC系统)

·氧化还原酶类(EC1):催化氧化-还原反应,主要包括脱氢酶(Dehydrogenase)和氧化酶(Oxidase)。

·转移酶类(EC2):催化基团转移反应,即将一个底物分子的基团或原子转移到另一个底物的分子上,如转氨酶。

·水解酶类(EC3):催化底物的加水分解反应,主要包括淀粉酶、蛋白酶、核酸酶及脂酶等。

·裂合酶类(EC4):催化从底物分子中移去一个基团或原子形成双键的反应及其逆反应,主要包括醛缩酶、水化酶及脱氨酶等。

·异构酶类(EC5):催化各种同分异构体的相互转化,即底物分子内基团或原子的重排过程,如磷酸葡萄糖异构酶。

·连接酶类(EC6):又称为合成酶,能够催化 C-C、C-O、C-N 以及 C-S 键的形成反应。这类反应必须与 ATP 分解反应相互偶联,如DNA连接酶。

·核酶:是唯一的非蛋白酶,它是一类特殊的 RNA,能够催化 RNA 分子中的磷酸酯键的水解及其逆反应。
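The EC X.X.X.X format and the six classes listed above can be captured in a small lookup. The sketch below is illustrative: `ec_class` is a hypothetical helper, and it covers only the six classes named in this section (the current IUBMB scheme also has a seventh class, translocases, which is deliberately left out here).

```python
# Top-level EC classes as listed in the text (EC 1 to EC 6).
EC_CLASSES = {
    1: "oxidoreductases",
    2: "transferases",
    3: "hydrolases",
    4: "lyases",
    5: "isomerases",
    6: "ligases",
}


def ec_class(ec_number):
    """Map an EC number such as 'EC 1.1.1.1' to its top-level class name."""
    text = ec_number.strip()
    if text.upper().startswith("EC"):
        text = text[2:].strip()
    parts = text.split(".")
    if len(parts) != 4:
        raise ValueError("expected four dot-separated fields, e.g. 'EC 1.1.1.1'")
    first = int(parts[0])  # only the first field decides the class
    if first not in EC_CLASSES:
        raise ValueError("EC class %d is not covered by this sketch" % first)
    return EC_CLASSES[first]
```

Alcohol dehydrogenase (`"EC 1.1.1.1"`, the example given in the text) maps to the oxidoreductases, and a transaminase such as `"2.6.1.2"` maps to the transferases.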

根据酶的组成情况,可以将酶分为两大类:

·单纯蛋白酶:它们的组成为单一蛋白质。

·结合蛋白酶:某些酶,如氧化-还原酶等,其分子中除了蛋白质外,还含有非蛋白组分。结合蛋白酶的蛋白质部分称为酶蛋白,非蛋白质部分包括辅酶及金属离子(或辅因子 cofactor)。酶蛋白与辅助成分组成的完整分子称为全酶,单纯的酶蛋白无催化功能。

辅酶在酶促反应中的作用特点:

·辅酶在催化反应过程中,直接参加反应。

·每一种辅酶都具有特殊的功能,可以特定地催化某一类型的反应。

·同一种辅酶可以和多种不同的酶蛋白结合形成不同的全酶。

·一般来说,全酶中的辅酶决定了酶所催化的类型(反应专一性),而酶蛋白则决定了所催化的底物类型(底物专一性)。

酶分子中的金属离子:

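·By how tightly the metal ion binds the enzyme protein, these enzymes fall into two groups: metalloenzymes and metal-activated enzymes.

o Metalloenzymes: the enzyme protein binds the metal ion tightly, for example Fe2+/Fe3+, Cu+/Cu2+, Zn2+, Mn2+, Co2+. The metal ion acts as a cofactor and transfers electrons, atoms, or functional groups during the enzymatic reaction.

| Metal ion | Ligand | Enzyme or protein |
| --- | --- | --- |
| Mn2+ | Imidazole | Pyruvate dehydrogenase |
| Fe2+/Fe3+ | Porphyrin ring, imidazole, sulfur-containing ligands | Heme, oxidoreductases, catalase |
| Cu+/Cu2+ | Imidazole, amide | Cytochrome oxidase |
| Co2+ | Porphyrin ring | Mutases |
| Zn2+ | -NH3, imidazole, (-RS) | Carbonic anhydrase, alcohol dehydrogenase |
| Pb2+ | -SH | δ-Aminolevulinate (δ-amino-γ-ketovalerate) dehydratase |
| Ni2+ | -SH | Urease |

o Metal-activated enzymes: the enzyme protein binds the metal ion loosely and is activated when it binds such ions in solution, for example Na+, K+, Mg2+, Ca2+. Metal ions show some selectivity: a given metal activates only one or a few enzymes.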

·作用:稳定结构、参与电子传递、协助底物结合。

03

酶分子的结构

酶分子的结构特点:

酶的活性中心(active center):与底物相结合并将底物转化为产物的区域。对于结合酶来说,辅酶或辅基往往是活性中心的组成成分。

1. 结合部位(Binding site)

·酶分子中与底物结合的部位或区域一般称为结合部位。

2. 催化部位(Catalytic site)

·酶分子中促使底物发生化学变化的部位称为催化部位。

·通常将酶的结合部位和催化部位总称为酶的活性部位或活性中心。

·结合部位决定酶的专一性,催化部位决定酶所催化反应的性质。

3. 调控部位(Regulatory site)

·酶分子中存在着一些可以与其他分子发生某种程度的结合的部位,从而引起酶分子空间构象的变化,对酶起激活或抑制作用。

4. 酶活性中心的必需基团(Essential group)

·酶的分子中存在着许多功能基团,例如,-NH2、-COOH、-SH、-OH等,但并不是这些基团都与酶活性有关。

·一般将与酶活性有关的基团称为酶的必需基团。

必需基团可分为四种:

1) 接触残基( contact residue)

·直接与底物接触的基团,它们参与底物的化学转变,是活性中心的主要必需基团。

·结合基团(binding group):与底物结合

·催化基团(catalytic group):催化底物发生化学变化

·还有些必需基团虽然不参加酶的活性中心的组成,但为维持酶活性中心应有的空间构象所必需,这些基团是酶的活性中心以外的必需基团。

2) 辅助残基(auxiliary residue)

·这种残基既不直接与底物结合,也不催化底物的化学反应,但对接触残基的功能有促进作用。

·它可促进结合基团对底物的结合,促进催化基团对底物的催化反应。它也是活性中心不可缺少的组成部分。

3) 结构残基(structure residue)

·这是活性中心以外的必需基团,它们与酶的活性不发生直接关系,但它们可稳定酶的分子构象。

·特别是稳定酶活性中心的构象,因而对酶的活性也是不可缺少的基团,只是起间接作用而已。

4) 非贡献残基(noncontribution residue)

·酶分子中除上述基团外的其它基团,它们对酶的活性“没有贡献”,也称为非必需基团。

·它们可能在系统发育的物种专一性方面、免疫方面或者在体内的运输转移、分泌、防止蛋白酶降解的方面起一定作用。这些基团的存在也可能是该酶迄今未发现的新的活力类型的活力中心。

拓展知识#1

某些小分子有机化合物与酶蛋白结合在一起并协同实施催化作用,这类分子被称为辅酶(或辅基)。辅酶是一类具有特殊化学结构和功能的化合物。参与的酶促反应主要为氧化-还原反应或基团转移反应。

大多数辅酶的前体主要是水溶性 B 族维生素,许多维生素的生理功能与辅酶的作用密切相关。

辅酶、辅基的区别:能否用物理方法去除。

(1) 维生素 PP

·烟酸和烟酰胺,在体内转变为辅酶 I 和辅酶 II。

·能维持神经组织的健康。缺乏时表现出神经营养障碍,出现皮炎。

·NAD+ (烟酰胺-腺嘌呤二核苷酸,又称为辅酶 I) 和 NADP+(烟酰胺-腺嘌呤磷酸二核苷酸,又称为辅酶II )是维生素烟酰胺的衍生物。

·功能:是多种重要脱氢酶的辅酶。

(2)核黄素(VB2)

·核黄素(维生素 B2)由核糖醇和 6,7-二甲基异咯嗪两部分组成。

·缺乏时组织呼吸减弱,代谢强度降低。主要症状为口腔发炎,舌炎、角膜炎、皮炎等。

·FAD(黄素-腺嘌呤二核苷酸)和 FMN(黄素单核苷酸)是核黄素(维生素 B2)的衍生物

·功能:在脱氢酶催化的氧化-还原反应中,起着电子和质子的传递体作用。

(3) 泛酸和辅酶 A (CoA)

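·Pantothenic acid (vitamin B5; called vitamin B3 in some older nomenclature) is formed by condensation of α,γ-dihydroxy-β,β-dimethylbutyric acid with one molecule of β-alanine.

·Coenzyme A is the coenzyme of acylating enzymes in metabolic reactions; its precursor is pantothenic acid.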

·功能:是传递酰基,是形成代谢中间产物的重要辅酶。

(4) 叶酸和四氢叶酸(FH4 或 THFA)

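·Tetrahydrofolate is a coenzyme of synthases; its precursor is folic acid (pteroylglutamic acid; vitamin B9, listed as B11 in some sources).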

·四氢叶酸的主要作用是作为一碳基团,如-CH3, -CH2-, -CHO 等的载体,参与多种生物合成过程。

(5) 硫胺素

·硫胺素(维生素 B1)在体内以焦磷酸硫胺素(TPP)形式存在。缺乏时表现出多发性神经炎、皮肤麻木、心力衰竭、四肢无力、下肢水肿。

·焦磷酸硫胺素是脱羧酶的辅酶,它的前体是硫胺素(维生素 B1)。

·功能:是催化酮酸的脱羧反应。

(6)吡哆素

·磷酸吡哆素主要包括磷酸吡哆醛和磷酸吡哆胺。

·磷酸吡多素是转氨酶的辅酶,转氨酶通过磷酸吡多醛和磷酸吡多胺的相互转换,起转移氨基的作用。

(7) 生物素

·生物素是羧化酶的辅酶,它本身就是一种 B 族维生素 B7。

·功能:是作为 CO2的递体,在生物合成中起传递和固定 CO2的作用。

(8) 维生素 B12 辅酶

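·Vitamin B12 is also called cobalamin. When the CN group attached to the cobalt ion in vitamin B12 is replaced by 5′-deoxyadenosine, the vitamin B12 coenzyme is formed.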

·主要功能:是作为变位酶的辅酶,催化底物分子内基团(主要为甲基)的变位反应。

(9)硫辛酸

·硫辛酸是少数不属于维生素的辅酶。硫辛酸是 6, 8-二硫辛酸,有两种形式,即硫辛酸(氧化型)和二氢硫辛酸(还原型)。

(10) 辅酶 Q (Co Q)

·Coenzyme Q, also called ubiquinone, is widely found in animal mitochondria and in bacteria.

·辅酶 Q 的活性部分是它的醌环结构。

·主要功能:是作为线粒体呼吸链氧化-还原酶的辅酶,在酶与底物分子之间传递电子。

(11) 维生素 C

·在体内参与氧化还原反应,羟化反应;人体不能合成。

图1. 辅酶是许多酶促反应的必需成分。

NADP 和FAD被用于酶还原反应;ATP 是细胞系统中最常见的能量转移剂;辅酶A 是酰基转移剂;辅酶Q 是产生细胞能量的电子传递链的一部分;血红素B 是人体中最丰富的血红素,是血红蛋白中氧的载体。

04

酶的催化机制

酶作用机制的认知经历了三次范式转移:

·锁钥模型(1894):费舍尔基于糖苷酶研究提出,该理论成功解释麦芽糖酶的底物特异性——其对α-1,4糖苷键的识别精度可达0.2Å空间误差。该理论认为整个酶分子的天然构象是具有刚性结构的,酶表面具有特定的形状,酶与底物的结合如同一把钥匙对一把锁一样。

·中间产物学说(1902):布朗通过动力学实验测得蔗糖酶Km值为0.05M,首次量化酶-底物亲和力。酶在催化化学反应时,酶与底物首先形成不稳定的中间物,然后分解酶与产物。即酶将原来活化能很高的反应分成两个活化能较低的反应来进行,因而加快了反应速度。

·诱导契合模型(1958):科什兰德团队用停流光谱捕捉到溶菌酶在0.1毫秒内发生的构象调整,其活性位点体积缩小23%以适应底物。该学说认为酶表面并没有一种与底物互补的固定形状,而只是由于底物的诱导才形成了互补形状。

图2. (a)埃米尔·费舍尔于1894 年提出的理论奠定了当今对酶促反应理解的基石,其认为酶和底物(S)一定要有互补的形状,从而来催化反应生成产物(P)。后来,布朗和亨利提出了新的概念,认为会短暂地生成酶-底物复合物反应中间体。(b)科什兰德在1958年提出了“诱导契合”理论,认为底物和酶的结合会引起酶的整体构象发生变化,变成有利于反应催化所需的结构。

05

酶抑制剂的作用模式

根据酶抑制剂的一般作用模式,主要分为三类:竞争性抑制剂、不可逆抑制剂和变构抑制剂(图3)。

图3. (a)在常规酶促反应过程中,底物(绿色)与活性位点的相互作用。(b)竞争性抑制剂(橙色)可逆阻断酶的活性位点。(c)不可逆抑制剂(橙色)与活性位点发生共价结合。(d)变构抑制剂(黄色)与变构结合位点结合,改变活性位点,阻止底物与活性位点的结合。

·竞争性抑制剂

o定义:作为酶竞争性抑制剂的化合物能够占据酶的活性位点(或其中一部分),从而阻止天然底物进入活性位点。在这种情况下,抑制是可逆的,因为酶和抑制剂之间没有形成共价键。抑制剂与蛋白活性位点相互作用的作用力与蛋白质在自然状态下的作用力是一致的(如氢键、疏水相互作用等)。

o作用:结构与底物相似,竞争活性中心(如丙二酸抑制琥珀酸脱氢酶)。

o动力学:Km增加,Vmax不变(高底物可逆转)。

·不可逆抑制剂

o定义:与竞争抑制剂和变构抑制剂不同,不可逆抑制剂共价连接到目标酶的活性位点,阻断天然底物的进入,使酶失活。涉及药物代谢酶的不可逆抑制也会改变药物从体内清除的速率而导致显著的不良反应。一般而言,制药公司更倾向于开发竞争性和变构抑制剂而非不可逆抑制剂。

o作用:共价修饰酶(如青霉素G抑制青霉素结合蛋白A)。

o动力学:Vmax下降,酶活性不可恢复。

图4. (a)青霉素(青霉素G,红色)共价结合到结核分枝杆菌青霉素结合蛋白A 的活性位点(显示关键侧链)。(b)青霉素与结核分枝杆菌青霉素结合蛋白A 的活性位点发生共价结合(未显示侧链)。

·变构抑制剂

o定义:正如它们名字所暗示的,其结合在酶的活性区域以外的区域而发挥作用。尽管酶的活性位点未被占据,但由于变构抑制剂的存在,自然底物也无法进入。当变构抑制剂与变构结合位点(与酶上活性位点不同的结合位点)结合时,会诱导酶的整体构型发生变化,使结合位点不再能够与自然配体发生相互作用。

o作用:结合别构位点,改变酶构象(如CTP抑制天冬氨酸转氨甲酰酶)。

o动力学:Vmax下降,可能影响协同效应,属可逆非竞争性抑制。
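The kinetic signatures listed above (competitive: Km rises while Vmax is unchanged; reversible noncompetitive: Vmax falls) follow from the Michaelis-Menten rate law. The sketch below uses the standard textbook forms with an inhibitor concentration `i` and inhibition constant `ki`; the function names and the example numbers are illustrative assumptions, not values from this article.

```python
def mm_rate(s, vmax, km):
    """Michaelis-Menten rate v = Vmax * [S] / (Km + [S])."""
    return vmax * s / (km + s)


def competitive_rate(s, vmax, km, i, ki):
    """Competitive inhibition: apparent Km = Km * (1 + [I]/Ki); Vmax unchanged."""
    return mm_rate(s, vmax, km * (1.0 + i / ki))


def noncompetitive_rate(s, vmax, km, i, ki):
    """Pure noncompetitive inhibition: apparent Vmax = Vmax / (1 + [I]/Ki); Km unchanged."""
    return mm_rate(s, vmax / (1.0 + i / ki), km)
```

With `vmax=100`, `km=1`, `i=1`, `ki=1`, a very high substrate concentration brings the competitively inhibited rate back toward 100 (the inhibition is overcome), whereas the noncompetitively inhibited rate plateaus near 50. Irreversible inhibitors are not modeled here, since they remove active enzyme rather than reach an equilibrium.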

拓展知识#2

酶的活性中心的一级结构

·应用化学修饰法对多种酶的活性中心进行研究发现,在酶的活性中心处存在频率最高的氨基酸残基是:丝氨酸、组氨酸、天冬氨酸、酪氨酸、赖氨酸和半胱氨酸。如果用同位素标记酶的活性中心后,将酶水解,分离带标记水解片段,对其进行一级结构测定,就可了解酶的活性中心的一级结构。

·一些丝氨酸蛋白酶在活性丝氨酸附近的氨基酸几乎完全一样,而且这个活性丝氨酸最邻近的 5-6 氨基酸顺序,从微生物到哺乳动物都一样,说明蛋白质活性中心在种系进化上有严格的保守性。

酶活性中心证明方法

1) 切除法

·对小分子且结构已知的酶多用此法。

·用专一性的酶切除一段肽链后剩余的肽链仍有活性,说明切除的肽链与活性无关。反之,切除的肽链与活性有关。

2) 化学修饰法

·用化学试剂与酶蛋白中的氨基酸残基的侧链基团发生反应引起共价结合、氧化或还原等修饰,称之为化学修饰。酶分子中可以修饰的基团有:-SH、-OH、咪唑基、氨基、羧基、胍基等,修饰剂已有七十多种,但专一性的修饰剂不多。

·修饰试剂既可与酶的活性部位的某特异基团结合,又可与酶的非活性部位的同一基团结合,称之为非特异性共价修饰。

·此法适用于所修饰的基团只存在与活性部位,在非活性部位不存在或极少存在。判断标准是一:酶活力的丧失程度与修饰剂的浓度成正比;二:底物或竞争性抑制剂保护下可防止修饰剂的抑制作用。

·DIFP(二异丙基氟磷酸)可专一性地结合丝氨酸蛋白酶活性部位的丝氨酸-OH 而使酶失活。DIFP 一般不与蛋白质反应,也不与含丝氨酸的蛋白酶原或变性的酶反应,只与活性的酶且活性部位含丝氨酸的酶结合。

3) 亲和标记法

·含反应基团的底物类似物,作为活性部位的标记试剂,它能象底物一样进入酶的活性部位,并以其活泼的化学基团与酶的活性基团的某些特定基团共价结合,使酶失去活性。

·如胰凝乳蛋白酶最适底物为:N-对甲苯磺酰-L-苯丙氨酰乙酯或甲酯,根据此结构设计的亲和标记试剂为:N-对甲苯磺酰-苯丙氨酰氯甲基酮(TPCK)。

4) X射线衍射法

·把一纯酶的 X射线晶体衍射图谱与酶与底物反应后的 X射线图谱相比较,即可确定酶的活性中心。

酶原激活

·有些酶在细胞内合成时,或初分泌时,没有催化活性,这种无活性状态的酶的前体称为酶原(zymogen)。

·酶原向活性的酶转化的过程称为酶原激活,酶原激活实际上是酶的活性中心形成或暴露的过程。

·胃蛋白酶、胰蛋白酶、胰糜蛋白酶、羧肽酶、弹性蛋白酶等,例如:胰蛋白酶原进入小肠后,受肠激酶或胰蛋白酶本身的激活,第6位赖氨酸与第7位异亮氨酸残基之间的肽键被切断,水解掉一个六肽,酶分子空间构象发生改变,产生酶的活性中心,于是胰蛋白酶原变成了有活性的胰蛋白酶。

·除消化道的蛋白酶外,血液中有关凝血和纤维蛋白溶解的酶类,也都以酶原的形式存在。

参考文献

1. 《药物研发基本原理》第2版,〔美〕本杰明·E. 布拉斯,科学出版社

2. 公众号:手球实验室

3. 其它信息源于网络搜索

III. New Drug R&D (05): Classic Targets: G Protein-Coupled Receptors (GPCRs)

01

引言

G蛋白偶联受体(G protein-coupled receptors, GPCRs)作为细胞通讯系统中至关重要的组成部分,在人体生理功能调控中扮演着不可或缺的角色。GPCRs是一类跨膜蛋白,负责接收细胞外信号并将其转化为细胞内信号,进而调控多种生理过程。作为药物研发领域最丰富的靶点之一,约三分之一的FDA批准药物通过靶向GPCRs发挥治疗作用。本文将深入探讨GPCRs的结构特点、信号转导机制、药物调控原理及其在药物研发中的应用,旨在全面展示这一重要靶点在现代医药科学中的核心地位和广阔前景。

02

GPCRs的结构与分类

2.1 GPCR结构特点

GPCRs是人类膜结合蛋白中最大的家族,其结构特征具有高度一致性。所有GPCRs都有七个跨膜区段(TM-1至TM-7),主要由α螺旋构成,这些跨膜区域通过三个细胞内环(IL-1、IL-2、IL-3)和三个细胞外环(EL-1、EL-2、EL-3)相互连接。这种"七跨膜"结构是GPCRs最显著的特征,也为其赢得了"7TM受体"的别称。

GPCRs的氨基末端位于细胞外侧,而羧基末端位于细胞内侧。这种定向对于GPCRs的功能至关重要,因为它决定了信号转导的方向:从细胞外到细胞内。TM5/6区域的细胞内环显示出最高的变异性,这一区域主要负责GPCRs区分不同配体的能力,使每种GPCR能够特异性识别其对应的配体。

图1. 经典的G 蛋白偶联受体(GPCR)有七个跨膜区(TM-1 ~ TM-7,灰色),三个细胞内环(IL-1 ~ IL-3,黄色)和三个细胞外环(EL-1 ~ EL-3,红色)。

2.2 GPCR分类

基于结构和序列相似性,GPCRs被分为五个主要家族:

·视紫红质家族(A类):这是最大的家族,包含超过700个成员,包括血清素受体、多巴胺受体、血管紧张素Ⅱ受体和前列腺素受体等。这些受体不仅在中枢神经系统中发挥作用,还在心血管调节和疼痛感知中扮演重要角色。

·分泌素家族(B类):约包含15个成员,包括甲状旁腺激素受体和胰高血糖素受体。这一家族的受体对激素信号传导至关重要。

·谷氨酸受体(C类):包含约15个成员,主要参与神经传递中的突触细胞兴奋性调节。

·黏附家族GPCRs(E类):包含至少24种成员,其细胞外结构域显著大于其他GPCRs。这一家族的名称源于其在细胞黏附中的重要作用,例如免疫细胞与靶细胞的相互作用。

·卷曲/味觉GPCRs(F类):包含约24个成员,参与Wnt信号通路介导的细胞分化、增殖,以及味觉的感知。

03

GPCRs的历史发展

3.1发现历程

GPCRs的概念最初是在20世纪初由兰利(Langley)和黑尔(Hale)提出的,他们提出了"接受物质"(receptive substance)的概念,认为细胞外表面存在能够对周围环境产生反应的物质。然而,当时人们对GPCRs的详细作用机制并不清楚,关于"接受物质"本质的争论持续了近70年。

直到1970年,罗伯特·莱夫科维茨(Robert Lefkowitz)及其团队开创性地使用放射性同位素标记的促肾上腺皮质激素(ACTH),首次检测并观察到激动剂与肾上腺细胞膜上受体的结合现象,才真正揭开了GPCRs研究的序幕。

3.2结构解析里程碑

GPCRs的结构解析是该领域研究的重要里程碑。2000年,第一个哺乳动物GPCR晶体——牛视紫红质的结构被报道,这标志着GPCR结构研究的开始。随后,在2007年,人类β2-肾上腺素能受体的X射线衍射晶体结构也被成功解析。

这些结构解析工作为理解GPCRs的信号转导机制提供了直接的分子基础,也为药物设计和开发提供了重要参考。特别是,这些结构揭示了GPCRs如何通过构象变化将胞外信号转化为胞内信号,以及配体如何影响这些构象变化。

04

GPCRs的信号转导机制

GPCRs通过第二信使系统将胞外信号传递到胞内,主要的第二信使系统包括cAMP系统和磷脂酰肌醇信使系统。

4.1 cAMP信号系统

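In the cAMP signaling system, the GPCR interacts with a G protein complex. Without ligand, the Gα subunit is bound to GDP and tightly associated with the Gβ and Gγ subunits. When a ligand binds, the GPCR changes conformation; the G protein complex dissociates from the GPCR, Gα releases GDP, and GTP binds Gα in its place. The GTP-Gα complex binds adenylyl cyclase and activates it to produce cAMP. cAMP then binds the regulatory subunit ("R") of inactive protein kinase A (the RC complex), releasing the active catalytic subunit (C), which phosphorylates its molecular targets.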

该系统受Gα蛋白和cAMP磷酸二酯酶的GTP酶活性共同调节,Gα蛋白的GTP酶活性将GTP缓慢转化为GDP,导致Gα从腺苷酸环化酶中释放出来,使cAMP的生成停止。此外,cAMP磷酸二酯酶将cAMP转化为AMP,使调节蛋白恢复到抑制蛋白激酶A的活性,进而终止GPCR信号。

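Figure 2. cAMP signaling begins when a ligand binds the GPCR. The conformational change in the GPCR causes the G protein complex to dissociate from it; Gα releases GDP and binds GTP. The GTP-Gα complex binds adenylyl cyclase and activates it to produce cAMP. cAMP binds the regulatory subunit ("R") of inactive protein kinase A (RC), releasing active protein kinase A (C), which phosphorylates its molecular targets. The system is regulated jointly by the GTPase activity of Gα and by cAMP phosphodiesterase.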

4.2 磷脂酰肌醇(IP3)信号系统

在磷脂酰肌醇信号系统中,Gα-GTP复合物与磷脂酶C相互作用,激活该酶,然后将膜结合的磷脂酰-4,5-肌醇二磷酸(PIP2)裂解成两个第二信使分子:肌醇-1,4,5-三磷酸(inositol-1,4,5-trisphosphate,IP3)和二酰甘油(diacylglycerol,DAG)。IP3被释放到细胞质中并引发储存于内质网中钙离子的释放,DAG与膜结合的蛋白激酶C(PKC)相互作用,PKC包含调节结构域和催化结构域,DAG通过与PKC的调节结构域结合引起PKC的构象发生变化,激活PKC并增加其对钙的亲和力。由于PKC的酶活性需要钙的参与,因此IP3诱导的钙释放对PKC的激活具有协同作用。

信号终止在IP3信号通路中与在cAMP通路中一样重要,Gα蛋白的GTP酶活性可以将GTP缓慢转化为GDP并导致Gα从磷脂酶C中释放出来,导致磷脂酶C的失活,这会中断IP3和DAG的生成,从而终止信号。此外,通过钙ATP酶泵从细胞质基质中移除钙离子也将抑制信号转导,同时通过一系列磷酸酶将IP3转化为肌醇也可以从信号级联中消除其影响。

图3. IP3 信号转导通过配体与GPCR 的结合而启动。GPCR 的构象改变导致G 蛋白复合物与GPCR 解离,释放Gα 蛋白和GDP,然后GTP与Gα 蛋白结合。GTP-Gα 蛋白复合物再与磷脂酶C 结合,磷脂酶C 水解PIP2,释放出DAG 和IP3。进入细胞质的IP3 引起细胞内钙库中钙离子的释放,同时膜结合的DAG 激活蛋白激酶C,激活的蛋白激酶C 通过ATP 对靶分子进行磷酸化。钙的存在可以增强蛋白激酶C 的活性。该系统通过IP3和DAG 的酶促降解、Gα 蛋白的GTP 酶活性和细胞质内钙的移除来调控。

05

GPCRs的活性调节

5.1 激动剂、拮抗剂和反向激动剂

与GPCRs相互作用的药物主要分为三类:激动剂、拮抗剂和反向激动剂。

(1) 激动剂

激动剂模拟GPCR的天然配体并诱导产生相同的细胞响应,根据其效力,激动剂可以分为完全激动剂和部分激动剂。完全激动剂能产生与内源性配体相当的信号转导,而部分激动剂激活GPCR信号转导的程度则要低一些。在某些情况下,部分激动剂可以有效地与天然配体竞争,降低GPCR对信号诱导的响应。

例如,β2肾上腺素受体的部分激动剂布新洛尔(Bucindolol)在治疗心血管疾病中显示出独特的优势,因为它同时具有激动剂和拮抗剂的特性,提供了一种独特的治疗指数。

(2) 拮抗剂

拮抗剂与GPCR结合但不引起细胞响应,在不存在激动剂或内源性配体的情况下,拮抗剂对细胞活性没有影响。然而,拮抗剂的存在可以阻止GPCR介导的细胞响应,因为拮抗剂的结合阻止了天然配体或激动剂与GPCR的结合,从而阻止了起始信号转导所必需的构象变化。

例如,普萘洛尔是一种非选择性的β肾上腺素能受体拮抗剂,用于治疗高血压、心绞痛和某些类型的心律失常。它通过阻断肾上腺素的作用,使心脏跳动更慢,力度更小,从而降低血压。

(3) 反向激动剂

反向激动剂也可以阻断内源性配体或激动剂的活性,从而将它们自身呈现为拮抗剂。然而,在某些情况下,反向激动剂也能够产生与内源性配体介导的相反的药理响应。这种逆转作用仅在GPCR具有自发的活性时才有可能存在,即无内源性配体时GPCR依然具有固有的或基础水平的信号转导活性。

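For example, ICI-118,551 is an inverse agonist of the β2-adrenergic receptor: it suppresses basal activity, blocking the baseline signaling that is normally present even without an endogenous ligand.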

图4. 完全激动剂(绿色)诱导GPCR产生与内源性配体相当的信号转导,部分激动剂(蓝色)激活GPCR信号转导的程度则要低一些。中性拮抗剂(黑色)不诱导GPCR发挥活性,但可以阻断激动剂的活性。反向激动剂(黄色)可以抑制自发激活类GPCR的基础活性。

5.2 偏向性配体

近年来,“偏向性配体”(biased ligand)的概念被提出,即单个GPCR存在多种活性构象,可以根据配体的化学结构产生不同的下游信号转导。这种复杂性进一步增加了针对GPCR进行新药开发的复杂性。

例如,阿片受体的偏向性配体可以激活某些信号通路但抑制其他信号通路,从而产生镇痛作用的同时减少成瘾性和呼吸抑制等副作用。

图5. G蛋白偶联受体(GPCR)激活的经典和新模式。

(A)经典模式,(B)偏态激活,(C)细胞内激活,(D)二聚化激活,(E)反式激活,(F)双相激活。

06

GPCRs在药物发现中的应用

6.1 FDA批准的GPCR靶向药物

截至目前,FDA批准的GPCR靶向药物共计476款,其中小分子药物占92%,多肽类药物占5%,蛋白类药物占2%,而抗体药仅有2款。这表明小分子药物仍然是GPCR药物开发的主要形式,但随着生物技术的发展,针对GPCRs的生物制剂也在逐渐增加。

值得注意的是,许多GPCR靶向药物的成功开发都依赖于结构生物学的进步。过去5年中,许多新的GPCR药物进入市场,但这些药物都是在靶向GPCR的结构被公布后才发现的,这强调了结构信息在GPCR药物开发中的重要性。

6.2 GPCR药物开发的挑战

GPCR药物开发面临诸多挑战,首先,GPCR信号通路的复杂性和多样性使得药物开发变得非常复杂。GPCR不是孤立存在的,而是与多种信号分子和调节蛋白相互作用,形成复杂的网络。此外,GPCR信号通路往往不是简单的线性,而是存在多种交叉调节。

其次,GPCR结构生物学的限制也是药物开发的一个挑战。尽管近年来GPCR结构解析取得了显著进展,但仍有大量GPCR的结构尚未被解析,这限制了基于结构的药物设计。

此外,GPCR药物的特异性和选择性也是一个挑战。由于不同GPCR之间存在结构相似性,开发高选择性的药物往往需要大量的结构和功能研究。

6.3 GPCR药物开发的策略

针对GPCR的药物开发策略主要包括以下几个方面:

(1) 配体导向的药物设计

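Ligand-based drug design builds on the interaction between a GPCR and its ligands and manipulates ligand function through small structural changes. A reported analysis indicates that in 71% of such minor modifications, a structural change at the γ position relative to the nitrogen, or closer, switched the ligand from agonist to antagonist or vice versa; one example is methylation at the 3′ position of the piperidine ring of a CCR3 antagonist. This strategy is widely used in medicinal chemistry to develop drugs with the desired pharmacological profile.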

(2) 结构导向的药物设计

结构导向的药物设计基于GPCR的三维结构信息,通过理解配体与受体的分子相互作用来设计新的药物分子。例如,β2肾上腺素受体的结构解析为开发选择性β2肾上腺素受体激动剂和拮抗剂提供了重要参考。

(3) 基于GPCR信号通路的药物开发

基于GPCR信号通路的药物开发关注GPCR信号通路的特定环节,通过调节信号通路中的关键分子来影响整体信号转导。例如,通过抑制腺苷酸环化酶或cAMP磷酸二酯酶来调节cAMP信号通路,或者通过抑制磷脂酶C来调节IP3信号通路。

07

GPCR药物的案例研究

7.1 β2肾上腺素受体药物

β2肾上腺素受体(β2AR)是GPCRs中最著名的成员之一,靶向该受体的药物广泛用于治疗哮喘和其他呼吸系统疾病。

(1) 沙丁胺醇

沙丁胺醇是一种有效的选择性人β2肾上腺素受体激动剂,它能有效刺激cAMP积累。在CHO细胞中,对人β2、β1和β3肾上腺素受体的pEC50分别为9.6、6.1和6.7。沙丁胺醇通过激活β2肾上腺素受体,松弛支气管平滑肌,从而缓解哮喘症状。作为选择性β2肾上腺素受体激动剂,沙丁胺醇相比非选择性的β肾上腺素受体激动剂(如肾上腺素和异丙肾上腺素)具有更少的心脏副作用。
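The pEC50 values quoted above are negative base-10 logarithms of the EC50 in mol/L, so both the EC50 itself and the fold selectivity between receptor subtypes follow directly. This is a minimal sketch of that arithmetic; the helper names are illustrative.

```python
def pec50_to_ec50_molar(pec50):
    """Convert pEC50 (-log10 of EC50 in mol/L) back to EC50 in mol/L."""
    return 10.0 ** (-pec50)


def selectivity_fold(pec50_target, pec50_off_target):
    """Fold selectivity for the target, i.e. EC50(off-target) / EC50(target)."""
    return 10.0 ** (pec50_target - pec50_off_target)


# pEC50 values for salbutamol quoted in the text (human receptors in CHO cells).
PEC50 = {"beta2": 9.6, "beta1": 6.1, "beta3": 6.7}

ec50_beta2_nm = pec50_to_ec50_molar(PEC50["beta2"]) * 1e9  # about 0.25 nM
fold_vs_beta1 = selectivity_fold(PEC50["beta2"], PEC50["beta1"])  # about 3,200-fold
fold_vs_beta3 = selectivity_fold(PEC50["beta2"], PEC50["beta3"])  # about 790-fold
```

A difference of 3.5 log units between β2 and β1 therefore corresponds to roughly a 3,000-fold lower EC50 at β2, which is the quantitative basis for the reduced cardiac side effects mentioned above.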

(2) 异丙肾上腺素

异丙肾上腺素是一种非选择性的β肾上腺素受体激动剂,作用于β1和β2肾上腺素受体。虽然它也能缓解哮喘症状,但由于其对β1肾上腺素受体的激活,可能导致心悸、心动过速等心脏副作用。这强调了药物选择性的重要性。

(3) 阿洛尔

阿洛尔是一种非选择性的β肾上腺素受体部分激动剂,主要用于治疗高血压。它通过部分激活β1和β2肾上腺素受体,减慢心率,降低血压,部分激动剂的特性使其在某些情况下具有独特的治疗优势。

7.2 GPCR抗体药物

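In recent years, antibody drugs directed at GPCRs have also made notable progress. In May 2018, erenumab from Novartis/Amgen was approved by the FDA as the first antibody for migraine prevention that targets the calcitonin gene-related peptide (CGRP) receptor, which is a GPCR. Ajovy (fremanezumab), from the Israeli company Teva, followed with FDA approval for migraine prevention in September 2018; fremanezumab binds the CGRP ligand rather than the receptor.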

这些抗体药物的成功开发表明,GPCR不仅是小分子药物的良好靶点,也是抗体药物的潜在靶点。与小分子药物相比,抗体药物具有更高的特异性和更长的半衰期,这为GPCR药物开发提供了新的方向。

7.3 GPCR药物开发的最新进展

近年来,GPCR药物开发取得了多项重要进展,例如,研究人员首次发表了β2受体激动剂研究进展的相关英文综述,详细介绍了2-氨基-2-苯基乙醇类化合物的兴奋β2受体的作用机制,以及原创候选药物川丁特罗的开发过程。川丁特罗是一种国家1.1类新药,目前已完成Ⅲ期临床研究。此外,盐酸马布特罗的研制也取得了重要进展,为哮喘患者提供了新的治疗选择。

08

GPCR药物研究的未来方向

8.1 结构生物学在GPCR药物开发中的应用

随着冷冻电镜等技术的发展,GPCR结构解析的速度显著加快,为基于结构的药物设计提供了更多机会。未来,结构生物学将继续在GPCR药物开发中发挥重要作用,帮助研究人员理解GPCR与配体的相互作用机制,指导药物分子的设计和优化。

8.2 GPCR偏向性配体的开发

偏向性配体(biased ligands)是指能够选择性激活GPCR特定信号通路的化合物,通过开发偏向性配体,可以实现对GPCR信号通路的精确调控,提高药物的疗效和安全性。例如,开发只激活镇痛信号通路而抑制成瘾性信号通路的阿片受体偏向性配体,将为疼痛治疗提供新的策略。

8.3 GPCR信号通路网络的研究

GPCR信号通路不是孤立的,而是与多种信号通路相互作用,形成复杂的网络。深入了解这些网络的调控机制,将有助于开发更有效、更安全的GPCR靶向药物。例如,研究GPCR信号通路与免疫系统的相互作用,可能为开发新型抗炎药物提供新的思路。

8.4 多靶点GPCR药物的开发

多靶点药物可以通过同时作用于多个GPCR或与其他信号分子相互作用,实现对复杂疾病的综合调控。例如,开发同时靶向β2肾上腺素受体和某些免疫受体的药物,可能为哮喘和慢性阻塞性肺病(COPD)的治疗提供新的策略。

09

结论

G蛋白偶联受体(GPCRs)作为细胞通讯系统中的关键分子,在人体生理功能调控中发挥着重要作用。从结构上看,GPCRs具有高度一致的"七跨膜"结构,但其配体结合位点和信号转导机制具有高度多样性,使其能够识别多种配体并调控多种生理过程。

GPCRs的信号转导主要通过cAMP和磷脂酰肌醇两条第二信使系统实现,这些系统可以进一步激活多种下游信号通路,调控细胞的增殖、分化、凋亡等重要功能。GPCRs的活性可以通过激动剂、拮抗剂和反向激动剂等多种方式进行调节,为药物开发提供了多种策略。

目前,已有476款FDA批准的GPCR靶向药物,广泛应用于哮喘、高血压、疼痛等多种疾病的治疗。然而,GPCR药物开发仍面临诸多挑战,包括GPCR信号通路的复杂性、GPCR结构生物学的限制以及药物选择性的提高等。

未来,随着结构生物学、计算化学和生物信息学的发展,GPCR药物开发将进入一个新的阶段。基于结构的药物设计、偏向性配体的开发、GPCR信号通路网络的研究以及多靶点药物的开发将成为GPCR药物研究的主要方向,为更多疾病提供有效的治疗手段。

参考文献

1. 《药物研发基本原理》第2版,〔美〕本杰明·E. 布拉斯,科学出版社

2. β2肾上腺素受体结构研究进展. https://image.hanspub.org/html/1-3070016_14976.htm.

四、新药研发(06):经典靶点--离子通道

01

引言

在药物研发领域,离子通道作为靶点具有重要战略意义。作为跨膜蛋白,离子通道在调节离子跨膜流动过程中发挥关键作用,影响神经冲动传导、肌肉收缩、心血管功能和细胞体积调节等多个重要生理过程。据统计,目前约有10%的药物靶点是离子通道,涉及治疗癫痫、心律失常、疼痛和囊性纤维化等多种疾病。本文将全面探讨离子通道的结构、功能、门控机制及其在药物发现中的应用,特别关注其作为药物靶点的潜力和挑战。

02

离子通道的结构与功能

2.1 基本定义与分类

离子通道(ion channel)是一类跨膜大分子孔道,允许离子在电化学梯度驱动下穿过细胞膜,完成信号传导、细胞兴奋性调节等生理功能。它们在药物研发中仅次于受体成为第二大类药物靶点,目前已发现超过300种不同的离子通道。

虽然它们的个体结构各不相同,但都具有以下共同特征:

·跨膜结构域:大多数离子通道是由一系列跨膜结构域和细胞外环、细胞内环连接的完整膜蛋白组成。

·多亚基结构:许多离子通道是多亚基蛋白复合体,只有当多个兼容的蛋白质亚基聚集在一起才能形成活性通道。

·选择性孔隙:通道的孔隙通常一次只能通过一个离子,并具有特定的离子类型选择性。

离子通道根据其激活机制主要分为以下几类:

·配体门控通道

·电压门控通道

·机械敏感性通道

·pH门控通道

·温度门控通道

2.2历史发展

对离子通道的研究可以追溯到19世纪40年代,当时卡洛·马泰乌奇(Carlo Matteucci)和埃米尔·杜·博-瑞蒙德(Emil du Bois-Reymond)探索了"生物电"的概念。马泰乌奇使用电流计测量青蛙腿产生的生物电,而杜·博-瑞蒙德研究了电流对神经和肌肉组织的影响。他们的工作为电流在生物过程中的作用提供了支持,但没有解释活组织如何支持电流传导。赫尔曼·范·亥姆霍兹(Hermann von Helmholtz)证明了电信号在活组织中的传播速度远远低于在金属导线中的传播速度,这表明简单的电导不是一个可行的解释,可能涉及潜在的化学过程。

在1902年,朱利叶斯·伯恩斯坦(Julius Bernstein)引入了"膜理论",解释了活体器官内的生物电现象。他假设神经和肌肉被半透膜包围,跨膜电位差是由离子在细胞屏障上的选择性运输所产生的细胞内外离子浓度的差异。伯恩斯坦将这种效应称为"双电层"的形成,但它通常被称为"膜电位"或"膜电压"。
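The idea that a concentration difference of one ion across a selectively permeable membrane sets a potential is usually quantified with the Nernst equation, E = (RT / zF) · ln([ion]out / [ion]in). The sketch below is an illustration under stated assumptions: the potassium concentrations (5 mM outside, 140 mM inside) are typical textbook values, not figures from this article.

```python
import math

R = 8.314462618   # gas constant, J/(mol*K)
F = 96485.33212   # Faraday constant, C/mol


def nernst_potential_mv(conc_out_mm, conc_in_mm, valence=1, temp_c=37.0):
    """Equilibrium (Nernst) potential in millivolts for a single ion species."""
    if conc_out_mm <= 0 or conc_in_mm <= 0:
        raise ValueError("concentrations must be positive")
    if valence == 0:
        raise ValueError("valence must be non-zero")
    temp_k = temp_c + 273.15
    volts = (R * temp_k) / (valence * F) * math.log(conc_out_mm / conc_in_mm)
    return volts * 1000.0


# Assumed, illustrative K+ concentrations: 5 mM outside, 140 mM inside.
e_k = nernst_potential_mv(5.0, 140.0)
```

Under these assumptions the potassium equilibrium potential comes out near -89 mV at 37 °C, close to the resting potential of many excitable cells.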

尽管伯恩斯坦的基本理论是正确的,但在20世纪的大部分时间里,对离子通道本质的全面理解仍然是一个谜。阿姆斯特朗(Armstrong)及其同事在20世纪70年代的研究表明,神经细胞膜上的离子通道是由蛋白质构成的,这些蛋白质控制着离子的流动。阿姆斯特朗和同事们曾总结道:“科学家们普遍认为神经细胞膜的离子通道和控制离子通过的通道是由蛋白组成的,但在这个问题上的证据却出奇的少。”

03

离子通道的门控机制

3.1 配体门控通道

配体门控通道是指当配体与通道上的特异性结合位点相互作用时被激活的离子通道。在没有配体的情况下,通道通常处于关闭状态,而当配体结合后,蛋白质构象发生变化,打开通道允许离子流动。配体移除或移动会导致通道关闭,终止离子流动。

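The nicotinic acetylcholine receptor (nAChR) is a classic ligand-gated channel. It is a pentamer of about 290 kDa: five subunits arranged symmetrically around a central pore, each with four transmembrane domains. Without a ligand such as acetylcholine, the pore is closed and nothing passes. When acetylcholine binds its site on the extracellular surface, the protein changes conformation and the channel opens, allowing ions to flow across the membrane and generate an electrical signal. Removing the agonist returns the channel to the closed state and ends the signal.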

配体门控通道的活性可以通过多种方式调节:

·激动剂:模拟天然配体的化合物可以激活通道。例如,尼古丁是nAChR的激动剂,它在该配体门控通道中的活性对烟草制品激活大脑的愉悦感受系统有部分作用。

·部分激动剂:如伐尼克兰(varenicline),与尼古丁相比,其与nAChR结合后降低了离子通道的活性水平。因为伐尼克兰与尼古丁竞争具有相同的nAChR结合位点,因此已被成功地用于降低对尼古丁的依赖。

·拮抗剂:阻断通道的化合物可以分为竞争拮抗剂和变构拮抗剂。竞争拮抗剂结合配体结合位点但不引起与配体结合相关的构象变化,阻止通道开放。变构拮抗剂则结合通道的变构位点,稳定通道的关闭状态或引起构象变化阻止天然配体结合。例如,α-神经毒素是一种肽类蛇毒,它是神经肌接头中突触后膜上nAChR的拮抗剂,导致麻痹症状。

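·Allosteric modulation: barbiturates and benzodiazepines enhance the activity of the GABA-A receptor (GABAAR). When these compounds bind their respective allosteric sites on GABAAR, the receptor shifts to a conformation with higher affinity for GABA, which increases the frequency of chloride channel opening, increases chloride transfer across the membrane, and hyperpolarizes the neuron.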

图1. 配体门控通道在没有配体(红色)的情况下处于关闭状态。配体与通道的结合导致构象变化,从而引起通道打开,允许合适的离子通过通道迁移。配体移除后导致通道关闭,阻止离子流动。配体门控通道可以被合成配体激活或被拮抗剂(与配体结合位点结合的化合物,但不会导致通道开放)阻断。直接阻断通道也是可行的。

3.2 电压门控通道

电压门控通道是另一类主要的离子通道。与配体门控通道不同,电压门控通道没有天然配体,它们随着膜电位的变化而打开和关闭。电压感知域使得这些通道对膜电位的变化非常敏感,非常适合于神经冲动传导、肌肉收缩和心脏功能。

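In the resting state, a voltage-gated channel is closed. When the membrane potential reaches the appropriate level, a conformational change opens the channel and ions flow across the membrane. The resulting rapid shift in membrane potential (a depolarization, in the case of sodium channels) triggers a further series of conformational changes that inactivate the channel. The channel cannot reopen until the resting potential has been restored and the protein has returned to its closed, resting conformation.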
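The voltage dependence of channel opening is often summarized with a two-state Boltzmann function. The sketch below covers only the closed-to-open step and ignores inactivation; the half-activation voltage and slope factor are hypothetical values chosen for illustration.

```python
import math


def open_probability(v_mv, v_half_mv=-30.0, slope_mv=6.0):
    """Steady-state open probability from a two-state Boltzmann function.

    v_half_mv is the voltage at which half the channels are open;
    slope_mv sets how steeply opening depends on voltage.
    """
    return 1.0 / (1.0 + math.exp((v_half_mv - v_mv) / slope_mv))


for v in (-80, -50, -30, -10, 20):
    print(f"{v:4d} mV -> P_open = {open_probability(v):.3f}")
```

Near the resting potential almost no channels are open, and the open probability rises steeply over a narrow voltage window, which is what makes these channels act as threshold devices.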

电压门控通道的活性可以通过多种方式调节:

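·Direct block: blocking the open channel is the most direct approach. For example, flecainide blocks the voltage-gated sodium channel Nav1.5, which is essential for normal cardiac function, and is used to treat arrhythmias and prevent tachycardia.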

·稳定闭合构象:用化合物稳定通道的闭合形式,能有效地提高活化阈值,降低通道活性。例如,玛格毒素(margatoxin)可阻断存在于包括神经元细胞在内的多种细胞类型中的电压门控钾通道Kv1.3,改变神经元细胞的膜电位,导致动作电位传导和神经传导所需时间发生变化。

·稳定失活状态:稳定电压门控通道的超极化状态,维持通道失活,减缓达到闭合静止状态所需的构象变化。例如,T淋巴细胞中也存在Kv1.3通道,Kv1.3通道阻滞可通过抑制T细胞增殖诱导免疫抑制。

·稳定开放构象:稳定电压门控通道开放构象的化合物将增加离子通道活性。例如,瑞替加滨(retigabine)是一种用于治疗癫痫和惊厥的药物,能够稳定电压门控钾通道Kv7.2和Kv7.3的开放形式,从而导致钾离子流量增加,抑制癫痫发作。

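Figure 2. Resting (closed), open, and inactivated states of a voltage-gated channel. The channel opens once the membrane potential reaches the gating threshold, then inactivates, and cannot reopen until the resting potential is restored.

Figure 3. A plot of membrane potential over time (the action potential) gives another view of voltage-gated channel activity. A stimulus must exceed the gating threshold to open the channels. Ion flow through the open channels causes rapid depolarization, after which the channels inactivate and close. The inactivated channels stay closed while opposing ion movements restore the membrane potential, which may briefly undershoot the resting level (hyperpolarization). Until this "refractory period" ends and the resting potential is restored, the channels do not respond to stimuli.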

3.3 其他门控机制

除了配体门控和电压门控外,还有其他门控机制在正常和疾病状态中发挥重要作用:

·机械敏感性通道:由膜的机械形变(如增加的张力或曲率变化)激活,在触觉中发挥作用。

·温度门控通道:开启和关闭基于不同的热阈值,构成了冷热感觉的基础。

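·pH-gated channels: the KcsA potassium channel from Streptomyces lividans, whose crystal structure MacKinnon reported in 1998, is pH-gated; its activity depends on pH.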

04

离子通道与疾病

4.1 离子通道病概述

不正常的离子通道功能与许多重要的疾病(离子通道病)相关,如囊性纤维化、癫痫和QT间期延长综合征等。这些疾病通常是由编码离子通道蛋白的基因突变引起的,导致离子通道功能异常,进而引起各种生理功能障碍。

4.2囊性纤维化

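Cystic fibrosis is an inherited disease of the exocrine glands that mainly affects the digestive and respiratory systems. It leads to chronic lung disease, exocrine pancreatic insufficiency, hepatobiliary disease, and abnormally high electrolyte levels in sweat. The disease is caused by mutations in the CFTR gene, which encodes the cystic fibrosis transmembrane conductance regulator, a transmembrane protein that is also an important ion channel.

CFTR is not a typical ion channel. It is an anion channel in its own right, and it also helps regulate other chloride channels, either by associating with them or by moving their regulatory factors into or out of the cell. CFTR maintains the correct balance of salt and fluid across epithelia and other membranes. Conformational changes within CFTR are coupled to ATP hydrolysis, and this energy-releasing reaction regulates channel function.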

正常组织中CFTR被6个高度整齐排列的信号分子调控,了解这些调控机制对治疗囊性纤维化非常重要。近年来,针对CFTR的治疗策略已经取得显著进展,为囊性纤维化患者提供了更有效的治疗选择。

4.3癫痫

癫痫是一种遗传性或获得性的脑部疾病,特征是反复发作的癫痫发作。研究表明,多种离子通道基因与癫痫发生有关,主要包括钠离子通道、钾离子通道和钙离子通道等。编码这些通道的基因突变可影响离子通道的功能,导致神经元兴奋性异常,进而引发癫痫发作。

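Ion channel mutations can cause hereditary epilepsy, and hereditary epilepsies are often refractory to treatment. Studies have found that patients with several familial forms of hereditary epilepsy carry mutant potassium channels of the Slo family.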

癫痫也被认为是一种离子通道疾病,可能是由于编码离子通道蛋白基因发生突变,导致离子通道功能发生改变,从而造成神经组织兴奋性或抑制性异常改变,进而导致癫痫的发作。电压门控钠离子通道在动作电位产生和传导中至关重要,近年来研究发现,这些通道与癫痫发病机制有着密切关系,许多癫痫综合征的发生已被证实与钠通道功能异常有关。

4.4 QT间期延长综合征

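Long QT syndrome (LQTS) can result from any congenital or acquired disorder of cardiac ion channel function or regulation (a channelopathy) that prolongs the action potential of ventricular myocytes, which appears on the electrocardiogram as a prolonged heart-rate-corrected QT interval (QTc).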

先天性LQTS是编码心肌离子通道蛋白的基因突变导致心肌细胞复极异常而引起的一组临床综合征,是一种具有潜在致死性的心电生理紊乱的离子通道病。心电图表现为QT间期延长,当QT间期延长时,尖端扭转型室性心动过速的风险增加。

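QT prolongation arises when cardiac ion channel dysfunction impairs ventricular repolarization, and it predisposes to polymorphic ventricular tachycardia. Long QT syndrome is a cardiac electrophysiological disorder characterized by a prolonged QT interval on the electrocardiogram, abnormal T waves, and susceptibility to torsades de pointes (TdP). TdP often terminates spontaneously, in which case it typically causes syncope, but it can also degenerate into ventricular fibrillation.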
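Because the QT interval shortens as heart rate rises, it is normally reported as a rate-corrected value (QTc). Two widely used corrections are Bazett's (square root of the RR interval) and Fridericia's (cube root). The sketch below shows the arithmetic only; the function names and the example measurement are assumptions, and it is not a diagnostic tool.

```python
import math


def qtc_bazett_ms(qt_ms, heart_rate_bpm):
    """Bazett correction: QTc = QT / sqrt(RR), with RR in seconds."""
    rr_s = 60.0 / heart_rate_bpm
    return qt_ms / math.sqrt(rr_s)


def qtc_fridericia_ms(qt_ms, heart_rate_bpm):
    """Fridericia correction: QTc = QT / RR^(1/3), with RR in seconds."""
    rr_s = 60.0 / heart_rate_bpm
    return qt_ms / rr_s ** (1.0 / 3.0)


# Hypothetical measurement: QT of 400 ms at 100 beats per minute.
print(f"Bazett:     {qtc_bazett_ms(400.0, 100.0):.0f} ms")
print(f"Fridericia: {qtc_fridericia_ms(400.0, 100.0):.0f} ms")
```

At 60 beats per minute the RR interval is one second, so both formulas return the measured QT unchanged; at faster rates Bazett's formula corrects more aggressively than Fridericia's.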

05

离子通道研究技术的发展

5.1 膜片钳技术

20世纪最后30年出现的生物技术和计算机技术为最终解开离子通道之谜提供了必要的工具。由于重组技术、转染方法和电子技术的进步,厄温·内尔(Erwin Neher)和伯特·萨克曼(Bert Sakmann)开发出了能直接研究离子通道的"膜片钳"方法。在这些技术出现之前,离子通道实验仅限于分析天然存在细胞的电流,生物技术为创造表达单一离子通道的细胞系提供了必要的工具。

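In Neher and Sakmann's patch-clamp technique, a micropipette filled with a salt solution is placed against the surface of a single cell, and the current produced by ions flowing through the channels is measured in much the same way as current in a wire. The technique lets researchers record the current through a single ion channel and so study its gating properties, ligand binding, and voltage sensitivity.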

电信号放大技术使得研究单个离子通道在细胞中的作用成为可能,并可以直接测量离子通道活性。内尔和萨克曼于1991年获得诺贝尔生理学或医学奖,彰显他们工作的重要意义。该方法的现代版本仍然是研究离子通道的黄金标准,是目前直接研究单个蛋白为数不多的几种技术之一。

图4. 基本的膜片钳系统由一个微量吸管组成,其开口的大小为1μm,压在细胞表面上。微吸管的内部覆盖了有限数量的离子通道,并通过在细胞表面抽吸而产生高电阻的密封(“gigaohm 密封”)。然后可以使用电极、微量吸管的盐溶液,以及适当的电流放大和监测系统,在恒定电压下测定电流,或者在恒定电流下检测化合物的存在对膜电位的改变。
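A rough calculation shows why the gigaohm seal matters. The current through one open channel follows Ohm's law and is only a few picoamperes, so the thermal (Johnson) noise of the seal resistance has to be well below that. The conductance, voltages, bandwidth, and function names below are hypothetical illustration values, and thermal noise is only one of several noise sources in a real recording.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def single_channel_current_pa(conductance_ps, v_mv, e_rev_mv):
    """Ohm's law for one open channel: i = g * (V - E_rev), returned in pA."""
    return conductance_ps * (v_mv - e_rev_mv) / 1000.0  # pS * mV = fA


def seal_thermal_noise_pa(seal_resistance_ohm, bandwidth_hz, temp_k=295.0):
    """RMS Johnson (thermal) current noise of the seal resistance, in pA."""
    return math.sqrt(4.0 * K_B * temp_k * bandwidth_hz / seal_resistance_ohm) * 1e12


# Hypothetical 20 pS channel with a 100 mV driving force.
i_channel = single_channel_current_pa(conductance_ps=20.0, v_mv=20.0, e_rev_mv=-80.0)
print(f"single-channel current: {i_channel:.1f} pA")
for r_seal in (1e7, 1e9):
    noise = seal_thermal_noise_pa(r_seal, bandwidth_hz=1000.0)
    print(f"seal {r_seal:.0e} ohm -> thermal noise {noise:.2f} pA rms")
```

Under these assumptions a 10 MΩ seal produces noise comparable to the single-channel signal, while a 1 GΩ seal reduces it tenfold, which is what makes single-channel events resolvable.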

5.2 离子通道的结构解析

20世纪末由于技术进步,离子通道的结构细节也逐渐被揭示。科学家测定了编码多种离子通道的蛋白序列,并利用分子模建的方法预测了跨膜蛋白通过亲脂屏障运输荷电物质的结构特征。虽然各种结构模型很早就被提出,但直到1998年才获得关于离子通道结构细节的直接晶体学证据。

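The X-ray crystal structure of the KcsA potassium channel, obtained by Roderick MacKinnon from the soil bacterium Streptomyces lividans, provided the first complete view of an ion channel and earned him a share of the 2003 Nobel Prize in Chemistry. By 2013, the RCSB Protein Data Bank contained more than 3,100 ion channel crystal structures.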

KcsA钾通道的结构揭示了选择性过滤器和门控机制,为理解所有离子通道的结构和功能奠定了基础。这一突破性发现不仅增进了对离子通道基本功能的理解,也为基于结构的药物设计提供了重要基础。

5.3现代分析技术

现代技术如FLIPR®钾检测试剂盒等为离子通道研究提供了新的工具,细胞中钾离子通道的功能性评估在药物发现过程中至关重要,尤其是在涉及到心脏安全性时。FLIPR®钾检测试剂盒利用电压和配体门控钾(K+)通道对铊离子(Tl+)的通透性,提供快速、高通量的离子通道活性检测方法。此外,先进的计算方法和分子模拟技术也极大地促进了对离子通道功能和药物相互作用的理解。这些技术结合实验数据,可以预测新的药物靶点和设计具有更高特异性和疗效的化合物。

06

离子通道药物发现

6.1 离子通道作为药物靶点

离子通道药物一直是全球药物研发的热点,离子通道也是继药物受体后的第二大类药物靶点。目前已发现许多调节离子通道活性的重要药物和致命的毒素,这些药物通过作用于不同的离子通道靶点,用于治疗各种疾病。

6.2 代表性离子通道药物

(1) 钾通道阻滞剂

胺碘酮(amiodarone)是一种阻断钾通道的抗心律失常药,用于治疗心律失常和预防心动过速。玛格毒素(margatoxin)是一种从Centruroides margaritatus(中美洲树皮蝎子)的毒液中发现的39个氨基酸组成的肽,Kv1.3通道阻滞剂,可阻断存在于包括神经元细胞在内的多种细胞类型中的电压门控钾通道Kv1.3,改变神经元细胞的膜电位,导致动作电位传导和神经传导所需时间发生变化。T淋巴细胞中也存在这种通道,Kv1.3通道阻滞可通过抑制T细胞增殖诱导免疫抑制。

(2) 钙通道阻滞剂

氨氯地平(amlodipine,Norvasc®)是一种阻断钙通道的抗高血压药,用于治疗高血压和心绞痛。它通过抑制钙离子进入血管平滑肌细胞,松弛血管,降低血压。

(3) 钠通道阻滞剂

普鲁卡因(procaine)是一种阻断钠通道的局部麻醉剂,用于局部麻醉。苯妥英(phenytoin)是一种阻断钠通道的抗癫痫药,用于治疗癫痫。氟卡尼(flecainide)是一种Nav1.5通道阻滞剂和抗心律失常药,用于治疗心律失常和预防心动过速。

(4) 钾通道开放剂

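Retigabine (also known as ezogabine; Trobalt®, Potiga®) is an antiepileptic drug that stabilizes the open form of the voltage-gated potassium channels Kv7.2 and Kv7.3, increasing potassium ion flow and suppressing seizures.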

(5) 其他离子通道药物

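Glipizide is an antidiabetic drug that blocks ATP-sensitive potassium channels in pancreatic β cells, which stimulates insulin secretion. Varenicline (Chantix®) is a partial agonist of the nicotinic acetylcholine receptor (nAChR) used as a smoking-cessation aid.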

图5.(a) 氨氯地平(amlodipine,Norvasc®),一种阻断钙通道的抗高血压药;(b)胺碘酮(amiodarone),一种阻断钾通道的抗心律失常药;(c)普鲁卡因(procaine),一种阻断钠通道的局部麻醉剂;(d)格列吡嗪(glipizide),一种阻断胰岛B细胞中钾通道的抗糖尿病药;(e)苯妥英(phenytoin),一种阻断钠通道的抗癫痫药;(f)河鲀毒素(tetrodotoxin),一种发现于河鲀中的阻断钠通道的毒素,致死性比氰化物强100 倍。

6.3 离子通道药物的机制

离子通道药物的作用机制多种多样,主要通过以下几种方式调节离子通道的活性:

·直接阻断:如胺碘酮阻断钾通道,普鲁卡因阻断钠通道等。

·调节门控状态:如稳定通道的闭合状态或开放状态,改变通道的活性。

·影响通道的表达或稳定性:如调节离子通道蛋白的合成、转运或降解。

·改变细胞内信号通路:如影响与离子通道相互作用的信号分子,间接调节通道活性。

6.4 离子通道药物的挑战

尽管离子通道是重要的药物靶点,但开发选择性、高效和安全的离子通道药物仍然面临许多挑战:

·缺乏选择性:许多离子通道在结构和功能上相似,开发高选择性药物困难。

·脱靶效应:离子通道药物可能影响非靶向通道,导致不良反应。

·安全性问题:特别是对于心脏离子通道,药物可能引起严重的心律失常。

·耐药性:长期使用某些离子通道药物可能导致耐药性。

·个体差异:不同患者对离子通道药物的反应可能存在显著差异。

6.5 基于结构的药物设计

随着离子通道晶体结构的解析,基于结构的药物设计已成为开发新型离子通道药物的重要策略。通过解析KcsA钾通道等离子通道的三维结构,研究人员可以了解离子选择性过滤器和门控机制的分子基础,为设计具有更高选择性和疗效的化合物提供依据。例如,麦金农团队解析的KcsA钾通道结构揭示了钾离子通道的特异性选择机制,为设计新型钾通道阻滞剂提供了结构基础。类似地,电压门控钠通道的结构解析也为开发新型抗癫痫和镇痛药物提供了重要信息。

07

离子通道药物的临床应用

7.1 心血管疾病

离子通道在心血管系统中发挥关键作用,特别是钾通道和钙通道。异常的离子通道功能与多种心血管疾病相关,如心律失常、高血压和心力衰竭。

抗心律失常药:如胺碘酮、氟卡尼等通过阻断钾通道或钠通道,延长或缩短动作电位,恢复心脏节律。这些药物在治疗室性心动过速、房颤和房扑等心律失常中发挥重要作用。

抗高血压药:如氨氯地平通过阻断钙通道,松弛血管平滑肌,降低血压。这类药物是目前最常用的抗高血压药物之一,具有良好的疗效和安全性。

7.2 神经系统疾病

离子通道在神经系统中至关重要,参与神经元的兴奋性和信号传递。异常的离子通道功能与多种神经系统疾病相关,如癫痫、疼痛和神经退行性疾病。

抗癫痫药:如苯妥英、卡马西平和拉莫三嗪等通过阻断钠通道或增强抑制性神经传递,减少神经元的过度兴奋。这些药物在控制癫痫发作中发挥重要作用,但可能伴随多种副作用。

镇痛药:如普鲁卡因等局部麻醉剂通过阻断钠通道,抑制神经冲动的产生和传导,产生局部麻醉效果。此外,一些电压门控钙通道阻滞剂也具有镇痛作用。

治疗神经退行性疾病:研究表明,某些离子通道可能参与阿尔茨海默病、帕金森病等神经退行性疾病的病理过程,针对这些离子通道的药物可能为这些疾病的治疗提供新策略。

7.3免疫系统疾病

离子通道在免疫系统中也发挥重要作用,参与T细胞活化和免疫应答的调节。异常的离子通道功能与多种免疫系统疾病相关,如自身免疫病和炎症。

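Immunosuppressants: cyclosporine and tacrolimus inhibit calcineurin, a phosphatase activated by calcium signaling, and thereby impair T-cell activation. They act downstream of calcium entry rather than on a channel itself, and they play an important role in organ transplantation and the treatment of autoimmune disease.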

抗炎药:一些离子通道如P2X7嘌呤受体参与炎症反应的调节,针对这些通道的药物可能为炎症性疾病的治疗提供新选择。

7.4呼吸系统疾病

囊性纤维化是一种与CFTR离子通道相关的遗传性疾病,影响呼吸系统和消化系统。针对CFTR的治疗策略已经取得显著进展,为囊性纤维化患者提供了更有效的治疗选择。

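CFTR modulators: ivacaftor is a potentiator that improves channel gating, while lumacaftor and tezacaftor are correctors that improve the folding and trafficking of the CFTR protein. Together they improve chloride transport and relieve the symptoms of cystic fibrosis. The development of these drugs is an important milestone in ion channel drug discovery.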

08

未来展望

8.1 新型离子通道靶点的发现

随着基因组学、转录组学和蛋白质组学技术的发展,越来越多的离子通道被发现和表征。这些新型离子通道可能成为治疗多种疾病的新靶点,例如,机械敏感性离子通道在疼痛、触觉和血压调节中发挥作用,针对这些通道的药物可能为疼痛管理和高血压治疗提供新策略。此外,温度门控通道如TRP通道在疼痛和炎症中也扮演重要角色,针对这些通道的药物可能为慢性疼痛和炎症性疾病的治疗提供新选择。

8.2 基于结构的药物设计

随着更多离子通道晶体结构的解析,基于结构的药物设计将为开发新型离子通道药物提供更精确的工具。通过了解离子通道的三维结构和功能机制,研究人员可以设计具有更高选择性和疗效的化合物,减少脱靶效应和不良反应。

8.3 个性化医疗

不同患者对离子通道药物的反应可能存在显著差异,这与遗传背景、代谢状态和疾病特征等多种因素有关。通过基因检测和生物标志物分析,可以实现离子通道药物的个体化治疗,提高治疗效果并减少不良反应。

8.4联合治疗策略

单一靶点的离子通道药物可能面临疗效有限和耐药性等问题,通过联合针对不同离子通道或信号通路的药物,可以增强治疗效果并减少副作用。例如,联合使用不同作用机制的抗癫痫药可以提高癫痫的控制率,减少单一药物的剂量和副作用。

09

结论

离子通道作为药物发现中的经典靶点,具有重要的治疗价值和广阔的发展前景。从历史发展来看,对离子通道的研究经历了从宏观到微观、从整体到分子水平的转变,这一过程促进了我们对离子通道结构和功能的理解,为开发新型离子通道药物提供了科学基础。

目前,已有多种离子通道药物用于治疗心血管疾病、神经系统疾病、免疫系统疾病和呼吸系统疾病等,这些药物通过不同的机制调节离子通道的活性,如直接阻断、调节门控状态、影响表达或稳定性等。然而,离子通道药物的开发仍面临选择性、安全性和耐药性等挑战,需要进一步的研究和创新。

未来,随着新型离子通道靶点的发现、基于结构的药物设计、个性化医疗和联合治疗策略的发展,离子通道药物将为更多疾病的治疗提供新的选择和希望。特别是针对囊性纤维化的CFTR调节剂的成功开发,为基于结构和机制的药物设计提供了成功案例,为其他离子通道相关疾病的治疗提供了重要启示。总之,离子通道作为药物发现中的经典靶点,将继续在医药研发中发挥重要作用,为患者提供更有效、更安全的治疗选择。


(生物屋)



2026-01-24

编者按:2025年以来,siRNA疗法在治疗凝血相关疾病的研究方面取得了重要里程碑进展。RNA干扰(RNAi)机制通过在肝脏细胞中沉默编码凝血因子或天然抗凝物的mRNA,为治疗凝血相关疾病提供了一种全新的治疗模式。基于GalNAc偶联的递送技术,是将治疗性siRNA分子高效递送到肝脏的关键之一。药明康德旗下WuXi TIDES团队围绕siRNA等分子,打造了涵盖定制合成、共价连接、以及一体化CMC的一站式服务平台,更好地满足全球合作伙伴的研发需求,加速创新转化,惠及全球病患。本文将探讨siRNA在治疗凝血相关疾病方面的应用,并展示WuXi TIDES如何助力合作伙伴加速GalNAc偶联siRNA疗法的开发。

siRNA疗法与凝血相关疾病

由于大多数调控凝血过程的蛋白都在肝细胞中合成,这使肝脏成为高度适配的治疗靶点。GalNAc通过与肝细胞表面高度表达的去唾液酸糖蛋白受体(ASGPR)结合,促进siRNA药物被肝细胞选择性摄取,使其在调控凝血系统时展现出高度精准性。

进入肝脏后,这些siRNA可长期沉默目标基因,使蛋白水平在一次皮下注射后显著下降并维持数月之久。对于治疗负担高、用药依从性弱的疾病而言,这一特点尤其重要。siRNA药物不但可以提供高度可预测的疗效,给药间隔可延长至8周甚至更久。对患者而言,这意味着治疗负担更轻,用药更容易融入日常生活。

此外,siRNA药物的药效动力学平稳,能够对凝血平衡进行持续而温和的调节。2025年获得FDA批准的Qfitlia(fitusiran)就是范例之一。

在血友病中重新平衡止血

血友病长期以来被视为一种缺失性疾病:因缺乏凝血因子VIII(FVIII)或IX(FIX),患者易发生自发出血、关节损伤和长期并发症。过去几十年,治疗焦点始终围绕如何替代缺失因子展开。siRNA疗法为解决这个问题提供了新思路。它不试图恢复FVIII或FIX,而是从凝血信号通路的另一方面入手,通过降低内源性抗凝物来提升凝血酶生成,从而调控凝血通路的整体平衡。

这一理念在2025年3月得到监管验证。美国FDA批准了Qfitlia(fitusiran)用于血友病A或B青少年及成人的常规预防。作为一种靶向抗凝血酶的皮下注射siRNA,fitusiran在3期临床试验中使年化出血率下降约90%。患者只需每两个月接受一次治疗。

对于许多患者而言,这种改变意义深远。长期频繁的静脉输注一直是治疗依从性的巨大障碍,尤其对静脉条件差或存在治疗疲劳的患者而言。Fitusiran每年仅需注射约6次,且为皮下给药,为患者和家庭带来了截然不同的治疗节奏。

Fitusiran的获批不仅具有里程碑意义,也揭示了RNAi作为技术平台的可拓展性。研究人员正在探索更多抗凝靶点,如肝素辅因子II和蛋白S,前期研究显示,沉默这些蛋白同样能够在FVIII或FIX缺失背景下恢复凝血酶生成。

siRNA为血栓疾病开辟新方向

正如siRNA可用于增强出血性疾病患者的凝血功能,它同样能够在血栓风险高的患者中抑制凝血活动。在这一领域中,备受关注的靶点之一是凝血因子XI(FXI)。先天性FXI缺乏患者的血栓风险显著降低,却通常仅出现轻度出血。这些人类遗传学研究结果为新一代抗凝策略提供了启示:抑制FXI活性可能比传统抗凝药具有更宽广的安全窗口。

目前,多款靶向FXI的药物已经进入临床开发并获得显著进展。例如,拜耳(Bayer)公司的FXIa抑制剂asundexian在全球性3期临床试验中获得积极顶线结果,在显著降低患者缺血性卒中风险的同时,并未增加主要出血风险。

多家生物技术公司也在探索通过siRNA药物降低FXI蛋白表达的治疗策略。例如,瑞博生物开发的在研GalNAc偶联siRNA药物RBD4059已经完成在健康志愿者中进行的1期临床试验,试验结果显示RBD4059呈现出剂量依赖性、可预测的药代动力学特性、以及显著(>90%)和持久的FXI活性和蛋白水平降低效果;同时,在安全性和耐受性方面达到主要终点,在研究的剂量范围内未发现不良安全信号,显示出良好的安全性。目前这款药物已经进入2期临床试验。

此外,靖因药业与CRISPR Therapeutics共同开发的FXI靶向siRNA药物SRSD107也已完成2期临床试验首例患者给药。Sirnaomics公司的在研siRNA药物STP122G目前正在1期临床试验中接受检验。City Therapeutics公司的新一代siRNA疗法CITY-FXI已经递交临床试验申请,预计在2026年初启动1期临床试验。

这些在研疗法的未来愿景明确:通过每年数次皮下注射实现对静脉血栓或卒中的长期防护,同时不显著增加重大出血风险。如果成功,将代表着抗凝策略的重大革新。

一体化平台助力GalNAc偶联siRNA药物开发

尽管GalNAc偶联siRNA药物在治疗凝血相关疾病方面表现出显著潜力,但药物实现规模化应用,还需要强有力的全方位技术整合能力作为支撑。一家生物技术公司在研发治疗心血管疾病的GalNAc偶联siRNA候选药物时,就曾遇到难题:由于缺乏成熟的GalNAc分子来源,加之产率和粗纯度低下,项目推进受阻。他们找到了WuXi TIDES,寻求解决方案。

首先要解决的便是GalNAc的供应问题。针对合作伙伴提出的特殊需求,团队迅速建立合成路线,采用先进的流动化学及重结晶技术,获得了纯度超过98%的高质量产品,在短短4个月内,成功制备出4.5公斤高纯度定制GalNAc分子,保障了稳定的供应链,大幅缩短了项目周期。

在关键的偶联环节,凭借在多种偶联类型、偶联化学和修饰策略上的经验积累,WuXi TIDES团队选择了具有高度选择性的“点击化学”策略,显著降低副产物产生,简化合成和纯化过程,使最终收率从13%提升至62%,粗品纯度从18%提高到75%,确保了适合临床试验的高纯度和稳定性。

同时,基于一体化CMC服务能力,WuXi TIDES团队平行开展了分析方法、制剂开发等多项工作,同时利用无菌灌装生产线和生产流程设计,在GMP批次生产中达到99%产率,显著降低API损失。全方位协作使两款siRNA候选药物在14个月内顺利完成了IND申报准备,加速推进至临床阶段。

展望未来,随着更多siRNA药物正进入临床开发,类似的产业协同将持续发挥重要作用,进一步推动药物研发的快速前进。

结语

siRNA正在重塑凝血障碍的治疗模式。通过沉默肝脏中关键蛋白的生成,siRNA提供了持久且可精确调控的治疗方式。FDA批准首款siRNA血友病疗法,标志着凝血系统调控可从体内实现这一概念已被验证。目前,全球已有8款siRNA疗法获批上市,治疗的疾病类型也从罕见病扩展到患者人数众多的常见病,有望变革高血压、高血脂和肥胖症等慢性病的治疗模式。据统计,截至2025年7月,全球在研RNAi疗法接近400款,其中约三分之一已进入临床开发阶段。RNAi领域的“明星公司”Alnylam表示,预计在2030年前解决主要组织的递送挑战,最大限度地释放RNAi技术的潜力。面向未来,WuXi TIDES将持续基于其一体化CRDMO平台,赋能包括RNAi在内的寡核苷酸药物开发,助力合作伙伴加快将科学创新转化为新药、好药,造福全球病患。

A Milestone Breakthrough: siRNA Therapy Brings New Hope to Coagulation Disorders

In March 2025, the U.S. FDA approved Qfitlia (fitusiran), an siRNA therapy, as a prophylactic treatment to reduce bleeding risk in patients with hemophilia. The approval represents an important milestone for the use of siRNA in coagulation-related diseases. RNA interference (RNAi) enables targeted silencing of mRNA encoding coagulation factors or natural anticoagulants within hepatocytes, opening a new therapeutic paradigm for disorders of hemostasis and thrombosis.

A key enabler of this modality is GalNAc-conjugated delivery, which facilitates efficient and selective transport of therapeutic siRNA molecules to the liver. To meet rising demand in this field, WuXi TIDES, a CRDMO platform that is part of WuXi AppTec, has established an integrated service platform around siRNA molecules and conjugates, spanning drug discovery, CMC development, and commercial-scale manufacturing. This article reviews the emerging role of siRNA in coagulation disorders and highlights how the WuXi TIDES team supports partners in overcoming the challenges of GalNAc-conjugated drug development.

siRNA Therapies: A Precise Modality for Coagulation Disorders

Because most proteins that regulate coagulation are synthesized in hepatocytes, the liver presents an important site for therapeutic intervention. GalNAc ligands bind to the asialoglycoprotein receptor (ASGPR), which is abundantly expressed on hepatocytes, enabling highly selective uptake of siRNA and precise modulation of the coagulation cascade.

Once internalized, siRNA can silence target genes for extended periods, producing sustained reductions in protein levels for months after a single subcutaneous dose. This long-acting profile is particularly meaningful for diseases associated with high treatment burden and poor adherence. siRNA therapies deliver predictable efficacy while extending dosing intervals to eight weeks or more, allowing treatment to integrate more easily into patients’ daily lives.

Their smooth pharmacodynamic profile also supports gradual, durable adjustments to the coagulation balance. Qfitlia (fitusiran), approved by the FDA in 2025, demonstrates these advantages in clinical practice.

Restoring Hemostatic Balance in Hemophilia

Hemophilia has long been defined by the absence of a single component, coagulation factor VIII (FVIII) or IX (FIX), leading to spontaneous bleeding, joint damage, and long-term complications. For decades, therapy focused on replacing the missing factor.

siRNA therapy introduces a fundamentally different concept. Instead of restoring FVIII or FIX, it targets the pathway from the opposite direction by reducing endogenous anticoagulants to enhance thrombin generation and rebalance coagulation globally.

This approach received regulatory validation in March 2025, when the FDA approved Qfitlia (fitusiran) for routine prophylaxis in adolescents and adults with hemophilia A or B. As an siRNA that silences antithrombin mRNA, fitusiran reduced annualized bleeding rates by approximately 90% in Phase 3 trials, with dosing required only once every two months.

For many patients, this represents a transformative shift. Frequent intravenous infusions, long a major barrier to adherence, can now be replaced by around six subcutaneous injections per year. For individuals with poor venous access or treatment fatigue, this new rhythm of care offers tremendous relief.

Beyond its clinical benefits, fitusiran underscores the scalability of RNAi as a platform. Researchers are actively investigating additional anticoagulant targets, including heparin cofactor II and protein S, and early findings suggest that silencing these proteins may similarly restore thrombin generation in the absence of FVIII or FIX.

siRNA Opens New Frontiers in Thrombotic Disease

Just as siRNA can augment coagulation in bleeding disorders, it can also attenuate coagulation for patients at high risk of thrombosis. Factor XI (FXI) has emerged as a particularly compelling target.

Individuals with congenital FXI deficiency experience substantially lower thrombosis risk yet typically only mild bleeding, an insight from human genetics that suggests FXI inhibition may offer a wider safety margin than conventional anticoagulants.

Several FXI-targeted therapies have already achieved meaningful clinical milestones. Bayer’s FXIa inhibitor asundexian demonstrated positive topline Phase 3 results recently, reducing ischemic stroke risk without elevating major bleeding events.

At the same time, multiple biotech companies are advancing siRNA strategies to lower FXI expression. Several GalNAc-siRNA candidates have demonstrated sustained reductions in FXI activity and protein levels in Phase 1 clinical trials and are in Phase 2 development. Collectively, these programs aim to deliver long-term protection against venous thrombosis or stroke through only a handful of subcutaneous injections per year—potentially redefining the future of anticoagulation.

Fast-Track to Phase 1: Two siRNA IND CMC Packages Completed in 14 Months

Despite their promise, GalNAc-siRNA therapies require robust technical infrastructure to achieve scalable, reliable development. One biotech company developing a GalNAc-siRNA therapy for cardiovascular disease faced multiple challenges—including limited supply of a unique GalNAc molecule, low yield, and poor purity—which stalled their program. To overcome these hurdles, they partnered with WuXi TIDES.

The priority was to ensure a stable supply of the GalNAc molecule. WuXi TIDES quickly designed a customized synthetic route tailored to the client’s specifications. By utilizing flow chemistry and recrystallization techniques, the team achieved a high-quality product with over 98% purity. Within just four months, they successfully delivered 4.5 kilograms of high-purity, custom GalNAc—effectively securing the supply of the starting material for the GalNAc-siRNA conjugate and shortening development timelines.

Next came the critical conjugation step. Drawing on extensive expertise in conjugation chemistries and modification strategies, the WuXi TIDES team adopted a highly selective “Click Chemistry” approach, minimizing byproduct formation and simplifying synthesis and purification. As a result, the overall yield increased from 13% to 62%, and crude purity improved from 18% to 75%, ensuring the production of clinical-grade material with superior purity and stability.

In parallel, WuXi TIDES leveraged its integrated CMC capabilities to advance analytical method development and formulation optimization. Together with an advanced sterile fill-finish line and optimal process design, the team achieved a batch yield of >99% in GMP production, significantly minimizing the overall loss of costly API. These coordinated efforts enabled two siRNA candidates to complete IND-enabling activities within just 14 months, accelerating progress toward the clinic.

The above case is just one example of the capabilities of WuXi TIDES’ integrated CRDMO platform. In addition to oligonucleotides, WuXi TIDES also provides comprehensive development solutions for GalNAc-conjugated peptide therapeutics. As more GalNAc-conjugated drugs advance into clinical development, integrated collaboration will be essential to accelerating innovation and bringing therapies to patients faster.

Looking Ahead

siRNA is redefining how coagulation disorders are treated. By silencing key liver-derived proteins, siRNA delivers precise, durable, and programmable modulation of hemostasis. The approval of the first siRNA therapy for hemophilia confirms that rebalancing the coagulation system from within the body is now a clinically validated strategy.

To date, eight siRNA therapies have been approved globally, with indications expanding beyond rare diseases to highly prevalent chronic conditions. They offer the potential to transform treatment strategies for hypertension, hyperlipidemia, and obesity. As of July 2025, nearly 400 siRNA therapies were in development worldwide, with about one-third already in clinical stages.

Looking ahead, WuXi TIDES will continue to leverage its integrated CRDMO platform to support the development of oligonucleotide therapeutics, including siRNA, and help partners transform scientific breakthroughs into new medicines that benefit patients across the globe.




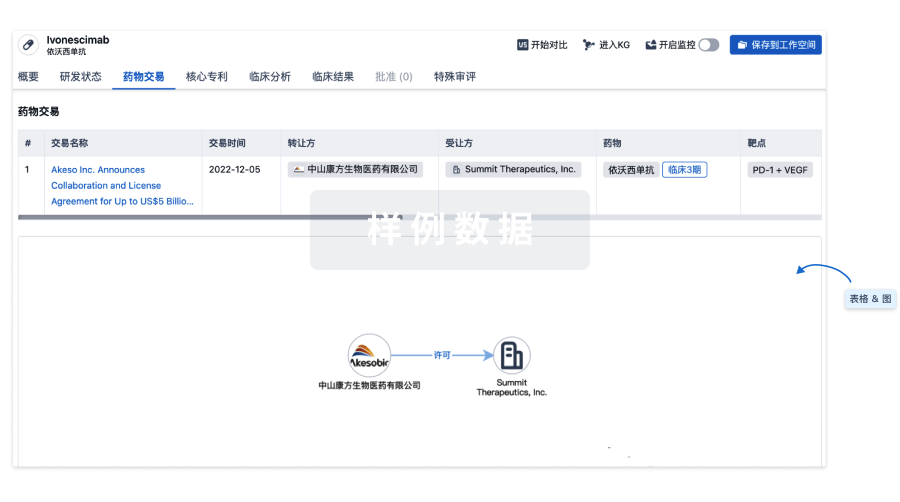

100 项与 Folitixorin Calcium 相关的药物交易

登录后查看更多信息

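R&D status

| Indication | Highest R&D status | Country/Region | Company | Date |
|---|---|---|---|---|
| Colon cancer | Phase 3 | United States | | 2006-05-01 |
| Metastatic colorectal cancer | Phase 3 | United States | | 2006-05-01 |
| Rectal cancer | Phase 3 | United States | | 2006-05-01 |
| Advanced breast cancer | Phase 2 | Argentina | | 2006-02-01 |
| Advanced breast cancer | Phase 2 | Mexico | | 2006-02-01 |
| Advanced breast cancer | Phase 2 | Peru | | 2006-02-01 |
| Advanced breast cancer | Phase 2 | Russia | | 2006-02-01 |
| Advanced breast cancer | Phase 2 | Spain | | 2006-02-01 |
| Pancreatic cancer | Preclinical | United States | | - |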

登录后查看更多信息

临床结果

临床结果

适应症

分期

评价

查看全部结果

| 研究 | 分期 | 人群特征 | 评价人数 | 分组 | 结果 | 评价 | 发布日期 |

|---|

No Data | |||||||

登录后查看更多信息

转化医学

使用我们的转化医学数据加速您的研究。

登录

或

药物交易

使用我们的药物交易数据加速您的研究。

登录

或

核心专利

使用我们的核心专利数据促进您的研究。

登录

或

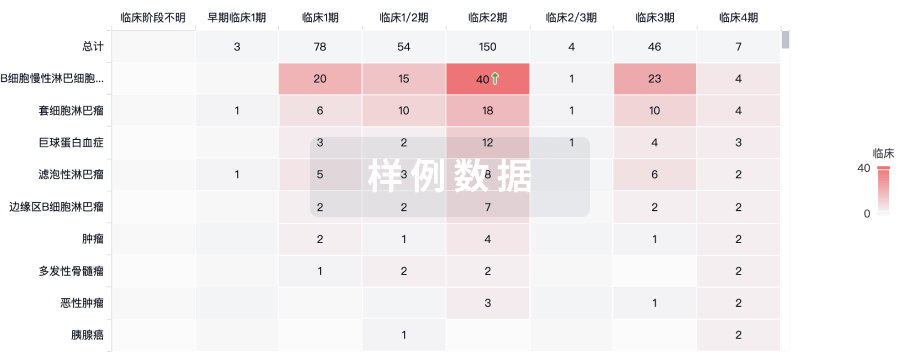

临床分析

紧跟全球注册中心的最新临床试验。

登录

或

批准

利用最新的监管批准信息加速您的研究。

登录

或

特殊审评

只需点击几下即可了解关键药物信息。

登录

或

生物医药百科问答

全新生物医药AI Agent 覆盖科研全链路,让突破性发现快人一步

立即开始免费试用!

智慧芽新药情报库是智慧芽专为生命科学人士构建的基于AI的创新药情报平台,助您全方位提升您的研发与决策效率。

立即开始数据试用!

智慧芽新药库数据也通过智慧芽数据服务平台,以API或者数据包形式对外开放,助您更加充分利用智慧芽新药情报信息。

生物序列数据库

生物药研发创新

免费使用

化学结构数据库

小分子化药研发创新

免费使用