Updated: 2025-05-07

Congenital cataract

Basic information

Synonyms: CATARACT CONGENITAL; Cataract congenital; Cataract, congenital (+22 more)

Description: Cataract that is present at birth.

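Associations

2 drugs associated with congenital cataract

Mechanism of action: ADRA1 agonists (+2 more)

Originator organization:

Highest development phase: Approved

First approval country/region: United States

First approval date: 2014-05-30

Target:

Mechanism of action: GPR161 antagonists

Active organization: -

Originator organization:

Active indication: -

Highest development phase: No progress

First approval country/region: -

First approval date: 1800-01-20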

72 clinical trials associated with congenital cataract

NCT06950619

Associations Between Congenital Cataract and Dental Anomalies: a Cross-sectional Observational Study

Congenital cataract (CC) is the leading cause of treatable childhood blindness and reduced vision worldwide, with a global prevalence of 4.24/10,000 and significant ethnic variations. In most cases it occurs in isolation, and more rarely with other ocular or systemic features. Some syndromic forms of CC, such as the X-linked conditions of Nance-Horan syndrome (NHS - MIM #302350) and oculo-facio-cardio-dental syndrome (OFCD - MIM #300166) often include dental abnormalities, such as Hutchinson's incisors ('screwdriver-shaped') and numerical defects (from dental agenesis to oligodontia). In a previous cohort study on CC, we observed that individuals carrying variants in genes mainly associated with non-syndromic forms of CC (e.g. PAX6) also had dental abnormalities (mainly number defects). This evidence led us to hypothesise a deeper link between cataractogenesis and dental abnormalities, not limited exclusively to syndromic forms of CC.

Start date: 2025-09-01

Sponsor/collaborator:

NCT06751342

Using Psychophysics Methods to Investigate Sensory Dominance After Cataract Surgery

Using Psychophysics Methods for Quantitative Assessment of Sensory Binocular Imbalance After Cataract Surgery

Start date: 2024-12-01

Sponsor/collaborator:

JPRN-jRCT1030240180

Retrospective study on visual acuity prognosis in patients with unilateral congenital cataract.

Start date: 2024-06-21

Sponsor/collaborator: -

100 clinical results associated with congenital cataract

100 translational medicine records associated with congenital cataract

0 patents (pharmaceutical) associated with congenital cataract

3,775 publications (pharmaceutical) associated with congenital cataract

2025-08-01 · American Journal of Ophthalmology

Association of Age With Glaucoma and Visual Acuity Outcomes 10.5 Years After Unilateral Congenital Cataract Surgery

Article

Authors: Lambert, Scott R ; Drews-Botsch, Carolyn ; Chase, Sera ; Alexander, Janet L ; Mansoor, Shaiza ; Das, Urjita ; Kore, Madi ; Cho, Euna ; Magder, Larry ; Levin, Moran Roni ; Kolosky, Taylor ; Forbes, He ; Wong, Claudia K

2025-06-01 · Experimental Eye Research

CD24 is required for sustained transparency of the adult lens

Article

Authors: Varadaraj, Kulandaiappan ; Parreno, Justin ; Duncan, Melinda K ; Faranda, Adam P ; Nie, Xingju ; Rafi, Rabiul ; Balasubramanian, Ramachandran ; Shihan, Mahbubul H ; Mathias, Richard T ; Gao, Junyuan ; Wang, Yan

2025-06-01 · Life Sciences

Different GJA8 missense variants reveal distinct pathogenic mechanisms in congenital cataract

Article

Authors: Wang, Jiangang ; Xu, Hongbo ; Yuan, Lamei ; Deng, Hao ; Li, Zexuan ; Deng, Xinyue ; Cao, Yanna

7 news items (pharmaceutical) associated with congenital cataract

2024-06-25

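Episode highlights

04:00 If the eye is a camera, a cataract is a broken lens

04:47 If you live long enough, can no one escape cataracts?

07:25 Congenital cataract vs. traumatic cataract

09:35 "Creative use" of massage guns is not recommended

11:47 Painless, slowly progressing vision loss may be a cataract sounding the alarm

20:39 Do you have to wait until a cataract is "fully ripe" before treating it?

23:13 Cataract surgery is like clearing out the furniture and then redecorating

26:33 Choosing an intraocular lens is like picking a phone: there are options at every price point, and the right fit matters most

27:27 Steep price cuts for intraocular lenses will further benefit both doctors and patients

29:27 A patient's eye condition can limit what a lens delivers; more features are not always better

34:34 Can cataracts recur? How to deal with "after-cataract"

39:28 Key points: what to watch for before surgery, after surgery, and during recovery

45:00 Helping patients "regain their sight" is a sacred mission

49:36 A painting by a patient with Marfan syndrome, treasured in the study

53:43 How was the "Little Prince of Suspension" made?

59:10 Minimally invasive, precise, and personalized: the future trends in cataract treatment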

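*This program is for information exchange only; guests' views do not represent the position of any company.

What is 《IQ老友说》?

It is a talk-format podcast from IQVIA (艾昆纬) that focuses on the healthcare industry, gathers diverse perspectives, and looks for fresh insights through lively discussion in a relaxed, conversational setting.

《IQ老友说》 is now available on Apple Podcasts, Ximalaya, Xiaoyuzhou, and NetEase Cloud Music.

Statement

Copyright in the original content, and the right of final interpretation, belong to IQVIA China. To republish this article, please email iqviagcmarketing@iqvia.com.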

Tags: clinical results, AHA meeting

2024-06-10 · 生物谷

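Although the human visual system has sophisticated machinery for processing color, the brain has no trouble recognizing objects in black-and-white images. In a new study, researchers at the Massachusetts Institute of Technology (MIT) offer a possible explanation for why the brain is so adept at recognizing both color images and color-degraded images. Using experimental data and computational modeling, they found evidence that this ability is rooted in visual development during infancy. The findings were published in Science on May 24, 2024, in a paper titled "Impact of early visual experience on later usage of color cues."

Early in life, newborns usually receive very limited color information, so the brain is forced to learn to distinguish objects by their luminance, or light intensity, rather than by their color. Later in life, when the retina and cortex are better equipped to process color, the brain incorporates color information as well, while retaining its previously acquired ability to recognize images without relying entirely on color cues. This finding is consistent with earlier work showing that initially restricted visual and auditory input can benefit the development of sensory systems.

"This general idea, that there is something important about the initial limitations that we have in our perceptual system, transcends color vision and visual acuity. Some of the work that our lab has done in the context of audition also suggests that there's something important about placing limits on the richness of information that the neonatal system is initially exposed to," says Pawan Sinha, a professor of brain and cognitive sciences at MIT.

The findings also help explain why children who are born blind but later have their sight restored through removal of congenital cataracts have more difficulty recognizing objects in black and white. These children receive rich color input as soon as their sight is restored and may develop an overreliance on color, which makes them much less resilient to changes in, or removal of, color information.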

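Seeing objects in black and white

The researchers' exploration of how early color experience affects later object recognition grew out of observations of children whose sight was restored after congenital cataracts. In 2005, Sinha launched Project Prakash, an effort in India to identify and treat children with reversible vision loss.

Many of these children are blind because of bilateral cataracts. India has the world's largest population of blind children, estimated at between 200,000 and 700,000.

Project Prakash not only gives these children the chance to regain their sight but also lets them take part in scientific studies of visual development. These studies have helped researchers understand how the brain's organization adapts as sight is restored, how brightness is perceived, and other vision-related phenomena.

Image from Science, 2024, doi:10.1126/science.adk9587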

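In the new study, Sinha and his team gave children a basic object recognition test, showing them both color and black-and-white images. For children born with normal sight, converting images to grayscale did not impair their ability to recognize the depicted objects. In contrast, children whose cataracts had been surgically removed performed significantly worse on images without color.

Based on this observation, the researchers hypothesized that the nature of children's early visual experience is crucial for developing resilience to color changes and the ability to recognize objects without color. In normally sighted newborns, the retinal cone cells are not fully developed, so both visual acuity and color vision are poor; as the cone system develops with age, vision improves markedly. Because the immature visual system initially receives limited color information, the infant brain may be forced to learn to recognize images with sparse color cues.

They further hypothesized that children born with cataracts may rely too heavily on color information to identify objects once their cataracts are removed because, as the study demonstrated experimentally, these children have mature visual systems and good color vision immediately after surgery.

To test this hypothesis rigorously, the team used a standard convolutional neural network, AlexNet, as a computational model of vision. They trained AlexNet to recognize objects under different regimens. In one regimen, the model was initially shown only grayscale images, with color images introduced later, mimicking the gradual enrichment of color perception as an infant's vision matures. In another, only color images were used throughout, approximating the experience of Project Prakash children, who are exposed to a full-color world as soon as their cataracts are removed.

The developmentally inspired model accurately recognized objects in both types of images and was also resilient to other color manipulations. The model trained only on color images generalized poorly to grayscale or hue-manipulated images.

"What happens is that this Prakash-like model is very good with colored images, but it's very poor with anything else. When not starting out with initially color-degraded training, these models just don't generalize, perhaps because of their over-reliance on specific color cues," says MIT postdoc Lukas Vogelsang.

The strong generalization of the developmentally inspired model is not simply the result of training on both color and grayscale images; the order in which the images are presented is equally critical. A model trained on color images first and grayscale images afterward also did poorly at recognizing black-and-white objects, which indicates that the order of the developmental sequence matters, not just its steps.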

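The advantages of limited sensory input

By analyzing the internal organization of the models, the researchers found that models that initially received only grayscale input learned to rely on luminance features to identify objects. When these models later received color input, they did not change their strategy much, because they had already learned an effective one. In contrast, models trained on color images from the start did adjust their strategy once grayscale images were added, but not enough to match the accuracy of the models that received grayscale input first.

A similar phenomenon may occur in the human brain, which is more plastic early in life and can easily learn to identify objects from luminance alone. Early in life, the scarcity of color information may actually benefit the developing brain, because it learns to recognize objects from sparse information.

"As a newborn, the normally sighted child is deprived, in a certain sense, of color vision. And that turns out to be an advantage," says Sidney Diamond, an MIT research affiliate.

Sinha's team has observed that limits on early sensory input can also benefit other aspects of vision as well as the auditory system. In 2022, they used computational models to show that early exposure to only low-frequency sounds, similar to what babies hear in the womb, improves performance on auditory tasks that require analyzing sounds over longer periods of time, such as recognizing emotions. They now plan to explore whether this phenomenon extends to other aspects of development, such as language learning.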

References:

Marin Vogelsang et al. Impact of early visual experience on later usage of color cues. Science, 2024, doi:10.1126/science.adk9587.

2024-05-23

New research offers a possible explanation for how the brain learns to identify both color and black-and-white images. The researchers found evidence that early in life, when the retina is unable to process color information, the brain learns to distinguish objects based on luminance, rather than color.

Even though the human visual system has sophisticated machinery for processing color, the brain has no problem recognizing objects in black-and-white images. A new study from MIT offers a possible explanation for how the brain comes to be so adept at identifying both color and color-degraded images.

Using experimental data and computational modeling, the researchers found evidence suggesting the roots of this ability may lie in development. Early in life, when newborns receive strongly limited color information, the brain is forced to learn to distinguish objects based on their luminance, or intensity of light they emit, rather than their color. Later in life, when the retina and cortex are better equipped to process colors, the brain incorporates color information as well but also maintains its previously acquired ability to recognize images without critical reliance on color cues.

The findings are consistent with previous work showing that initially degraded visual and auditory input can actually be beneficial to the early development of perceptual systems.

"This general idea, that there is something important about the initial limitations that we have in our perceptual system, transcends color vision and visual acuity. Some of the work that our lab has done in the context of audition also suggests that there's something important about placing limits on the richness of information that the neonatal system is initially exposed to," says Pawan Sinha, a professor of brain and cognitive sciences at MIT and the senior author of the study.

The findings also help to explain why children who are born blind but have their vision restored later in life, through the removal of congenital cataracts, have much more difficulty identifying objects presented in black and white. Those children, who receive rich color input as soon as their sight is restored, may develop an overreliance on color that makes them much less resilient to changes or removal of color information.

MIT postdocs Marin Vogelsang and Lukas Vogelsang, and Project Prakash research scientist Priti Gupta, are the lead authors of the study, which appears today in Science. Sidney Diamond, a retired neurologist who is now an MIT research affiliate, and additional members of the Project Prakash team are also authors of the paper.

Seeing in black and white

The researchers' exploration of how early experience with color affects later object recognition grew out of a simple observation from a study of children who had their sight restored after being born with congenital cataracts. In 2005, Sinha launched Project Prakash (the Sanskrit word for "light"), an effort in India to identify and treat children with reversible forms of vision loss.

Many of those children suffer from blindness due to dense bilateral cataracts. This condition often goes untreated in India, which has the world's largest population of blind children, estimated between 200,000 and 700,000.

Children who receive treatment through Project Prakash may also participate in studies of their visual development, many of which have helped scientists learn more about how the brain's organization changes following restoration of sight, how the brain estimates brightness, and other phenomena related to vision.

In this study, Sinha and his colleagues gave children a simple test of object recognition, presenting both color and black-and-white images. For children born with normal sight, converting color images to grayscale had no effect at all on their ability to recognize the depicted object. However, when children who underwent cataract removal were presented with black-and-white images, their performance dropped significantly.

This led the researchers to hypothesize that the nature of visual inputs children are exposed to early in life may play a crucial role in shaping resilience to color changes and the ability to identify objects presented in black-and-white images. In normally sighted newborns, retinal cone cells are not well-developed at birth, resulting in babies having poor visual acuity and poor color vision. Over the first years of life, their vision improves markedly as the cone system develops.

Because the immature visual system receives significantly reduced color information, the researchers hypothesized that during this time, the baby brain is forced to gain proficiency at recognizing images with reduced color cues. Additionally, they proposed, children who are born with cataracts and have them removed later may learn to rely too much on color cues when identifying objects, because, as they experimentally demonstrated in the paper, with mature retinas, they commence their post-operative journeys with good color vision.

To rigorously test that hypothesis, the researchers used a standard convolutional neural network, AlexNet, as a computational model of vision. They trained the network to recognize objects, giving it different types of input during training. As part of one training regimen, they initially showed the model grayscale images only, then introduced color images later on. This roughly mimics the developmental progression of chromatic enrichment as babies' eyesight matures over the first years of life.

Another training regimen comprised only color images. This approximates the experience of the Project Prakash children, because they can process full color information as soon as their cataracts are removed.

The researchers found that the developmentally inspired model could accurately recognize objects in either type of image and was also resilient to other color manipulations. However, the Prakash-proxy model trained only on color images did not show good generalization to grayscale or hue-manipulated images.
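To make the two image manipulations concrete, here is a minimal Python sketch of a grayscale conversion and a hue rotation applied to a single pixel. The article does not say how the researchers produced their grayscale or hue-manipulated images, so the Rec. 601 luma weights, the HSV hue rotation, and the function names `to_grayscale` and `rotate_hue` are all assumptions made for illustration.

```python
import colorsys


def to_grayscale(rgb):
    """Replace an (r, g, b) pixel in [0, 1] with a gray pixel of the same luma.

    Assumption: Rec. 601 luma weights; the study's own conversion is not
    described in the article.
    """
    r, g, b = rgb
    luma = 0.299 * r + 0.587 * g + 0.114 * b
    return (luma, luma, luma)


def rotate_hue(rgb, shift):
    """Rotate the hue of an (r, g, b) pixel by `shift` (fraction of a full turn).

    Saturation and value are left unchanged. This is one plausible kind of
    hue manipulation, not necessarily the one used in the study.
    """
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    return colorsys.hsv_to_rgb((h + shift) % 1.0, s, v)
```

A model that relies on luminance should be largely unaffected by `to_grayscale`, whereas a model that relies on specific colors would be expected to struggle with both functions' outputs.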

"What happens is that this Prakash-like model is very good with colored images, but it's very poor with anything else. When not starting out with initially color-degraded training, these models just don't generalize, perhaps because of their over-reliance on specific color cues," Lukas Vogelsang says.

The robust generalization of the developmentally inspired model is not merely a consequence of it having been trained on both color and grayscale images; the temporal ordering of these images makes a big difference. Another object-recognition model that was trained on color images first, followed by grayscale images, did not do as well at identifying black-and-white objects.
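The training orders compared here can be sketched as a simple schedule that decides, for each epoch, whether a model sees grayscale or color input. This is only an illustration of the ordering idea, not the researchers' training code: the regimen names, the epoch counts, and the halfway switch point (`switch_fraction`) are assumptions, since the article does not give those details.

```python
def input_mode(regimen, epoch, total_epochs, switch_fraction=0.5):
    """Return "gray" or "color" for one epoch under a named regimen.

    Regimen names are hypothetical labels for the orders described in the
    article:
      "gray_to_color": developmentally inspired (grayscale first, then color)
      "color_only":    Prakash-proxy (color throughout)
      "color_to_gray": reversed order (color first, then grayscale)
    """
    if regimen == "color_only":
        return "color"
    # Assumed switch point: a fixed fraction of the way through training.
    in_first_phase = epoch < int(total_epochs * switch_fraction)
    if regimen == "gray_to_color":
        return "gray" if in_first_phase else "color"
    if regimen == "color_to_gray":
        return "color" if in_first_phase else "gray"
    raise ValueError(f"unknown regimen: {regimen}")


def schedule(regimen, total_epochs, switch_fraction=0.5):
    """List the input mode for every epoch of a training run."""
    return [
        input_mode(regimen, epoch, total_epochs, switch_fraction)
        for epoch in range(total_epochs)
    ]
```

For example, `schedule("gray_to_color", 4)` yields two grayscale epochs followed by two color epochs, while `schedule("color_to_gray", 4)` presents the same two kinds of input in the reverse order.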

"It's not just the steps of the developmental choreography that are important, but also the order in which they are played out," Sinha says.

The advantages of limited sensory input

By analyzing the internal organization of the models, the researchers found that those that begin with grayscale inputs learn to rely on luminance to identify objects. Once they begin receiving color input, they don't change their approach very much, since they've already learned a strategy that works well. Models that began with color images did shift their approach once grayscale images were introduced, but could not shift enough to make them as accurate as the models that were given grayscale images first.

A similar phenomenon may occur in the human brain, which has more plasticity early in life, and can easily learn to identify objects based on their luminance alone. Early in life, the paucity of color information may in fact be beneficial to the developing brain, as it learns to identify objects based on sparse information.

"As a newborn, the normally sighted child is deprived, in a certain sense, of color vision. And that turns out to be an advantage," Diamond says.

Researchers in Sinha's lab have observed that limitations in early sensory input can also benefit other aspects of vision, as well as the auditory system. In 2022, they used computational models to show that early exposure to only low-frequency sounds, similar to those that babies hear in the womb, improves performance on auditory tasks that require analyzing sounds over a longer period of time, such as recognizing emotions. They now plan to explore whether this phenomenon extends to other aspects of development, such as language acquisition.

The research was funded by the National Eye Institute of NIH and the Intelligence Advanced Research Projects Activity.

分析

对领域进行一次全面的分析。

登录

或

生物医药百科问答

全新生物医药AI Agent 覆盖科研全链路,让突破性发现快人一步

立即开始免费试用!

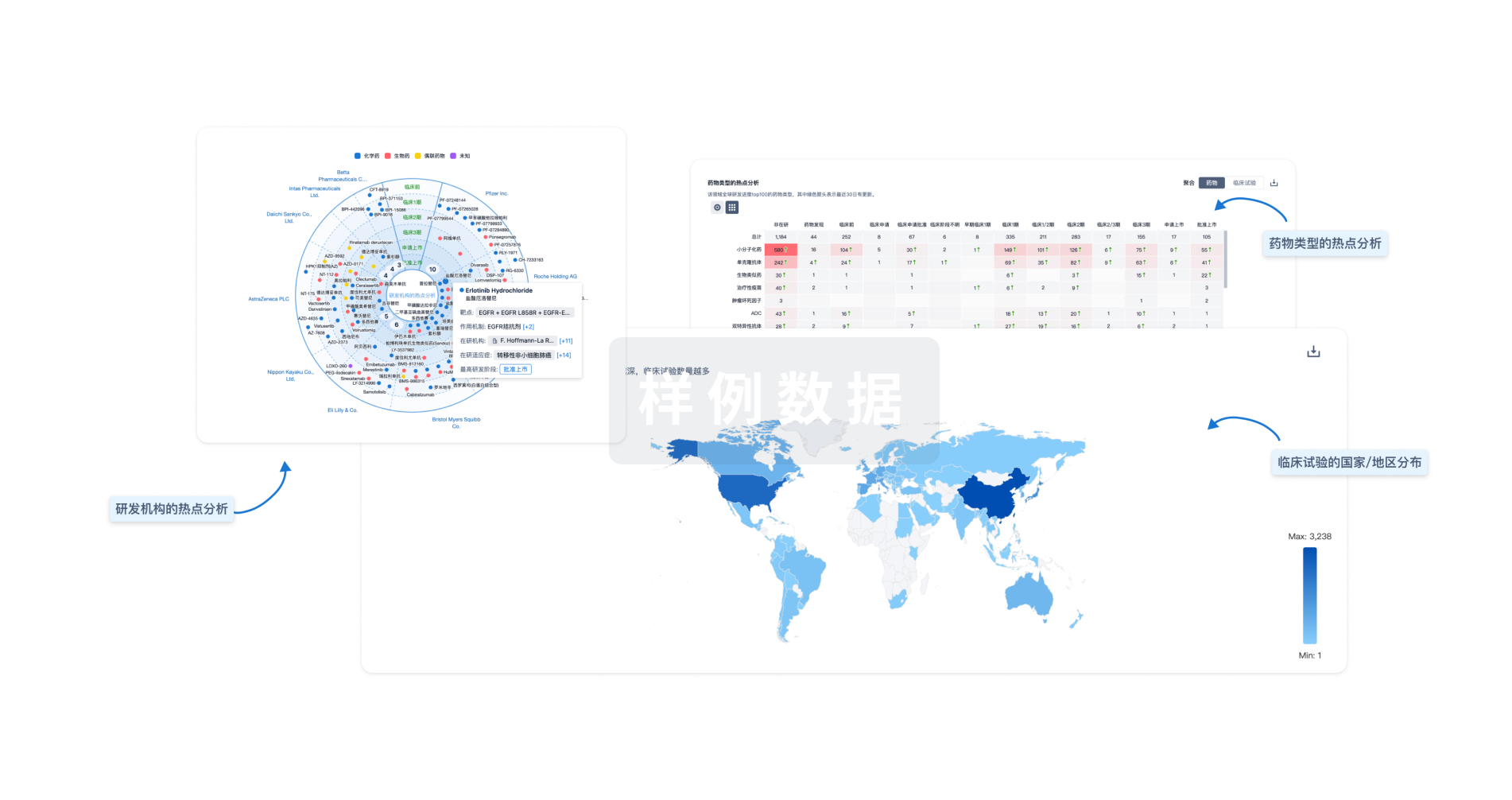

智慧芽新药情报库是智慧芽专为生命科学人士构建的基于AI的创新药情报平台,助您全方位提升您的研发与决策效率。

立即开始数据试用!

智慧芽新药库数据也通过智慧芽数据服务平台,以API或者数据包形式对外开放,助您更加充分利用智慧芽新药情报信息。

生物序列数据库

生物药研发创新

免费使用

化学结构数据库

小分子化药研发创新

免费使用