
Updated: 2026-02-27

Peginterferon lambda-1a(ZymoGenetics, Inc.)


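Overview

Basic information

Drug type: Interferon

Synonyms: Lambda, PEG-interferon lambda, PEG-interleukin-29 (and 6 more)

Target:

Mode of action: Agonist

Mechanism of action: IFNLR1 agonist (interferon lambda receptor 1 agonist)

Inactive indications:

Highest development phase: Phase 3

First approval date: -

Highest development phase (China): Terminated

Special review designations: Fast Track (US), Orphan Drug (US), Orphan Drug (EU), Breakthrough Therapy (US)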


Structure/Sequence

Sequence Code 199195

Source: *****

Related

27 clinical trials related to Peginterferon lambda-1a (ZymoGenetics, Inc.)

NCT05070364

Phase 3, Randomized, Open-Label, Parallel Arm Study to Evaluate the Efficacy and Safety of 180 mcg Peginterferon Lambda-1a (Lambda) Subcutaneous Injection for 48 Weeks in Patients With Chronic Hepatitis Delta Virus (HDV) Infection (LIMT-2)

The Phase 3 LIMT-2 study will evaluate the safety and efficacy of Peginterferon Lambda treatment for 48 weeks with 24 weeks follow-up compared to no treatment for 12 weeks in patients chronically infected with HDV. The primary analysis will compare the proportion of patients with HDV RNA < LLOQ at the 24-week post-treatment visit in the Peginterferon Lambda treatment group vs the proportion of patients with HDV RNA < LLOQ at the Week 12 visit in the no-treatment comparator group.

Start date: 2021-12-21

NCT04388709

A Randomized Phase 2 Trial of Peginterferon Lambda-1a (Lambda) for the Treatment of Hospitalized Patients Infected With SARS-CoV-2 With Non-critical Illness

The main purpose of this research study is to test the safety and effectiveness of an investigational drug peginterferon lambda-1a in treating COVID-19.

Start date: 2020-09-01

Sponsor/collaborator:

NCT04344600

Peginterferon Lambda-1a for the Prevention and Treatment of SARS-CoV-2 Infection

This is a phase 2b prospective, randomized, single-blind, controlled trial of a single subcutaneous injection of peginterferon lambda-1a versus placebo for prevention of SARS-CoV-2 infection in non-hospitalized participants at high risk for infection due to household exposure to an individual with coronavirus disease (COVID-19). The study will also evaluate the regimens in participants with asymptomatic SARS-CoV-2 infection detected at study entry. All participants will be followed for up to 12 weeks.

Start date: 2020-06-29

Sponsor/collaborator:

100 项与 Peginterferon lambda-1a(ZymoGenetics, Inc.) 相关的临床结果

登录后查看更多信息

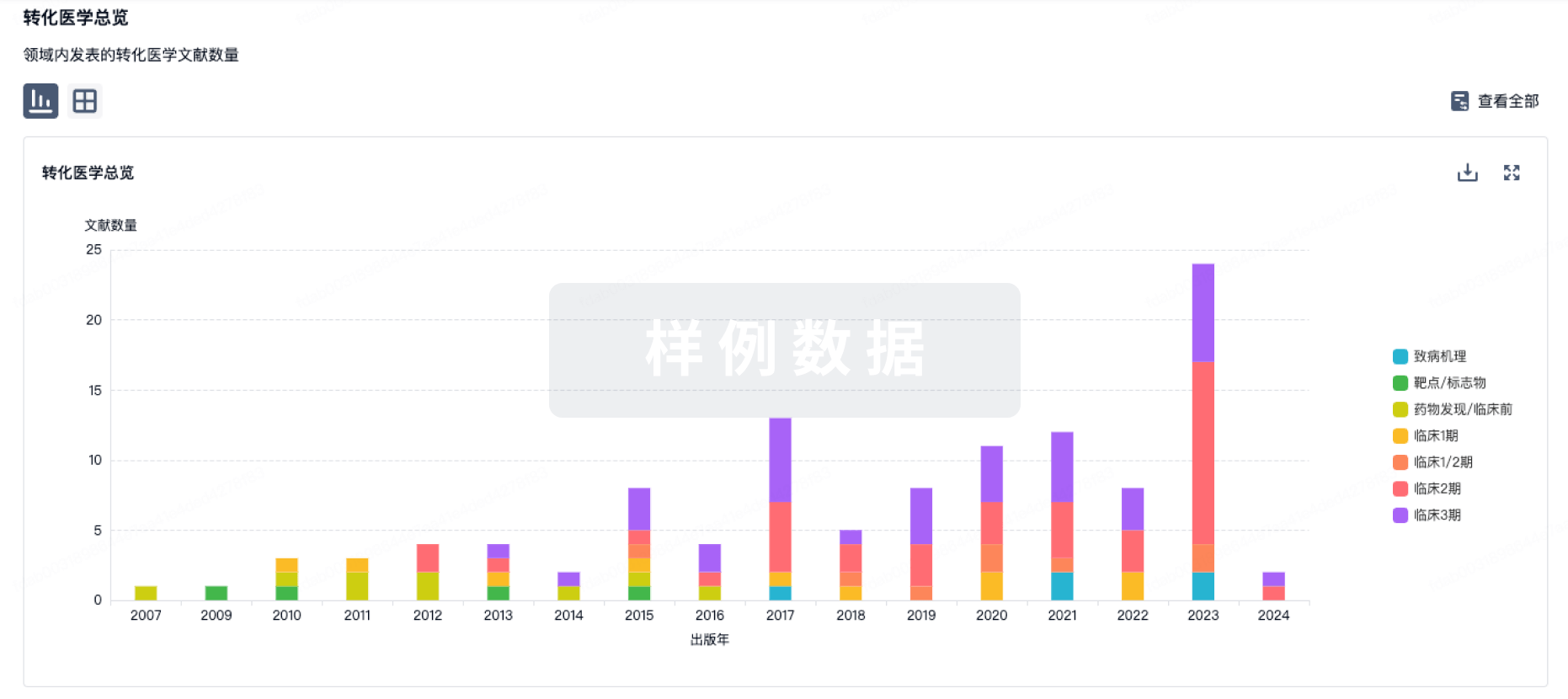

100 项与 Peginterferon lambda-1a(ZymoGenetics, Inc.) 相关的转化医学

登录后查看更多信息

100 项与 Peginterferon lambda-1a(ZymoGenetics, Inc.) 相关的专利(医药)

登录后查看更多信息

35

项与 Peginterferon lambda-1a(ZymoGenetics, Inc.) 相关的文献(医药)2025-05-01·GUT

Antiviral therapy for chronic hepatitis delta: new insights from clinical trials and real-life studies

Review

Authors: Anolli, Maria Paola ; Roulot, Dominique ; Lampertico, Pietro ; Wedemeyer, Heiner

Chronic hepatitis D (CHD) is the most severe form of viral hepatitis, carrying a greater risk of developing cirrhosis and its complications. For decades, pegylated interferon alpha (PegIFN-α) has represented the only therapeutic option, with limited virological response rates and poor tolerability. In 2020, the European Medicines Agency approved bulevirtide (BLV) at 2 mg/day, an entry inhibitor of hepatitis B virus (HBV)/hepatitis delta virus (HDV), which proved to be safe and effective as a monotherapy for up to 144 weeks in clinical trials and real-life studies, including patients with cirrhosis. Long-term BLV monotherapy may reduce decompensating events in patients with cirrhosis. The combination of BLV 2 mg with PegIFN-α increased the HDV RNA undetectability rates on-therapy but not off-therapy, compared with PegIFN monotherapy. However, combination therapy, but not BLV monotherapy, may induce hepatitis B surface antigen (HBsAg) loss in some patients. The PegIFN lambda study has been discontinued due to liver toxicity issues, while lonafarnib boosted with ritonavir showed limited off-therapy efficacy in a phase 3 study. Nucleic acid polymer-based therapy is promising but large studies are still lacking. New controlled trial data come from molecules, such as monoclonal antibodies and/or small interfering RNA, that target HBsAg or HBV RNAs, which demonstrated not only profound HDV suppression, but also HBsAg decline. While waiting for new compounds to be approved as monotherapy or in combination, BLV monotherapy 2 mg/day remains the only approved therapy for CHD, at least in the European Union region.

2023-12-02·Expert opinion on biological therapy

Bulevirtide and emerging drugs for the treatment of hepatitis D

Review

Authors: Han, Steven-Huy B ; Yang, Jamie O ; Xu, Helen Y ; Chen, Phillip H

INTRODUCTION:

Hepatitis delta virus (HDV) causes acute and chronic liver disease that requires the co-infection of the Hepatitis B virus and can lead to significant morbidity and mortality. Bulevirtide is a recently introduced entry inhibitor drug that acts on the sodium taurocholate cotransporting peptide, thereby preventing viral entry to target cells in chronic HDV infection. The mainstay of chronic HDV therapy prior to bulevirtide was interferon alpha, which has an undesirable side effect profile.

AREAS COVERED:

We review bulevirtide data from recent clinical trials in Europe and the United States. Challenges to development and implementation of bulevirtide are discussed. Additionally, we review ongoing trials of emerging drugs for HDV, such as pegylated interferon lambda and lonafarnib.

EXPERT OPINION:

Bulevirtide represents a major shift in treatment for chronic HDV, for which there is significant unmet need. Trials that compared bulevirtide in combination with interferon alpha vs interferon alpha monotherapy demonstrated significant increase in virologic response. Overall, treatment with different doses of bulevirtide were comparable. Bulevirtide was generally well tolerated, and no serious adverse events occurred. Understanding the true prevalence of HDV, as well as continued studies of emerging drugs will prove valuable to the larger goal of eradication of Hepatitis D.

2023-06-01 · The New England Journal of Medicine · CAS Zone 1 (Medicine)

Pegylated Interferon Lambda for Covid-19

Letter

Authors: Huang, Lingtong ; Wang, Mingqiang ; Tang, Lingling

84 news items (pharmaceutical) related to Peginterferon lambda-1a (ZymoGenetics, Inc.)

2026-01-26

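Keywords: RDKit · AutoDock Vina · automation · virtual screening · AIDD

In one sentence:

Stop clicking through docking one ligand at a time; let Python run the whole virtual screening workflow for you.

I. Why automate molecular docking?

If you only dock 1–5 molecules, doing it by hand is tolerable.

In real AIDD work, however, you usually face:

Thousands or tens of thousands of SMILES

Multiple protein conformations

Multiple rounds of parameter scans

The need for uniform scoring, ranking, and filtering

❌ Manual = not reproducible

❌ GUI = not scalable

❌ Scattered scripts = not maintainable

Docking that is meant to end up in a paper or a project has to be automated.

II. Workflow overview (what you will build)

In this issue we implement the following pipeline: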

    SMILES
      ↓ (RDKit)
    3D conformer generation + energy minimization
      ↓
    PDBQT ligand preparation
      ↓
    AutoDock Vina invocation
      ↓
    Docking score & pose
      ↓
    Result aggregation + ranking + filtering

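Goal:

Give me a list of SMILES and I will hand back a docking leaderboard.

III. Step 1: Batch-generate 3D ligand conformers with RDKit

1. Start from SMILES (the source of everything)

    from rdkit import Chem
    from rdkit.Chem import AllChem

    smiles_list = [
        "CCOc1ccc2nc(S(N)(=O)=O)sc2c1",
        "CCN(CC)CCOc1ccc2ncsc2c1",
        "COc1ccc2nc(S)sc2c1",
    ]

    mols = []
    for smi in smiles_list:
        mol = Chem.MolFromSmiles(smi)
        if mol is None:  # MolFromSmiles returns None for an invalid SMILES
            raise ValueError(f"Cannot parse SMILES: {smi}")
        mol = Chem.AddHs(mol)  # add explicit hydrogens before embedding
        # EmbedMolecule returns -1 when no 3D conformer could be generated
        if AllChem.EmbedMolecule(mol, AllChem.ETKDG()) == -1:
            raise RuntimeError(f"3D embedding failed: {smi}")
        AllChem.UFFOptimizeMolecule(mol)  # quick UFF energy minimization
        mols.append(mol)

Why does this step matter?

Docking requires 3D coordinates

ETKDG is among the most widely used conformer-generation methods for small molecules

Adding hydrogens and running a quick minimization can noticeably improve docking stability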

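IV. Step 2: Generate ligand PDBQT files automatically (the key interface)

RDKit itself does not write PDBQT directly. A common route is:

RDKit → PDB → OpenBabel → PDBQT

2. Write PDB files from RDKit

    from rdkit.Chem import rdmolfiles

    for i, mol in enumerate(mols):
        rdmolfiles.MolToPDBFile(mol, f"ligand_{i}.pdb")

3. Batch-convert to PDBQT with OpenBabel

    import subprocess

    for i in range(len(mols)):
        # check=True raises CalledProcessError if obabel exits with an error
        subprocess.run(
            ["obabel", f"ligand_{i}.pdb", "-O", f"ligand_{i}.pdbqt"],
            check=True,
        )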

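Practical research tips

The charge model and the hydrogen-addition strategy affect the docking score

In a paper, state the ligand preparation procedure explicitly

V. Step 3: Call AutoDock Vina from Python

4. Prepare a generic Vina config template

    vina_config = """receptor = receptor.pdbqt
    ligand = {ligand}
    center_x = 10
    center_y = 25
    center_z = -5
    size_x = 20
    size_y = 20
    size_z = 20
    exhaustiveness = 8
    num_modes = 9
    """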
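The template above hard-codes the search box. As a minimal sketch, assuming you want to vary the box per receptor conformation or parameter scan, the same keys can be rendered from arguments. The helper name `render_vina_config` and its signature are hypothetical and not part of the original tutorial; the key names are the ones used in the template.

```python
# Hypothetical helper (not from the original post): renders the same keys
# as the config template, but with the box passed in as arguments.
def render_vina_config(receptor, ligand, center, size,
                       exhaustiveness=8, num_modes=9):
    """Return the text of a Vina config file for one receptor/ligand pair."""
    if len(center) != 3 or len(size) != 3:
        raise ValueError("center and size must each have three components")
    if any(s <= 0 for s in size):
        raise ValueError("box sizes must be positive")
    lines = [f"receptor = {receptor}", f"ligand = {ligand}"]
    for axis, value in zip("xyz", center):
        lines.append(f"center_{axis} = {value}")
    for axis, value in zip("xyz", size):
        lines.append(f"size_{axis} = {value}")
    lines.append(f"exhaustiveness = {exhaustiveness}")
    lines.append(f"num_modes = {num_modes}")
    return "\n".join(lines) + "\n"


# Same values as the template in the text
config_text = render_vina_config(
    "receptor.pdbqt", "ligand_0.pdbqt", center=(10, 25, -5), size=(20, 20, 20)
)
print(config_text)
```

Validating the box before writing the file means a bad parameter fails in Python rather than partway through a long batch.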

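5. Run docking in batch

    def run_vina(ligand_id):
        config_file = f"conf_{ligand_id}.txt"
        with open(config_file, "w") as f:
            f.write(vina_config.format(ligand=f"ligand_{ligand_id}.pdbqt"))
        # Capture stdout instead of passing --log: that option exists in
        # Vina 1.1.2 but is not accepted by the 1.2.x command-line tool.
        proc = subprocess.run(
            ["vina", "--config", config_file, "--out", f"out_{ligand_id}.pdbqt"],
            capture_output=True, text=True, check=True,
        )
        with open(f"log_{ligand_id}.txt", "w") as f:
            f.write(proc.stdout)  # the score table is printed to stdout

    for i in range(len(mols)):
        run_vina(i)

Output: for each ligand, out_{i}.pdbqt (docked poses) and log_{i}.txt (score table).

At this point you have completed automated molecular docking in the real sense of the term.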

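VI. Step 4: Parse and rank the docking results automatically

6. Parse the Vina log files

    def extract_score(log_file):
        """Return the affinity (kcal/mol) of mode 1, or None if it is missing."""
        with open(log_file) as f:
            for line in f:
                # The best pose is the table row whose first column is "1"
                if line.strip().startswith("1 "):
                    return float(line.split()[1])
        return None

    results = []
    for i in range(len(mols)):
        score = extract_score(f"log_{i}.txt")
        results.append((i, score))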
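The parser above keeps only the first mode. As a hedged sketch, the whole result table can be read with a regular expression so that all poses are available for later analysis. The `SAMPLE` text below is an illustrative table in the usual Vina layout, not output from a real run, and the function names are assumptions.

```python
import re

# A result row has four columns: mode, affinity, rmsd l.b., rmsd u.b.
ROW = re.compile(
    r"^\s*(\d+)\s+(-?\d+(?:\.\d+)?)\s+(-?\d+(?:\.\d+)?)\s+(-?\d+(?:\.\d+)?)\s*$"
)


def parse_vina_modes(text):
    """Return [(mode, affinity, rmsd_lb, rmsd_ub), ...] found in Vina output."""
    modes = []
    for line in text.splitlines():
        m = ROW.match(line)
        if m:
            modes.append((int(m.group(1)), float(m.group(2)),
                          float(m.group(3)), float(m.group(4))))
    return modes


def best_affinity(text):
    """Lowest (most favourable) affinity in the table, or None if no rows."""
    modes = parse_vina_modes(text)
    return min(aff for _, aff, _, _ in modes) if modes else None


# Illustrative table only; the numbers are made up for the example.
SAMPLE = """\
mode |   affinity | dist from best mode
     | (kcal/mol) | rmsd l.b.| rmsd u.b.
-----+------------+----------+----------
   1         -7.5      0.000      0.000
   2         -7.1      1.812      2.433
   3         -6.8      2.950      4.106
"""

print(parse_vina_modes(SAMPLE))
print(best_affinity(SAMPLE))
```

Matching on four numeric columns, rather than on a leading "1 ", makes it less likely that an unrelated log line is mistaken for a result row.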

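7. Sort by docking score

    # Drop ligands without a parsed score: None cannot be compared with floats
    results = sorted((r for r in results if r[1] is not None), key=lambda x: x[1])
    for idx, score in results:
        print(f"Ligand {idx}: {score:.2f} kcal/mol")

This step is the "deliverable" of virtual screening.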
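The pipeline overview also lists filtering and result aggregation. A minimal sketch of that last step follows, assuming a score cutoff and a CSV export are what you want. The cutoff of -7.0 kcal/mol, the scores, and the helper names are illustrative assumptions rather than values from the original post; the SMILES are the three from Step 1.

```python
import csv
import io


def rank_hits(results, cutoff=None):
    """Sort (ligand_id, score) pairs by ascending score.

    Pairs with a missing score are dropped. If cutoff is given,
    only scores less than or equal to it are kept.
    """
    scored = [(lig, s) for lig, s in results if s is not None]
    if cutoff is not None:
        scored = [(lig, s) for lig, s in scored if s <= cutoff]
    return sorted(scored, key=lambda pair: pair[1])


def to_csv(ranked, smiles_by_id):
    """Render the ranked hits as CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["rank", "ligand_id", "smiles", "score_kcal_mol"])
    for rank, (lig, score) in enumerate(ranked, start=1):
        writer.writerow([rank, lig, smiles_by_id.get(lig, ""), f"{score:.2f}"])
    return buf.getvalue()


# Illustrative scores; ligand 1 stands for a failed docking run.
results = [(0, -7.5), (1, None), (2, -8.1)]
smiles = {
    0: "CCOc1ccc2nc(S(N)(=O)=O)sc2c1",
    1: "CCN(CC)CCOc1ccc2ncsc2c1",
    2: "COc1ccc2nc(S)sc2c1",
}
ranked = rank_hits(results, cutoff=-7.0)
print(to_csv(ranked, smiles))
```

Keeping the SMILES next to the score in the exported table lets downstream steps work without the intermediate ligand files.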

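VII. Research-grade upgrade directions (very important)

The workflow you now have can be extended naturally in these directions:

| Upgrade direction | Description |
| --- | --- |
| Multi-conformer docking | Generate multiple conformers with RDKit |
| Parallel computing | multiprocessing / Slurm |
| Scaffold filtering | RDKit substructure screening |
| Post-processing | PLIP / interaction fingerprints |
| ML re-ranking | Docking + QSAR fusion |

This is what a real industrial AIDD workflow looks like.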
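For the parallel-computing direction, one possible sketch uses `concurrent.futures` from the standard library. Threads are enough here on the assumption that each worker mostly waits on an external `vina` process. The function `dock_many` and the stand-in `fake_dock` are hypothetical; in practice you would pass a function like `run_vina` followed by score extraction.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def dock_many(ligand_ids, dock_one, max_workers=4):
    """Run dock_one(ligand_id) for every id and collect the outcomes.

    Returns {ligand_id: result}, where a failed run maps to the
    exception it raised so one bad ligand does not stop the batch.
    """
    outcomes = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(dock_one, lig): lig for lig in ligand_ids}
        for fut in as_completed(futures):
            lig = futures[fut]
            try:
                outcomes[lig] = fut.result()
            except Exception as exc:
                outcomes[lig] = exc
    return outcomes


def fake_dock(ligand_id):
    """Stand-in for a real docking call; ligand 2 simulates a failure."""
    if ligand_id == 2:
        raise RuntimeError("docking failed")
    return -6.0 - ligand_id


outcomes = dock_many(range(4), fake_dock)
for lig in sorted(outcomes):
    print(lig, outcomes[lig])
```

Vina is itself multithreaded (its `--cpu` option limits the cores per run), so the number of workers multiplied by the cores per run should stay within what the machine provides.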

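VIII. Discussion time

Think about:

Is the molecule with the top docking score necessarily the best candidate?

How would you use RDKit to filter PAINS / toxic fragments?

When docking and ML predictions conflict, which do you trust more?

IX. One-sentence summary

RDKit's value is not "computing one molecule" but "driving the whole virtual screening system".

You have now:

✔ Generated ligands automatically with RDKit

✔ Called the docking engine from Python

✔ Collected and ranked the docking results automatically

✔ Taken a key step toward an "industrial-grade AIDD pipeline"

Full code and dataset:

https://www.aiddlearning.online/post/62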


2025-12-30

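Published in Adv Ther, June 2025: "Design of two randomized, placebo-controlled phase 3 trials of deucravacitinib, an oral, selective, allosteric TYK2 inhibitor, in systemic lupus erythematosus"

Abstract

Background and objectives:

Deucravacitinib is a first-in-class, oral, selective, allosteric tyrosine kinase 2 (TYK2) inhibitor that showed efficacy on the primary endpoint and all key secondary endpoints in the phase 2 PAISLEY SLE trial in patients with active systemic lupus erythematosus (SLE). This article describes two phase 3 trials [POETYK SLE-1 (NCT05617677), POETYK SLE-2 (NCT05620407)] designed to evaluate the efficacy and safety of deucravacitinib in patients with active SLE. The phase 3 trials aim to replicate the successful elements of the phase 2 trial, including its glucocorticoid-tapering strategy and its disease activity adjudication approach.

Methods:

In these two global, phase 3, randomized, double-blind, placebo-controlled trials, patients aged 18-75 years with active SLE who are receiving background standard-of-care therapy will be randomized 3:2 to deucravacitinib or placebo for 52 weeks of double-blind treatment. Patients receiving glucocorticoids will be instructed to taper (unless significant disease activity is present) to a predefined threshold dose during the double-blind period. At week 52, eligible patients may enter a 104-week open-label extension in which all patients receive deucravacitinib.

Planned outcomes:

The primary endpoint (SLE Responder Index-4 response) and all secondary endpoints will be assessed at week 52. Safety and tolerability will be assessed throughout the trials. In each trial, planned randomization will include patients from multiple countries across North America, South America, Europe, and the Asia-Pacific region.

Conclusion: The ongoing POETYK SLE-1 and SLE-2 trials are important for continuing to evaluate deucravacitinib as a potentially well-tolerated and effective treatment option for patients with SLE.

Trial registration:

ClinicalTrials.gov identifiers: NCT05617677 and NCT05620407.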

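Plain language summary

Systemic lupus erythematosus (SLE) is a long-term autoimmune disease that affects many parts of the body, including the skin, joints, and internal organs. People with SLE may experience different symptoms that negatively affect quality of life. Only a few medicines are currently available to help control SLE symptoms, and these can cause unwanted side effects and may not improve all symptoms.

Two studies (POETYK SLE-1 and POETYK SLE-2) are under way to test a medicine called deucravacitinib, which is taken as an oral tablet. Deucravacitinib works differently from other available medicines: it targets a specific protein that plays a key role in the body's immune response, which may relieve symptoms with fewer side effects. Deucravacitinib has been tested and approved for use in adults with psoriasis. It has also been tested in adults with psoriatic arthritis with positive results, and a study in a smaller number of adults with SLE also showed benefit. This article describes how deucravacitinib is being tested in POETYK SLE-1 and POETYK SLE-2, which will enroll a larger number of adults with SLE. The studies will be conducted worldwide, and the main study period will last 1 year for participants. Participants may choose to continue for a further 2 years, for a total of about 3 years. The results will help doctors and scientists understand whether deucravacitinib can improve symptoms in adults with SLE safely and effectively.

Keywords: clinical trial; deucravacitinib; phase 3; SRI(4); study protocol; systemic lupus erythematosus; trial design; TYK2 inhibitor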

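Key summary points

Systemic lupus erythematosus is a chronic autoimmune disease characterized by heterogeneous clinical manifestations and multisystem involvement; there is an unmet need for new treatments that are well tolerated and effective.

This protocol describes two global, randomized, placebo-controlled, 52-week phase 3 studies designed to evaluate the efficacy, safety, and tolerability of deucravacitinib. Deucravacitinib is an oral, selective, allosteric tyrosine kinase 2 (TYK2) inhibitor with a unique mechanism of action that is distinct from that of Janus kinase (JAK) 1, 2, 3 inhibitors.

Because a variety of background medications are in use, the trial design includes a stringent glucocorticoid-tapering regimen to help distinguish treatment effect from placebo response.

Trial endpoints will include several composite measures and organ-specific domains, covering overall disease activity, mucocutaneous and musculoskeletal manifestations, patient-reported outcomes, and safety measures.

The results will provide new insight into the utility of allosteric TYK2 inhibition in the treatment of systemic lupus erythematosus.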

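Introduction

Systemic lupus erythematosus (SLE) is a chronic, heterogeneous autoimmune disease, usually accompanied by systemic inflammation, that leads to widespread organ and tissue damage [1]. Patients with SLE can present with clinical features ranging from mild to life-threatening in the following organ domains: constitutional, mucocutaneous, neuropsychiatric, musculoskeletal, cardiorespiratory, gastrointestinal, ophthalmic, renal, and hematological [2].

Current standard of care includes antimalarials, disease-modifying antirheumatic drugs, immunosuppressants, and biologics, with or without glucocorticoids [3]. Some of these therapies are used off-label, with limited evidence supporting their efficacy and safety in patients with SLE [3, 4]. Although therapies such as anifrolumab and belimumab have been approved for SLE, the treatment goals of remission and low disease activity are not commonly achieved [3]. Patients with SLE need new targeted therapies that are well tolerated, safe, and effective.

Tyrosine kinase 2 (TYK2) mediates signaling of certain immune cytokines that play a key role in SLE pathophysiology, including type I and type III interferons, interleukin (IL)-23, and IL-12 [5–10]. Deucravacitinib is a first-in-class, oral, selective, allosteric TYK2 inhibitor approved in multiple countries for adults with moderate-to-severe plaque psoriasis [11–14]. It is currently being studied in one phase 3 trial in Sjögren's syndrome (NCT05946941, POETYK SjS-1), in two phase 3 trials in psoriatic arthritis (NCT04908202, POETYK PsA-1; NCT04908189, POETYK PsA-2), and in the two phase 3 trials in active SLE described here (NCT05617677, POETYK SLE-1; NCT05620407, POETYK SLE-2).

Deucravacitinib has a unique mechanism of action distinct from that of Janus kinase (JAK) 1, 2, 3 inhibitors [5]. It binds selectively to the regulatory domain of TYK2 and locks the enzyme in an inactive state, thereby inhibiting downstream signaling of interferons and interleukins, including type I and type III interferons, IL-23, and IL-12 [5]. By contrast, JAK inhibitors bind the adenosine triphosphate (ATP) binding site on the active domain and inhibit downstream signal transduction initiated by several JAK family enzymes [5].

In the 48-week, randomized, double-blind, placebo-controlled phase 2 PAISLEY SLE trial (NCT03252587) in patients with active SLE, deucravacitinib showed efficacy across multiple outcome measures [15]. The proportion of patients achieving an SLE Responder Index-4 (SRI[4]) response at week 32 was significantly higher with deucravacitinib 3 mg twice daily (BID) (58.2%) than with placebo (34.4%), a difference of 23.8% versus placebo (adjusted odds ratio, 2.8; 95% CI, 1.5–5.1; P < 0.001). In addition, deucravacitinib 3 mg BID reached statistical significance versus placebo on all key secondary endpoints. In that trial, deucravacitinib was generally safe and well tolerated in patients with SLE, with a safety profile consistent with that observed in studies of deucravacitinib in other indications, including psoriasis and psoriatic arthritis [16–18].

The ongoing phase 3 studies described here (NCT05617677, POETYK SLE-1; NCT05620407, POETYK SLE-2) will evaluate the efficacy, safety, and tolerability of deucravacitinib in a larger number of patients with active SLE. These phase 3 trials aim to replicate the successful elements of the phase 2 trial [15]. Because of the heterogeneity of background therapy and known adherence problems [19], the design includes a stringent glucocorticoid-tapering regimen, which is considered a key design element for improving the ability to distinguish treatment effect from placebo response. The design also includes a rigorous disease activity adjudication process and the use of composite endpoints and relatively stringent organ-specific endpoints, which may improve detection of treatment effects given the multifaceted nature of lupus manifestations [19]. Here we describe the study design, eligibility criteria, and key endpoints of these two ongoing phase 3 trials (Fig. 1).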

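Methods

Study design

POETYK SLE-1 (NCT05617677) and POETYK SLE-2 (NCT05620407) are two global, phase 3, randomized, double-blind, placebo-controlled trials; each study started in January 2023. Both trials comprise four study periods: a 4-week screening period, a 52-week double-blind treatment period, an optional 104-week open-label long-term extension (LTE) period, and a 4-week safety follow-up period. The total study duration is up to 60 weeks for patients who do not enter the LTE and approximately 164 weeks for patients who do.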
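The two totals follow directly from the four period lengths given in the paragraph. A small arithmetic check, using only numbers stated in the text (the variable names are ours):

```python
# Period lengths in weeks, as stated in the study design paragraph
SCREENING = 4
DOUBLE_BLIND = 52
LONG_TERM_EXTENSION = 104
SAFETY_FOLLOW_UP = 4

# Patients who skip the optional extension
weeks_without_lte = SCREENING + DOUBLE_BLIND + SAFETY_FOLLOW_UP

# Patients who also complete the optional extension
weeks_with_lte = (SCREENING + DOUBLE_BLIND
                  + LONG_TERM_EXTENSION + SAFETY_FOLLOW_UP)

print(weeks_without_lte, weeks_with_lte)
```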

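Sample selection

Each trial is expected to enroll 490 patients. Eligible male and female patients are those who provide written informed consent and meet the inclusion criteria. Inclusion criteria include: age 18-75 years with a diagnosis of active SLE; SLE diagnosed ≥ 24 weeks before the screening visit; fulfillment of the 2019 European League Against Rheumatism/American College of Rheumatology classification criteria for SLE [20]; a Systemic Lupus Erythematosus Disease Activity Index 2000 (SLEDAI-2K) total score ≥ 6 and a clinical SLEDAI-2K score ≥ 4 with joint involvement, cutaneous vasculitis, and/or rash; at least one British Isles Lupus Assessment Group (BILAG) A or two BILAG B scores at screening, including at least one protocol-specified BILAG-based mucocutaneous or musculoskeletal SLE manifestation; and ≥ 1 background SLE therapy for ≥ 12 weeks before the screening visit (see Table 1 for further details of the inclusion criteria).

Patients will be excluded if they meet any key exclusion criterion, including a diagnosis of drug-induced rather than idiopathic SLE, or another autoimmune disease (for example, multiple sclerosis, psoriasis, or inflammatory bowel disease). Patients with type 1 autoimmune diabetes, autoimmune thyroid disease, celiac disease, or secondary Sjögren's syndrome are not excluded. Patients in Japan are enrolled only in POETYK SLE-2 and will be excluded if they test positive for β-D-glucan. Other exclusion criteria are listed in Table 1.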

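Randomization and treatment

Planned randomization in each trial includes patients assigned to deucravacitinib 3 mg BID or placebo, across countries in North America, South America, Europe, and the Asia-Pacific region. To increase patients' chance of receiving active study treatment, patients will be randomized 3:2 to oral deucravacitinib 3 mg BID or matching oral placebo BID for 52 weeks of double-blind treatment. The protocol does not specify dose adjustments.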
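To make the 3:2 ratio concrete, the sketch below applies it to the planned enrollment of 490 patients per trial stated under sample selection. The per-arm counts are derived here for illustration and are not given in the source; the helper `split_by_ratio` is hypothetical.

```python
def split_by_ratio(total, ratio):
    """Split total into integer parts proportional to ratio.

    Any remainder left by integer division is handed out one unit
    at a time, starting with the first arm.
    """
    parts = sum(ratio)
    arms = [total * r // parts for r in ratio]
    for i in range(total - sum(arms)):
        arms[i % len(arms)] += 1
    return arms


planned_enrollment = 490  # per trial, from the sample selection section
active, placebo = split_by_ratio(planned_enrollment, (3, 2))
print(active, placebo)                # patients per arm under exact 3:2
print(active / planned_enrollment)    # chance of active treatment
```

Under 3:2 allocation each patient has a 60% chance of receiving active treatment, compared with 50% under 1:1, which is the motivation the text gives for the ratio.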

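Eligible patients will be centrally randomized using interactive response technology (IRT) according to stratification criteria. Blinded treatment assignment will be managed through the IRT. Treatment assignment will not be disclosed to investigators until the primary analysis has been locked, all final analyses have been completed, and the final results of the double-blind controlled treatment period have been released. Treatment codes will be restricted to designated sponsor research and development personnel and the data monitoring committee. Site staff, the sponsor, designees, and patients and their families will remain blinded to treatment assignment. In a medical emergency or in the event of pregnancy, unblinding can be performed through the IRT system.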

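Concomitant medications

Background oral glucocorticoid (OGC) therapy is permitted but not required. For patients receiving OGCs, the dose must have been stable for ≥ 2 weeks before screening, must not exceed 30 mg/day (prednisone or equivalent) at screening, and must remain stable until week 4. Treatment for ≥ 12 weeks before the screening visit with one immunosuppressant (azathioprine, 6-mercaptopurine, methotrexate, leflunomide, tacrolimus, mizoribine, or mycophenolate mofetil) or one antimalarial (chloroquine, hydroxychloroquine, or quinacrine) is required; patients may also receive one drug from each class (one immunosuppressant plus one antimalarial) but must not use more than one drug from the same class. Doses must have been stable for 8 weeks before the screening visit and must remain stable after randomization and throughout study participation. For patients previously treated with anifrolumab or belimumab, washout periods of 15 weeks and 12 weeks, respectively, are required before randomization.

As in the phase 2 PAISLEY SLE study, which included an OGC component in its design [15], patients receiving OGCs will be instructed to taper unless significant disease activity is present, defined as: (1) new or worsening BILAG or SLEDAI-2K activity in the central nervous system; (2) vasculitis; (3) myositis; (4) cardiorespiratory, gastrointestinal, ophthalmic, or renal abnormalities; (5) thrombocytopenia; (6) new or worsening hemolytic anemia; (7) a Cutaneous Lupus Erythematosus Disease Area and Severity Index (CLASI) activity score ≥ 10; or (8) an active joint count ≥ 8 with both tenderness and swelling. Tapering is also not recommended if, at a tapering time point during the double-blind treatment period, it is judged that tapering could expose the patient to unacceptable risk.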

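Measurements and planned outcomes

Patient visits and assessments will take place during the screening period, the treatment period, and the optional open-label LTE period in accordance with the study protocol. Primary and key secondary endpoints are detailed in Table 2.

The primary endpoint is the proportion of patients achieving an SRI(4) response at week 52 [21]. All secondary endpoints will be assessed at week 52. Key secondary endpoints include the proportion of patients who: (1) achieve a BILAG-based Composite Lupus Assessment response [22], and who achieve both an SRI(4) response and a BILAG-based Composite Lupus Assessment response (dual responders); (2) among patients with a baseline CLASI activity score ≥ 10, achieve a ≥ 50% reduction from baseline in CLASI activity score (CLASI-50) [23]; (3) achieve Lupus Low Disease Activity State (LLDAS) [24]; (4) maintain a glucocorticoid dose of ≤ 7.5 mg/day at week 24 without exceeding the protocol-specified limits on dose increases; and (5) among patients with ≥ 6 active joints at baseline, achieve a ≥ 50% reduction in active joint count (joint count 50); as well as the change from baseline in patient-reported fatigue according to the Functional Assessment of Chronic Illness Therapy-Fatigue scale score.

Multiple exploratory endpoints are planned, including the percentage change from baseline in the 40-joint count, which measures the areas most affected by SLE and with important clinical impact on patient function and quality of life. Each joint will be assessed for the presence of tenderness and/or swelling.

Key safety endpoints include the proportion of patients experiencing adverse events (AEs), serious AEs, or AEs leading to discontinuation; the number of deaths; and the number of patients experiencing AEs of special interest or abnormalities in laboratory results and vital signs. All AEs reported by patients, caregivers, or surrogates will be recorded by the investigator.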

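Data collection

Data will be captured at applicable sites through an electronic data capture system. The main analysis populations for the primary, secondary, exploratory, safety, and other analyses are: the enrolled population (all patients who signed informed consent), the randomized population (all patients randomized to a treatment group), and the as-treated population (all randomized patients who received ≥ 1 dose of any study treatment).

Clinical trial data and privacy are protected at every stage of processing. The sponsor maintains security standards consistent with the US National Institute of Standards and Technology Cybersecurity Framework. Internal or government inspectors may audit case report forms, source documents, or study files.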

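Data analysis

The primary hypothesis of these studies is that the probability of achieving an SRI(4) response at 52 weeks of treatment with oral deucravacitinib 3 mg BID is significantly higher than the probability of achieving an SRI(4) response after 52 weeks of treatment with placebo BID.

The SRI(4) response assumptions are based on the results of the phase 2 SLE study PAISLEY SLE [15]. Notably, relatively high and variable placebo response rates are common in SLE trials, particularly for composite endpoints [19]. This is thought to result from several factors, including poor adherence to background medication before enrollment, complex composite endpoints, and variation in disease severity and organ manifestations between patients and within patients over time [19]. This challenge has indeed been documented consistently in SLE trials, including the phase 3 TULIP and BLISS trials [15, 25–27], which had placebo response rates similar to those in the PAISLEY SLE trial [15]. It is therefore an important consideration in the current study design.

No interim analysis is currently planned before the primary analysis lock. The primary analysis will be locked once all randomized patients have completed 52 weeks of treatment (or discontinued early) and patients who chose to discontinue before the LTE period have completed the 28-day safety follow-up.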

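Strengths and limitations

These POETYK SLE trials have limitations. One is that they enroll only patients with active SLE and exclude patients with severe organ-threatening disease, which may limit generalizability. Additional studies may therefore be needed to address the unmet need in patients with severe organ-threatening disease. In addition, the trials require patients to have mucocutaneous or musculoskeletal manifestations at enrollment, which may limit the number of patients with SLE who are eligible.

Strengths of these studies include their double-blind, placebo-controlled design, 3:2 randomization, and relatively long duration, with 52 weeks of double-blind treatment and an optional 2-year open-label extension. These phase 3 studies are distinctive in that they closely mirror the design and conduct of the successful phase 2 PAISLEY SLE trial, which implemented several trial design elements to optimize interpretability, including a stringent OGC-tapering regimen, the use of composite and organ-specific endpoints, and a comprehensive adjudication process [15]. The positive phase 2 results also helped inform new approaches adopted in these phase 3 trials, including evaluating as a secondary endpoint the proportion of patients receiving a glucocorticoid dose of ≤ 7.5 mg/day at week 24 without exceeding the protocol-specified limits on dose increases.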

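Ethics

All patients must provide informed consent, and the protocols (codes IM011246 and IM011247, dated August 12, 2022) must be approved by the relevant institutional review board (IRB)/independent ethics committee (IEC) at each study site. Investigators must obtain IRB/IEC approval of the informed consent form and other required study documents, obtain signed and dated consent forms, and revise the consent form when new information relevant to participation emerges. Both studies will be conducted in accordance with international guidelines, including the Declaration of Helsinki, the Council for International Organizations of Medical Sciences guidelines, and the applicable International Council for Harmonisation Good Clinical Practice guidelines. Investigators are responsible for study oversight at their sites. Reports, updates, and other information must be provided to the IRB/IEC annually or more often. Amendments must be submitted to the IRB/IEC as soon as possible and may be implemented only after approval.

These studies will use an external, independent data monitoring committee. For both studies, a steering committee continuously reviews all trial activities in order to provide prompt guidance and agree on protocol amendments based on feedback from clinical trial sites. This approach allows study investigators to adapt quickly to challenges that arise when the protocol is implemented in the clinic.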

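Dissemination

The results of these studies will be submitted for publication in peer-reviewed journals and presented at national and international scientific meetings. Study-related information, including the protocol, statistical analysis plan, and clinical study report, will be registered and posted on ClinicalTrials.gov.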

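Discussion

SLE is challenging to manage because of its heterogeneity and complexity. Several phase 3 clinical trials of disease-modifying therapies for SLE have been unsuccessful [26, 28, 29], possibly because of disease heterogeneity or problems related to study design or the endpoints chosen [19, 30]. Anifrolumab and belimumab have been approved and recommended for the treatment of SLE in multiple countries [31–37]; however, both are inconsistent in achieving remission and low disease activity [3]. Moreover, they are the only two drugs specifically approved for SLE in nearly 70 years [28, 34]. A substantial unmet need remains among patients with SLE for new treatments with better efficacy and safety. Continued research is essential and should focus on therapies that are accessible and convenient, have fewer side effects, may reduce steroid use, and can improve quality of life.

Many rheumatic diseases share inflammatory signaling pathways that signal through the JAK family, including TYK2 [5]. Many proteins signal through JAK 1, 2, 3, including proteins involved in immune signaling and in other physiological functions such as lipid metabolism and blood cell development [6–9]. TYK2 is selectively involved in signal transduction of certain cytokines involved in immune responses and has not been shown to be involved in metabolic and/or hematopoietic functions [6–10]. In addition, polymorphisms in the TYK2 gene have been shown to alter susceptibility to SLE in several populations [38, 39].

Deucravacitinib represents a new class of selective TYK2 inhibitors that act through a novel allosteric mechanism [40]. JAK inhibitors that are approved or in development act competitively at the ATP binding site, blocking ATP and the subsequent downstream phosphorylation and signal transduction [40]. Deucravacitinib uniquely binds the TYK2 regulatory domain, locking the enzyme in an inactive state [5, 40]. A major advantage of targeting the regulatory domain rather than the catalytic domain is the ability to maintain a high level of selectivity for TYK2 while leaving JAK 1, 2, 3 largely unaffected [40].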

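Compared with JAK 1, 2, 3 inhibitors, deucravacitinib has a unique selectivity profile: at clinically relevant doses it selectively inhibits TYK2 while preserving > 99% of JAK 1, 2, 3 function [41]. JAK 1, 2, 3 inhibitors that have been evaluated in SLE, such as upadacitinib and baricitinib, inhibit JAK 1, 2, 3 to varying degrees but do not inhibit TYK2 [42]. The impact of these mechanistic differences between selective TYK2 inhibition with deucravacitinib and JAK 1, 2, 3 inhibitors may be reflected in the inconsistent results observed in SLE trials involving JAK inhibitors. For example, a recent meta-analysis of seven randomized controlled trials and eight case reports, which classified deucravacitinib as a JAK inhibitor, found that JAK inhibitors were more effective than placebo in reducing SLE disease activity, based on attainment of SRI(4), British Isles Lupus Assessment Group-based Composite Lupus Assessment (BICLA), and LLDAS, and were effective for skin and joint manifestations [43]. However, once the phase 2 PAISLEY SLE trial of deucravacitinib was excluded from that meta-analysis, no significant difference in BICLA or LLDAS response was observed between the JAK inhibitor and placebo groups [43]. In addition, of the two published phase 3 trials of baricitinib in SLE (SLE-BRAVE-I and -II), the first met its primary outcome of SRI(4) whereas the second did not [43–45]. By contrast, the efficacy of deucravacitinib in SLE has been observed consistently in preclinical studies and in the PAISLEY SLE trial, in which the 3 mg BID dose met the primary and all key secondary endpoints, including SRI(4), BICLA, CLASI-50, LLDAS, and reduction in active joint count [5, 15].

Allosteric inhibition of TYK2 by deucravacitinib prevents the downstream effects of several cytokines, including type I and type III interferons, IL-23, and IL-12, which are implicated in the pathophysiology of multiple autoimmune diseases, including SLE [6–10]. Overall, cytokines play an important role in SLE pathogenesis by contributing to immune dysregulation and local inflammatory responses that lead to subsequent tissue damage [46]. TYK2-mediated cytokines contribute to the lupus inflammatory cycle that leads to autoimmunity, systemic pathology, and local manifestations [47]. Elevated type I interferon levels are a hallmark of SLE and are associated with disease activity [48] and with clinical manifestations such as muscle and joint pain, headache, fatigue, weight loss, pleuritis, and fever [49, 50]. Genetic studies indicate that most SLE susceptibility genes encode proteins whose function is linked to type I interferon production [49]. Type III interferons are also elevated in patients with SLE, and higher levels are associated with increased disease activity and clinical manifestations, including arthritis, nephritis, serositis, and skin involvement [10].

In addition to type I and type III interferons, IL-23 and IL-12 have emerged as cytokines with a key role in SLE pathophysiology and worse clinical outcomes [6–10, 51]. Increased IL-23 expression has been observed in patients with active SLE, particularly in those with lupus nephritis, with higher expression in patients who do not respond to immunosuppressive therapy [51, 52]. The IL-12 pathway is associated with genetic risk of SLE, and higher levels have been found to be associated with worse disease activity [51]. The ability of deucravacitinib to block the downstream effects of type I and type III interferons, IL-23, and IL-12, cytokines known to be increased in patients with SLE [6–10], provides a strong pathophysiological rationale for the potential benefit of selective TYK2 inhibition in patients with SLE.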

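The observed safety profile of deucravacitinib is attributed to its novel mechanism of action as a TYK2 inhibitor, which distinguishes it from JAK 1, 2, 3 inhibitors [5, 9]. For example, no clinically meaningful changes in laboratory trends characteristic of JAK inhibitors (for example, decreases in lymphocyte, neutrophil, and platelet counts; increases in cholesterol, liver enzyme, and creatinine levels; and changes in hemoglobin levels) [9] were observed with deucravacitinib in studies of up to 4 years in patients with psoriasis [17, 18, 53, 54] or at week 16 in patients with psoriatic arthritis (a phase 2 trial) [16]. Similar results were observed for most laboratory parameters for up to 48 weeks in patients with SLE in the phase 2 PAISLEY SLE trial [15]; however, a slight increase in lymphocyte count was observed over 48 weeks, which may reflect improvement in the underlying disease, because lymphopenia is common in patients with SLE [55] and is associated with SLE disease activity [56].

Safety data from the phase 2 PAISLEY SLE trial also showed similar rates of serious AEs in patients treated with deucravacitinib and those treated with placebo, with no deaths, systemic opportunistic infections, tuberculosis, hematological malignancies, major adverse cardiovascular events, or thromboembolic events in any group [15]. Rates of oral herpes, acne, and rash were slightly higher with deucravacitinib than with placebo, although no increase in herpes zoster infection was observed in patients treated with deucravacitinib [15]. Deucravacitinib has shown a favorable safety profile in patients with SLE and was well tolerated in patients with psoriasis or psoriatic arthritis [16, 18, 53]. In addition to its favorable safety profile, deucravacitinib has shown efficacy in patients with psoriasis, psoriatic arthritis, and SLE [15–18, 53].

The ongoing phase 3 POETYK SLE-1 and SLE-2 trials will further evaluate the efficacy and safety of the first-in-class, oral, selective TYK2 inhibitor deucravacitinib in patients with active SLE. Patient recruitment for these clinical trials is ongoing.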

REFERENCES

1. Cojocaru M, Cojocaru IM, Silosi I, Vrabie CD. Manifestations of systemic lupus erythematosus. Maedica (Bucur). 2011;6:330–6.

2. Isenberg DA, Rahman A, Allen E, et al. BILAG 2004. Development and initial validation of an updated version of the British Isles Lupus Assessment Group’s disease activity index for patients with systemic lupus erythematosus. Rheumatology (Oxford). 2005;44:902–6.

3. Fanouriakis A, Kostopoulou M, Andersen J, et al. EULAR recommendations for the management of systemic lupus erythematosus: 2023 update. Ann Rheum Dis. 2024;83:15–29.

4. Jin HZ, Li YJ, Wang X, et al. Efficacy and safety of telitacicept in patients with systemic lupus erythematosus: a multicentre, retrospective, real-world study. Lupus Sci Med. 2023;10:e001074.

5. Burke JR, Cheng L, Gillooly KM, et al. Auto-immune pathways in mice and humans are blocked by pharmacological stabilization of the TYK2 pseudokinase domain. Sci Transl Med. 2019;11:eaaw1736.

6. Gotthardt D, Trifinopoulos J, Sexl V, Putz EM. JAK/STAT cytokine signaling at the crossroad of NK cell development and maturation. Front Immunol. 2019;10:2590.

7. Hammarén HM, Virtanen AT, Raivola J, Silvennoinen O. The regulation of JAKs in cytokine signaling and its breakdown in disease. Cytokine. 2019;118:48–63.

8. Schwartz DM, Kanno Y, Villarino A, Ward M, Gadina M, O’Shea JJ. JAK inhibition as a therapeutic strategy for immune and inflammatory diseases. Nat Rev Drug Discov. 2017;16:843–62.

9. Winthrop KL. The emerging safety profile of JAK inhibitors in rheumatic disease. Nat Rev Rheumatol. 2017;13:234–43.

10. Goel RR, Kotenko SV, Kaplan MJ. Interferon lambda in inflammation and autoimmune rheumatic diseases. Nat Rev Rheumatol. 2021;17:349–62.

11. European Medicines Agency. SOTYKTU™ (deucravacitinib) summary of product characteristics [Internet]. 2023 [2024 July 3]. Available from: https://www.ema.europa.eu/en/documents/product-information/sotyktu-epar-product-informationen.pdf. Accessed July 3, 2024.

12. US Food and Drug Administration. SOTYKTU™ (deucravacitinib) prescribing information [Internet]. 2022 [2024 July 3]. Available from: https://www.accessdata.fda.gov/drugsatfda_docs/label/2022/214958s000lbl.pdf. Accessed July 3, 2024.

13. Bristol Myers Squibb. SOTYKTU™ (deucravacitinib) product monograph [Internet]. 2022 [2024 July 3]. Available from: https://www.bms.com/assets/bms/ca/documents/productmonograph/SOTYKTU_EN_PM.pdf. Accessed July 3, 2024.

14. Therapeutic Goods Administration. SOTYKTU™ (deucravacitinib) Australian product information [Internet]. 2022 [2024 July 3]. Available from: https://www.tga.gov.au/sites/default/files/2023-09/auspar-sotyktu-230830-pi.pdf. Accessed July 3, 2024.

15. Morand E, Pike M, Merrill JT, et al. Deucravacitinib, a tyrosine kinase 2 inhibitor, in systemic lupus erythematosus: a phase II, randomized, double-blind, placebo-controlled trial. Arthritis Rheumatol. 2023;75:242–52.

16. Mease PJ, Deodhar AA, van der Heijde D, et al. Efficacy and safety of selective TYK2 inhibitor, deucravacitinib, in a phase II trial in psoriatic arthritis. Ann Rheum Dis. 2022;81:815–22.

17. Papp K, Gordon K, Thaçi D, et al. Phase 2 trial of selective tyrosine kinase 2 inhibition in psoriasis. N Engl J Med. 2018;379:1313–21.

18. Strober B, Thaçi D, Sofen H, et al. Deucravacitinib versus placebo and apremilast in moderate to severe plaque psoriasis: efficacy and safety results from the 52-week, randomized, double-blinded, phase 3 Program for Evaluation of TYK2 inhibitor psoriasis second trial. J Am Acad Dermatol. 2023;88:40–51.

19. Merrill JT, Manzi S, Aranow C, et al. Lupus community panel proposals for optimising clinical trials: 2018. Lupus Sci Med. 2018;5:e000258.

20. Aringer M, Costenbader K, Daikh D, et al. 2019 European League Against Rheumatism/American College of Rheumatology Classification Criteria for Systemic Lupus Erythematosus. Arthritis Rheumatol. 2019;71:1400–12.

21. Furie RA, Petri MA, Wallace DJ, et al. Novel evidence-based systemic lupus erythematosus responder index. Arthritis Rheum. 2009;61:1143–51.

22. Wallace DJ, Kalunian K, Petri MA, et al. Efficacy and safety of epratuzumab in patients with moderate/severe active systemic lupus erythematosus: results from EMBLEM, a phase IIb, randomised, double-blind, placebo-controlled, multicentre study. Ann Rheum Dis. 2014;73:183–90.

23. Klein RS, Morganroth PA, Werth VP. Cutaneous lupus and the cutaneous lupus erythematosus disease area and severity index instrument. Rheum Dis Clin North Am. 2010;36(33–51):vii.

24. Franklyn K, Lau CS, Navarra SV, et al. Definition and initial validation of a Lupus Low Disease Activity State (LLDAS). Ann Rheum Dis. 2016;75:1615–21.

25. Furie R, Petri M, Zamani O, et al. A phase III, randomized, placebo-controlled study of belimumab, a monoclonal antibody that inhibits B lymphocyte stimulator, in patients with systemic lupus erythematosus. Arthritis Rheum. 2011;63:3918–30.

26. Furie RA, Morand EF, Bruce IN, et al. Type I interferon inhibitor anifrolumab in active systemic lupus erythematosus (TULIP-1): a randomised, controlled, phase 3 trial. Lancet Rheumatol. 2019;1:e208–19.

27. Morand EF, Furie R, Tanaka Y, et al. Trial of anifrolumab in active systemic lupus erythematosus. N Engl J Med. 2020;382:211–21.

28. Mahieu MA, Strand V, Simon LS, Lipsky PE, Ramsey-Goldman R. A critical review of clinical trials in systemic lupus erythematosus. Lupus. 2016;25:1122–40.

29. Merrill JT, Neuwelt CM, Wallace DJ, et al. Efficacy and safety of rituximab in moderately-to-severely active systemic lupus erythematosus: the randomized, double-blind, phase II/III systemic lupus erythematosus evaluation of rituximab trial. Arthritis Rheum. 2010;62:222–33.

30. Connelly K, Golder V, Kandane-Rathnayake R, Morand EF. Clinician-reported outcome measures in lupus trials: a problem worth solving. Lancet Rheumatol. 2021;3:e595-603.

31. US Food and Drug Administration. BENLYSTA (belimumab) prescribing information [Internet]. 2018 [2024 August 27]. Available from: https://www.accessdata.fda.gov/drugsatfda_docs/label/2018/125370s062%2C761043s002lbl.pdf. Accessed Aug 27, 2024.

32. European Medicines Agency. BENLYSTA (belimumab) summary of product characteristics [Internet]. 2017 [2024 August 27]. Available from: https://www.ema.europa.eu/en/documents/product-information/benlysta-epar-product-information_en.pdf. Accessed Aug 27, 2024.

33. GSK. BENLYSTA (belimumab) product monograph [Internet]. 2025 [2024 August 27]. Available from: https://ca.gsk.com/media/6151/benlysta.pdf. Accessed Aug 27, 2024.

34. US Food and Drug Administration. SAPHNELO (anifrolumab-fnia) prescribing information [Internet]. 2021 [2024 August 27]. Available from: https://www.accessdata.fda.gov/drugsatfda_docs/label/2021/761123s000lbl.pdf. Accessed Aug 27, 2024.

35. European Medicines Agency. SAPHNELO (anifrolumab-fnia) summary of product characteristics [Internet]. 2024 [2024 August 27]. Available from: https://ec.europa.eu/health/documents/community-register/2022/20220214154608/anx_154608_en.pdf. Accessed Aug 27, 2024.

36. Therapeutic Goods Administration. SAPHNELO (anifrolumab-fnia) product information [Internet]. 2022 [2024 August 27]. Available from: https://www.tga.gov.au/sites/default/files/2022-12/ausparsaphnelo-221206-pi.pdf. Accessed Aug 27, 2024.

37. AstraZeneca. SAPHNELO (anifrolumab-fnia) product monograph [Internet]. 2024 [2024 August 27]. Available from: https://www.astrazeneca.ca/content/dam/az-ca/downloads/productinformation/saphnelo-product-monograph-en.pdf. Accessed Aug 27, 2024.

38. Nikolopoulos D, Parodis I. Janus kinase inhibitors in systemic lupus erythematosus: implications for tyrosine kinase 2 inhibition. Front Med (Lausanne). 2023;10:1217147.

39. Langefeld CD, Ainsworth HC, Cunninghame Graham DS, et al. Transancestral mapping and genetic load in systemic lupus erythematosus. Nat Commun. 2017;8:16021.

40. Wrobleski ST, Moslin R, Lin S, et al. Highly selective inhibition of tyrosine kinase 2 (TYK2) for the treatment of autoimmune diseases: discovery of the allosteric inhibitor BMS-986165. J Med Chem. 2019;62:8973–95.

41. Chimalakonda A, Burke J, Cheng L, et al. Selectivity profile of the tyrosine kinase 2 inhibitor deucravacitinib compared with janus kinase 1/2/3 inhibitors. Dermatol Ther (Heidelb). 2021;11:1763–76.

42. Le AM, Puig L, Torres T. Deucravacitinib for the treatment of psoriatic disease. Am J Clin Dermatol. 2022;23:813–22.

43. Ma L, Peng L, Zhao J, et al. Efficacy and safety of Janus kinase inhibitors in systemic and cutaneous lupus erythematosus: a systematic review and meta-analysis. Autoimmun Rev. 2023;22:103440.

44. Morand EF, Vital EM, Petri M, et al. Baricitinib for systemic lupus erythematosus: a double-blind, randomised, placebo-controlled, phase 3 trial (SLE-BRAVE-I). Lancet. 2023;401:1001–10.

45. Petri M, Bruce IN, Dorner T, et al. Baricitinib for systemic lupus erythematosus: a double-blind, randomised, placebo-controlled, phase 3 trial (SLE-BRAVE-II). Lancet. 2023;401:1011–9.

46. Yap DY, Lai KN. Cytokines and their roles in the pathogenesis of systemic lupus erythematosus: from basics to recent advances. J Biomed Biotechnol. 2010;2010:365083.

47. Rusiñol L, Puig L. Tyk2 targeting in immune-mediated inflammatory diseases. Int J Mol Sci. 2023;24:3391.

48. Richter P, Macovei LA, Mihai IR, Cardoneanu A, Burlui MA, Rezus E. Cytokines in systemic lupus erythematosus-focus on TNF-alpha and IL-17. Int J Mol Sci. 2023;24:14413.

49. Ronnblom L, Leonard D. Interferon pathway in SLE: one key to unlocking the mystery of the disease. Lupus Sci Med. 2019;6:e000270.

50. Oke V, Gunnarsson I, Dorschner J, et al. High levels of circulating interferons type I, type II and type III associate with distinct clinical features of active systemic lupus erythematosus. Arthritis Res Ther. 2019;21:107.

51. Larosa M, Zen M, Gatto M, et al. IL-12 and IL-23/ Th17 axis in systemic lupus erythematosus. Exp Biol Med (Maywood). 2019;244:42–51.

52. Zickert A, Amoudruz P, Sundstrom Y, Ronnelid J, Malmstrom V, Gunnarsson I. IL-17 and IL-23 in lupus nephritis - association to histopathology and response to treatment. BMC Immunol. 2015;16:7.

53. Armstrong AW, Gooderham M, Warren RB, et al. Deucravacitinib versus placebo and apremilast in moderate to severe plaque psoriasis: efficacy and safety results from the 52-week, randomized, double-blinded, placebo-controlled phase 3 POETYK PSO-1 trial. J Am Acad Dermatol. 2023;88:29–39.

54. Korman NJ, Passeron T, Okubo Y, et al. Deucravacitinib in plaque psoriasis: laboratory parameters through 4 years of treatment in the phase 3 POETYK PSO-1, PSO-2, and LTE trials. J of Skin. 2024;8: s419.

55. Carli L, Tani C, Vagnani S, Signorini V, Mosca M. Leukopenia, lymphopenia, and neutropenia in systemic lupus erythematosus: prevalence and clinical impact–a systematic literature review. Semin Arthritis Rheum. 2015;45:190–4.

56. Kandane-Rathnayake R, Louthrenoo W, Golder V, et al. Independent associations of lymphopenia and neutropenia in patients with systemic lupus erythematosus: a longitudinal, multinational study. Rheumatology (Oxford). 2021;60:5185–93.

Phase 3 · Clinical results

2025-12-22

· Xueqiu

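Overview

The global pharmaceutical industry is at a historic inflection point: the convergence of bioscience and high-performance computing (HPC) is fundamentally reshaping the underlying economics of drug discovery. This report examines how major global pharmaceutical companies procured artificial intelligence (AI) compute resources in 2025, in particular graphics processing units (GPUs) and token consumption, and gives a forward-looking forecast of the demand trajectory for 2026. Its central argument focuses on the question investors care about most: is the industry about to see a "Davis Double Play", with margin expansion and valuation re-rating driven by AI efficiency gains, or a "Davis Double Kill", in which soaring infrastructure costs squeeze margins while market disappointment with AI output compresses valuations?

The 2025 data indicate that the industry has moved from the pilot stage to building "AI factories" at industrial scale. Procurement has shifted from renting generic cloud resources to building dedicated infrastructure with data sovereignty. Leading pharmaceutical companies are in an arms race, exemplified by Eli Lilly's deployment of an Nvidia DGX SuperPOD containing more than a thousand Nvidia B300 GPUs [1] and AstraZeneca's heavy investment in antibody language models. Although capital expenditure (CapEx) and operating expenditure (OpEx) on compute put short-term pressure on free cash flow, token consumption in generative biology is doubling every 6-12 months [5], which points to large and inelastic demand in 2026.

Deeper financial analysis, however, reveals significant downside risk. The "Davis Double Kill" is not alarmism. Wall Street analysts are scrutinizing the return on invested capital (ROIC) of AI spending ever more strictly, noting that compute costs are inflationary while drug pricing power faces deflationary pressure from payers and the Inflation Reduction Act (IRA). In addition, the geopolitical fragmentation of data supply chains driven by the US Biosecure Act is forcing companies into costly duplication of infrastructure, imposing a de facto "sovereignty tax" on the global R&D ecosystem.

Chapter 1. The 2025 global pharmaceutical compute procurement landscape: the capital expenditure leap from "experiment" to "factory"

In 2025, compute procurement in the global pharmaceutical industry is no longer a tactical IT budget item; it has become a board-level priority in strategic capital allocation. The shift is marked by a redefinition of "compute", an exponential jump in procurement scale, and a fundamental change in deployment models. No longer satisfied with generic public cloud instances, top pharmaceutical companies are racing to lock in HPC assets comparable to those of national laboratories.

1.1 The rise of the "AI factory": a new paradigm of vertical integration in pharma

The most decisive trend of 2025 is the arrival of the "AI factory" concept in pharma. This is more than an upgraded notion of the data center. It is the physical embodiment of a new R&D model: a move from the traditional "wet lab led" approach to a closed loop that is "compute led, wet lab validated". Such an architecture requires compute not only for data analysis but also for large-scale, continuous hypothesis generation.

Eli Lilly: the vertical integrator's "compute moat"

Lilly has become the archetype of the "vertical integrator" in this field. In October 2025 the company announced a collaboration with Nvidia to build the most powerful AI supercomputer in the pharmaceutical industry. The system is not simple hardware stacking; it is meant to drive a complete "AI factory" that manages the full lifecycle from data ingestion to high-throughput inference.

Hardware specification and strategic intent: The system deploys more than 1,000 Nvidia Blackwell (B300) GPUs and is the first Nvidia DGX SuperPOD system in the world to be deployed inside a pharmaceutical company. Its compute is used not only to process the public literature but, more centrally, to exploit Lilly's internal "negative data", that is, data from millions of failed experiments over the past 150 years.

Competitive logic: Lilly's strategic reasoning is that public AI models (such as GPT-4 or the base BioNeMo) are trained mainly on published successes (positive data), whereas real predictive power often comes from understanding failure. By owning proprietary compute infrastructure, Lilly can train private models in-house to predict a molecule's probability of failure, building a "compute moat" that competitors relying on public models cannot replicate. In addition, the B300 architecture's higher token throughput per unit of energy compared with the H100 suggests that Lilly is preparing for extremely complex agentic AI workflows, which require very high inference density.

AstraZeneca: the ecosystem orchestrator's "hybrid compute"

Unlike Lilly's asset-heavy model, AstraZeneca has adopted a "networker" strategy. This does not mean its compute needs are smaller; its procurement simply takes more varied forms.

Genomic and antibody computing: AstraZeneca aims to analyze up to 2 million genomes by 2026, which requires enormous data-processing capacity. It is also investing heavily in antibody drug R&D, which involves deep search of antibody sequence space. Training and running antibody language models consumes far more compute than small-molecule work, because antibodies are large molecules and the degrees of freedom in structure prediction grow exponentially.

Procurement model: Through a USD 555 million agreement with Algen Biotechnologies, AstraZeneca has indirectly procured specific AI compute capability [3]. Under this model, part of the compute cost is internalized in milestone payments and royalties. Internally, however, the volume of tokens it purchases for API calls and model fine-tuning, in support of its deep collaborations with Google Cloud and AWS, is growing exponentially.

1.2 Hardware architecture iteration: the generational leap from Hopper to Blackwell

The 2025 procurement cycle marks a full transition from the Nvidia Hopper (H100) architecture to the Blackwell (B200/B300) architecture. The transition is not a simple performance upgrade; it is driven by the particular physical properties of generative biology models.

Not only compute, but a war over memory bandwidth: Generative biology models are memory-bandwidth intensive as well as compute intensive. Traditional bioinformatics tasks (such as sequence alignment) are demanding on CPUs, whereas deep-learning-driven protein folding (such as AlphaFold 3) is limited by GPU memory. The HBM3e high-bandwidth memory of the Blackwell architecture addresses this bottleneck. Nvidia states that Blackwell's efficiency gains reduce energy consumption per token by a factor of 25, which, for pharmaceutical companies planning to deploy "AI agents" running around the clock in 2026, is the only way to keep electricity bills under control.

1.3 Cloud versus on-premises: the rise of neoclouds

In the 2025 procurement landscape, the traditional binary of "public cloud versus on-premises" has evolved into a more complex ecosystem. Besides the three hyperscalers (AWS, Azure, and Google Cloud), "neoclouds" focused on AI compute, such as CoreWeave, Lambda, and Nebius, are gaining ground in pharma.

The neocloud value proposition

For biotechnology companies, neoclouds offer two core benefits:

Immediate availability of scarce hardware: On AWS and Azure, the newest H100/B200 clusters often involve a waiting list and are supplied to large-model companies first. Vendors such as CoreWeave have stockpiled large GPU inventories and can give drug companies immediate "bare metal" access.

A cost structure optimized for AI: Compared with the complex virtualization overhead of general-purpose clouds, neoclouds offer a minimal architecture optimized for AI training, and they are often more cost-effective for "bursty" demand that involves several weeks of intensive model training.

Hybrid architectures and the return of data sovereignty

Although cloud compute is growing, the trend toward data "repatriation" was equally visible in 2025. Constrained by increasingly strict data sovereignty rules (such as the GDPR and the US Biosecure Act), pharmaceutical companies are moving core IP data (such as compound library structures and preclinical trial data) from the public cloud back to private clouds or on-premises data centers. Forrester predicts that by 2026, 15% of enterprises will seek to build "private AI" on private clouds to avoid the risk of their data being used to train public models [14]. This means that pharmaceutical procurement lists will include, in addition to cloud tokens, large numbers of on-premises servers and storage devices, raising the total cost of ownership (TCO).

Chapter 2. The economics of generative biology: tokens, inference costs, and the restructuring of R&D

With the deep integration of generative AI, the unit of measurement in pharmaceutical R&D is changing fundamentally. Traditional "wet lab hours" or "full-time equivalent (FTE) cost" are being supplemented, or even replaced, by "token consumption" and "inference cost". In 2025 the industry is in the painful transition from a capital-intensive physical experimentation model to an OpEx-intensive computational model.

2.1 Token economics: inflation or deflation?

To understand how AI affects pharmaceutical financial statements, two phases of compute spending must be distinguished: training and inference.

Training costs: large sunk capital

Training a domain-specific foundation model (such as a proprietary protein language model or a chemical structure generator) is a one-off, large capital expenditure event.

Magnitude: In 2025, the compute cost of training a state-of-the-art biological foundation model from scratch is typically USD 50 million to USD 100 million, excluding data cleaning and compensation for top talent.

Accounting treatment: These costs are usually capitalized and amortized as intangible assets, so they do not hit the current income statement immediately. This fits the "Davis Double Play" logic of building long-term assets through investment.

Trend: Although the cost of training frontier models is rising, the cost of fine-tuning existing models (such as Llama 3 or BioNeMo) is falling. Most drug companies do not start from zero but fine-tune on platforms such as Nvidia BioNeMo, which lowers the technical barrier considerably and allows mid-sized biotechnology companies to join the race.

Inference costs: the "variable tax" on R&D

Inference, that is, using a model to generate predictions or design molecules, is the main source of variable cost. This is where the "token consumption" metric becomes critical.

Token explosion: In 2025, pharmaceutical companies began deploying agentic AI. Unlike a simple human-machine dialogue, an agentic system that receives an instruction such as "design a binder against target X" autonomously carries out literature search, hypothesis generation, and multi-parameter optimization loops. A single drug design task may cause the agent to generate millions of "chain of thought" tokens [18].

Cost dynamics: Although gains in chip efficiency are lowering the cost per token (0.4 joules/token), the total number of tokens is growing exponentially. This resembles the Jevons paradox: as lighting became cheaper, we did not save on electricity but lit more places. Likewise, as biological inference becomes cheaper, drug companies do not reduce compute spending but expand the screening scope from thousands of molecules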
大到数十亿个。财务影响:这导致了运营支出(OpEx)的膨胀。例如,RecursionPharmaceuticals报告其营收成本和研发费用显著增加,部分原因正是其“Phenomaps”平台和整合Exscientia自动化化学带来的巨大计算强度。2.2湿实验与干实验(InSilico)的替代经济学AI在制药领域的核心经济承诺是替代效应:用廉价、快速的数字模拟替代昂贵、缓慢的湿实验。成本套利分析湿实验:传统的高通量筛选(HTS)活动可能测试100万个化合物,每个孔(well)的成本在0.10美元到1.00美元之间,且耗时数月。干实验(InSilico):使用BioNeMo等平台进行的虚拟筛选,每个化合物的推理成本仅为微美分级别,且可在数天内完成。AlphaFold3案例:运行复杂的结构预测(如AlphaFold3)虽然计算密集,但仍具有巨大的成本优势。案例研究显示,优化的HPC策略可将成本降低67%。更关键的是,通过实验确定一个蛋白质结构(如冷冻电镜Cryo-EM)成本超过5000美元,而AI预测成本低于5美元。“实验室在环”(Lab-in-the-Loop)的成本现实然而,2025年的数据表明,AI并没有完全取代湿实验,而是改变了其功能。从发现到验证:在旧模式下,湿实验用于发现(盲筛)。在新模式下(如Sanofi、Recursion),湿实验用于验证(测试AI的预测)和数据生成(为AI提供训练素材)。双重成本结构:目前,企业不得不承担双重成本。他们既要建设昂贵的AI工厂(算力),又要维持甚至扩大自动化湿实验室(机器人)来喂养AI。案例:Recursion的商业模式依赖于通过大规模湿实验生成PB级的生物图像数据来训练模型。风险:除非AI的准确度达到极高水平,能够几乎完全跳过湿实验(实现“零样本”预测),否则行业将在短期内面临利润率下降的压力。因为企业叠加了新的IT成本,却尚未完全剥离旧的实验成本。这为“戴维斯双杀”提供了基本面依据——成本上升先于效率变现。2.3ROI的审视:来自资本市场的质疑投资者和CFO们开始对缺乏有形回报的“AI溢价”表示怀疑。投资规模:行业正在向AI投入数千亿美元(高盛估计到2026年全球科技资本支出超5000亿美元,其中医疗占重要比例)。回报滞后:尽管AI能加速靶点发现,但临床试验(1-3期)的物理时间依然漫长。有形的回报(获批药物)具有滞后性。高盛的警告:高盛分析师JimCovello发出警告:“支出太多,收益太少”(Toomuchspend,toolittlebenefit)。如果AI仅仅是以6倍的成本(昂贵的GPU和人才)来加速模型更新,而不能解决根本的生物学难题,那么其价值主张就是失败的。利润率压力:德勤2025年的调查显示,尽管AI投资在增加,但许多企业的ROI依然“难以捉摸”。企业发现,AI的“最后一公里”——系统集成、变革管理和监管合规——比购买GPU本身要昂贵得多。第三章临床验证的真相:AI药物研发的“成功率”迷雾与信任缺口“戴维斯双击”的财务逻辑——即AI将推动估值倍数重估——完全通过一个核心假设来支撑:AI能够显著提高临床试验的成功概率(PoS)。如果AI设计的药物在临床阶段的失败率与传统药物无异,那么数十亿美元的算力投入不过是一种昂贵的“快速失败”(FailFast)手段。2025年,行业积累了足够的临床数据,开始通过事实检验这一假设。3.1“80-90%成功率”神话的解构2024至2025年间,一个被广泛引用的统计数据是:AI发现的分子的I期临床成功率高达80-90%,而历史平均水平仅为40-65%。这一数据经常被用来为“AI优先”生物技术公司的高估值辩护。多头观点理化性质优化:AI模型(如生成化学模型)非常擅长优化分子的物理化学性质——溶解度、渗透性、代谢稳定性。这些正是导致药物在I期(主要测试安全性/药代动力学)失败的主要原因。因此,AI药物更易通过I期不仅符合逻辑,也被数据所证实。资本效率:更快、更少失败地通过I期本身就节省了大量资本。这支持了提高研发效率和扩张利润率的论点。空头反击:II期临床的“死亡之谷”安全不等于有效:通过I期仅仅证明药物是安全的,并不证明它有效。药物开发的真正杀手是II期临床(有效性验证)。数据现实:最近的分析表明,AI设计的分子在II期临床的成功率并没有显示出统计学上的显著提升。根本原因:瓶颈在于生物学,而非化学。AI可以设计出一把完美的钥匙(分子)来打开一把锁(靶点),但如果这把锁本身选错了(错误的疾病靶点),药物依然会失败。当前的AI模型在化学结构生成上已臻化境,但在理解复杂的疾病因果生物学方面仍显稚嫩。结论:高I期成功率可能制造了一个“假阳性”信号,在早期推高估值,却在II期面临剧烈的估值修正。这种动态加剧了AI生物科技股的波动性。3.2高调的
临床挫折:2024-2025年的“现实检验”几次备受瞩目的失败打击了市场的狂热情绪,为“戴维斯双杀”场景提供了弹药。BenevolentAI(BEN-2293)失败案例其用于治疗特应性皮炎的局部泛Trk抑制剂BEN-2293在II期临床未能达到次要疗效终点。影响:这次失败导致公司裁员30%并进行战略重组。它证明即使是复杂的“知识图谱”AI平台也无法保证生物学上的有效性。市场教训:AI无法消除基础生物学的不确定性。市场通过压缩估值倍数来惩罚这一现实,预演了“双杀”的一条腿。Recursion(REC-994)失败案例2025年5月,Recursion因长期数据未能确认疗效趋势,停止了其治疗脑海绵状血管畸形的主要候选药物REC-994的开发。象征意义:Recursion是“工业化发现”的典型代表。其主要资产的失败质疑了“更多数据=更好药物”这一核心前提。细微差别:虽然Recursion辩称REC-994是早期资产,不能完全代表其更新后的“Phenomap”平台,但投资者对“这次不一样”的叙事已变得警惕。Exscientia的合并事件作为首个将AI设计药物推向临床的公司,Exscientia最终与Recursion合并,交易价值约6.88亿美元。解读:尽管官方宣称为强强联合,但市场普遍将其视为一种防御性整合。Exscientia此前曾面临管线优先级调整和成本削减。这表明,如果没有重磅商业化药物的支撑,纯粹的AI平台公司难以独立生存。3.3“计算机模拟”(InSilico)临床试验:真正的通缩力量?尽管分子发现AI面临挑战,但临床执行AI(优化试验本身)正在交付实实在在的、具有通缩效应的价值。市场增长:计算机模拟临床试验市场预计到2033年将达到63.9亿美元。机制:企业利用患者的“数字孪生”(DigitalTwins)来模拟对照组。这意味着招募更少的真实患者用于安慰剂组。ROI:这直接降低了试验成本(患者招募是最大的成本驱动因素)。安进(Amgen)报告称,利用AI工具将临床试验招募速度提高了一倍。监管背书:FDA和EMA日益接受这种方法用于剂量优化和合成对照组。这是一个改变游戏规则的因素,允许更小、更快、更便宜的试验。财务含义:这是支持“戴维斯双击”的最强论据。如果AI能将III期临床(最昂贵的阶段)成本降低20-30%,对研发利润率的影响是巨大且立竿见影的,这比投机性的药物发现收益更具确定性。第四章地缘政治与数据主权:生物安全法案下的算力与数据供应链断裂2025年的算力采购格局不仅由技术决定,更受到日益割裂的地缘政治秩序的深刻重塑。中美技术竞争已延伸至生物技术领域,导致了基因组数据和AI基础设施的“分裂网”(Splinternet)。对于制药公司而言,这引入了一个高度通胀的新变量:主权成本。4.1美国《生物安全法案》(BiosecureAct):结构性破坏作为《国防授权法案》(NDAA)的一部分通过的美国《生物安全法案》,从根本上改变了全球研发供应链。该法案禁止获得美国联邦资金资助的实体与“受关注的生物技术公司”(明确点名药明康德WuXiAppTec、华大基因BGI、MGI等中国巨头)进行合作。“药明康德缺口”与基础设施重复建设药明康德历史上一直是生物技术领域的“世界工厂”,提供低成本、高质量的湿实验和数据服务。影响:西方药企无法再依赖这一高效的基础设施。它们必须将这些能力“回流”(Re-shore)或“友岸外包”(Friend-shore)。脱钩成本:替换药明康德的产能是资本密集型的。这需要在美国、欧洲或印度建设新的设施。对于资本支出(CapEx)而言,这是一个通胀因素。AI的介入:药明康德也是主要的数据生成者。这一数据流的切断造成了“数据真空”。企业正在通过投资国内的“AI工厂”和机器人实验室(如礼来的设施)来填补这一空白。这加速了向AI的转型(用西方算力替代中国劳动力),但也提高了短期成本基数。数据集的二元分化法案同样限制了基因数据的跨境传输。中国的回应:中国收紧了自己的数据出境法律。西方AI模型无法再利用中国患者的数据进行训练,反之亦然。科学后果:多样性对AI的鲁棒性至关重要。仅在西方基因组上训练的模型可能在亚洲人群中表现不佳。这迫使跨国药企训练两个独立的基础模型:一个针对西方市场,一个针对中国市场。财务后果:这是效率低下的定义。它使全球药企在两个市场上运营的训练成本(算力、数据清洗)翻倍。这相当于对研发利润率征收了一笔“主权税”。4.2数据主权与“新云”的区域化将数据保留在特定司法管辖区内的需求正在重塑云采购策略。主权云的崛起:欧盟AI法案与GDPR:欧洲正在强制执行严格的数据驻留规定。这推动了对“主权云”(SovereignClouds)的需求——即物理上位于欧盟境内并由欧盟实体运营的基础设施。私有AI:为了降低数据
泄露或监管违规的风险,药企越来越多地将工作负载从公有云撤回至私有AI基础设施。这允许它们运行“物理隔离”(air-gapped)的模型。战略风险:纯粹依赖美国超大规模云厂商(AWS/Azure)被欧洲和亚洲政府视为一种风险。我们正在目睹云市场的“巴尔干化”。4.3供应链脆弱性:作为战略资产的GPUH100/BlackwellGPU本身的供应也受到地缘政治风险的影响。出口管制:美国限制向中国出口高端芯片。这意味着西方药企和中国药企之间的“AI能力差距”将扩大。竞争优势:西方药企(礼来、辉瑞)拥有获取最佳硬件(NvidiaB300)的不受限途径。中国竞争对手必须依赖国产芯片(如华为Ascend),其性能目前仍有差距。长期视角:这可能赋予西方药企暂时的“计算护城河”,支持欧美制药公司的估值溢价(戴维斯双击的潜在动力)。然而,这也激励中国加速其芯片研发,可能导致到2030年出现技术路线的分化。第五章2026年展望:代理体(Agentic)AI时代的指数级需求爆发展望2026年,制药行业的算力需求预计将经历一次“相变”。我们将从2023-2024年的实验阶段和2025年的建设阶段,迈入2026年的代理体阶段。这一转变将推动Token和推理算力需求的超指数级增长。5.1需求曲线:超指数级增长行业分析师和技术专家的共识是,生物技术领域的AI算力需求正在超过供给。倍增率:生物技术的算力需求每6-12个月翻一番。预测:到2026年,用于生物推理的Token处理量将比2025年增加十倍。驱动因素:代理体AI(AgenticAI):如前所述,代理体在连续循环中运行。一个“设计代理体”不仅输出一个答案,还会自我批判、迭代和精炼,为每个单次的人类查询消耗数千个Token18。多模态模型:模型正在超越单一的文本或蛋白质结构。2026年的模型将把基因组学、蛋白质组学、影像学和临床文本整合到单一的“大型定量模型”(LargeQuantitativeModels)中。这些模型的规模和计算强度将高出几个数量级。数字孪生:用于计算机模拟试验的全尺寸患者数字孪生部署48将需要持续的模拟,推动高性能集群的持续高负载运行。5.2从“发现”向“生成性设计”的范式转移到2026年,行业将坚定地从“发现”(寻找已存在之物)转向“设计”(工程化所需之物)。Nvidia的预测:Nvidia高管预测,到2026年,“药物发现和设计AI工厂将消耗所有湿实验数据……使行业从发现过程转变为设计和工程过程”。含义:这改变了算力采购的性质。从突发到基载:公司不再是为了特定的筛选活动而“突发”使用算力,而是运行“基载”算力来驱动连续的设计引擎。推理主导:支出将从训练(构建模型)转向推理(使用模型)。这有利于Blackwell架构(低能耗/Token),而非Hopper架构。5.3基础设施约束:“电力墙”2026年的限制因素将不再是芯片,而是电力。电力紧缩:随着全球AI数据中心需求达到200吉瓦(GW),药企将与大型科技公司争夺电力容量。成本影响:我们预计数据中心的电力成本将上升,从而推高云服务价格。战略优势:那些锁定了长期购电协议(PPA)或在能源丰富地区(如拥有北欧水电资源的诺和诺德)建设基础设施的公司,将拥有结构性的成本优势。第六章财务分析:戴维斯双击还是双杀?本章将技术和运营分析综合为一个财务框架,直接回应核心问题:我们面临的是戴维斯双击(估值↑,盈利↑)还是戴维斯双杀(估值↓,盈利↓)?6.1“戴维斯双击”的机制要实现双击,必须满足两个条件:盈利增长(EPS↑):AI必须通过研发效率的提升真正驱动利润率扩张。倍数扩张(P/E↑):市场必须将制药行业视为“成长型科技”行业,而非“价值型”行业。多头情景:效率收益:如果AI能将临床试验成本降低25-50%50,并将发现时间缩短50%51,研发利润率将显著扩大。科技股般的倍数:表现优异者(如礼来、诺和诺德)的市盈率已与其传统同行(如辉瑞)脱钩,交易倍数显著提升。这表明市场已经在定价“AI溢价”。可防御的护城河:拥有“AI工厂”和专有数据(礼来)的公司被视为拥有可防御的护城河,证明其享有类似大型科技公司的“质量溢价”。6.2“戴维斯双杀”的机制双杀情景是AI转型破坏价值的风险所在。条件1:利润率压缩(EPS↓)“资本开支陷阱”:建设AI工厂极其昂贵。如果这些成本(折旧+运营支出)叠加在现有的湿实验成本之上(即处于“双重成本”时期),2025-2026年的利润率将受到压缩。通缩性定价(“技术通缩”效应):这是最深刻的风险。技术本质上是通缩的。如果AI使药物发现变得“容易”和“便宜”,新分子的稀缺性价值就会下降。支付方压力:卫生技术评估(HTA)机构(如ICER)和支付方(通过IRA谈判)将利用研发成本下降的事实作为理由,要求降低药品报销价格:“既
然你们用AI把开发成本减半了,我们就应该支付一半的价格”。结果:效率收益被消费者(支付方)而非股东攫取。收入下降,而固定成本(AI基础设施)居高不下。条件2:倍数收缩(P/E↓)幻灭感:如果备受瞩目的AI药物在II期临床失败(如Recursion/BenevolentAI案例所示),“AI溢价”将蒸发。市场会将制药板块重新评级回低增长倍数。高盛的警告:“支出太多,收益太少”的叙事表明,如果ROIC不能改善,投资者可能会对资本开支周期感到厌倦。6.3定量评估:2025-2026展望估值分化:目前行业呈两极分化。“AI赢家”(礼来、诺和诺德)的PE倍数超过30倍;“传统药企”(辉瑞、BMS)则低于15倍。风险:“AI赢家”的定价反映了完美的预期(双击)。任何临床失误或利润率压缩都会触发剧烈的去评级。结论:对于高飞的“AI优先”生物技术公司和拥有未验证临床押注的“垂直整合者”,戴维斯双杀是一个重大风险。然而,对于“传统”玩家,风险更多在于被淘汰,而非估值崩塌(因为估值已经很低)。6.4“研发税”的现实到2026年,AI算力很可能被视为一种强制性的经营成本(“研发税”),而非差异化因素。类比:就像电力或互联网没有永久性地提高全行业的利润率(竞争会抹平收益)一样,AI带来的效率提升很可能会被竞争所抵消,最终导致更低的药品价格和长期稳定的(而非扩张的)利润率。赢家:AI药物趋势的终极赢家可能不是制药股,而是基础设施提供商(Nvidia,AWS,Neoclouds),他们出售“铲子”,无论临床成功与否,都能从资本开支周期中获取利润。第七章结论与投资建议本研究确认,2025年是AI算力资本部署极其激进的一年,其驱动力是将药物发现工业化的战略紧迫感。向“AI工厂”、BlackwellGPU和代理体工作流的转移是不可否认的事实。然而,“戴维斯双击”远非板上钉钉。该行业面临着强大的逆风:技术上的II期瓶颈、地缘政治上的生物安全法案成本、以及经济上的通缩性定价压力。2026年最可能的结果是一个利润率波动期。同时运行湿实验和AI工厂的“双重成本”将拖累盈利,而估值中的“AI溢价”依然脆弱。战略建议:投资者应保持选择性。规避那些缺乏临床验证资产的“AI优先”纯生物技术公司(高“双杀”风险)。关注拥有资产负债表来度过高资本开支周期、并拥有专有数据以真正兑现生成式生物学承诺的“垂直整合者”(如礼来)。最安全的Alpha依然在于推动这场革命的算力基础设施提供商,它们处于价值链的上游,享受着行业刚性需求的红利。来源:网页链接
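The "double play" and "double kill" framing in Chapter 6 comes down to one identity: share price equals earnings per share times the P/E multiple, so a move in both compounds multiplicatively. The sketch below illustrates that arithmetic only. The function name `price_change` and the 20% EPS moves are hypothetical choices for illustration. The 15x and 30x multiples are borrowed from the valuation ranges quoted in section 6.3 and are not forecasts.

```python
def price_change(eps_growth: float, pe_start: float, pe_end: float) -> float:
    """Fractional share-price change when EPS and the P/E multiple both move.

    Since price = EPS * (P/E), the price factor is
    (1 + eps_growth) * (pe_end / pe_start).
    """
    if pe_start <= 0:
        raise ValueError("pe_start must be positive")
    return (1.0 + eps_growth) * (pe_end / pe_start) - 1.0


# Hypothetical scenarios for illustration only (assumed inputs, not report data).
scenarios = {
    # Earnings up 20% while the multiple re-rates from 15x to 30x.
    "double play": price_change(eps_growth=0.20, pe_start=15.0, pe_end=30.0),
    # Earnings down 20% while the multiple de-rates from 30x to 15x.
    "double kill": price_change(eps_growth=-0.20, pe_start=30.0, pe_end=15.0),
}

for name, change in scenarios.items():
    print(f"{name}: {change:+.0%}")
```

Under these assumed inputs the double play works out to a price gain of 140% and the double kill to a loss of 60%. The asymmetry shows why a stock priced for perfection is sensitive to any de-rating.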

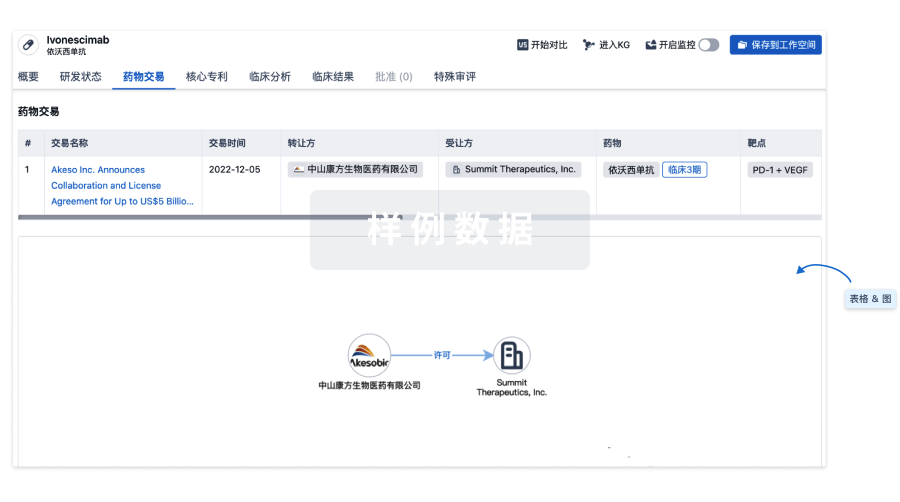

100 drug deals related to Peginterferon lambda-1a (ZymoGenetics, Inc.)

R&D status

The 10 most advanced records

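| Indication | Highest R&D status | Country/region | Company | Date |
|---|---|---|---|---|
| Novel coronavirus infection (COVID-19) | Phase 3 | - | | 2022-03-17 |
| Chronic hepatitis D | Phase 3 | United States | | 2021-12-21 |
| Chronic hepatitis D | Phase 3 | Belgium | | 2021-12-21 |
| Chronic hepatitis D | Phase 3 | Bulgaria | | 2021-12-21 |
| Chronic hepatitis D | Phase 3 | France | | 2021-12-21 |
| Chronic hepatitis D | Phase 3 | Germany | | 2021-12-21 |
| Chronic hepatitis D | Phase 3 | Israel | | 2021-12-21 |
| Chronic hepatitis D | Phase 3 | Italy | | 2021-12-21 |
| Chronic hepatitis D | Phase 3 | Moldova | | 2021-12-21 |
| Chronic hepatitis D | Phase 3 | Romania | | 2021-12-21 |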


Clinical results

| Study | Phase | Population | Evaluated (n) | Arm | Result | Evaluation | Published |
|---|---|---|---|---|---|---|---|
| | Phase 3 | | 453 | | [login required] | - | 2023-06-13 |
| | | | | | [login required] | | |
| | Phase 2 | | 33 | Lambda 180 μg | [login required] (…, 1.81) | - | 2023-01-17 |
| | | | | Lambda 120 μg | [login required] (…, 2.38) | | |
| | Phase 2 | | 14 | Lambda Treatment | [login required] | - | 2022-07-21 |
| | | | | Saline Placebo | [login required] | | |
| | Phase 2 | | 6 | Peginterferon Lambda Alfa-1a | [login required] | - | 2022-07-14 |
| | | | | Saline (Placebo) | [login required] | | |
| | Phase 2 | | 60 | Peginterferon lambda 180 μg | [endpoint obscured]: 2.42, P-value = 0.041 | Positive | 2021-05-01 |
| | | | | Placebo | | | |
| | Phase 2 | | 120 | Lambda (Lambda) | [login required] | - | 2021-04-22 |
| | | | | Lambda (Placebo) | [login required] | | |
| | Phase 2 | | 120 | | [login required] | Negative | 2021-03-30 |
| | | | | Placebo | [login required] | | |
| | Phase 3 | | 71 | Cohort A | [login required] | - | 2019-06-24 |
| | | | | Cohort B | [login required] | | |
| | Phase 3 | | 881 | Part A: Peginterferon Lambda-1a + RBV + TVR (Open Label) | [login required] | - | 2019-05-21 |
| | | | | Part B: Peginterferon Lambda-1a + RBV + TVR | [login required] | | |
| | Phase 3 | | 300 | HCV genotype (GT)-2 or -3 | [login required] | Positive | 2017-03-01 |
| | | | | HCV GT-1(a or b) or -4 | [login required] | | |


转化医学

使用我们的转化医学数据加速您的研究。

登录

或

药物交易

使用我们的药物交易数据加速您的研究。

登录

或

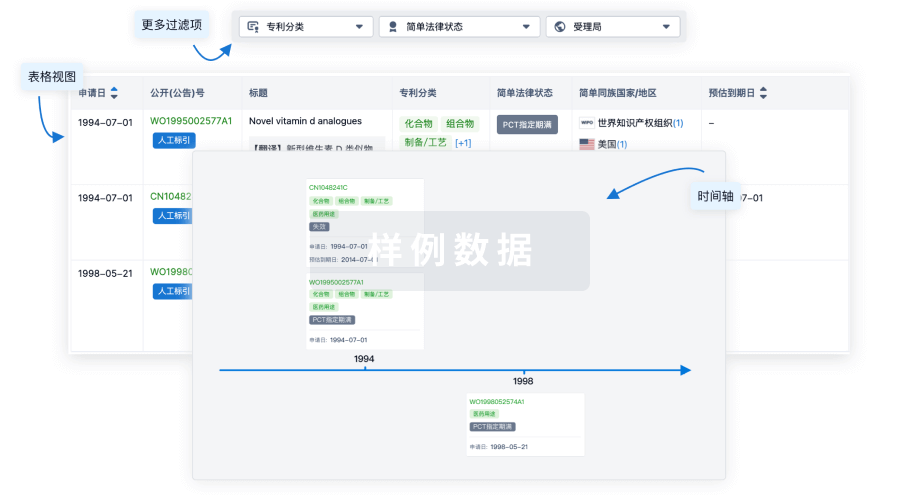

核心专利

使用我们的核心专利数据促进您的研究。

登录

或

临床分析

紧跟全球注册中心的最新临床试验。

登录

或

批准

利用最新的监管批准信息加速您的研究。

登录

或

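Biosimilars

Competitive landscape of biosimilars across countries and regions. Note that Phase 1/2 is counted as Phase 2, and Phase 2/3 is counted as Phase 3.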

